In 2017, a game-changing research paper titled “Attention is All You Need” was published by Vaswani et al at Google which gave birth to ChatGPT. This paper introduced a revolutionary neural network architecture called the Transformer model. This architecture has since played a crucial role in the creation of ChatGPT and has reshaped the world of natural language processing. Transformers is the magic sauce behind GPT, ChatGPT, DALL-E, PaLM, Bard and whatever you can think of in the last two years that looks like magic instead of ML.

In this blog post, we will break down the concepts from the “Attention is All You Need” paper and understand why the Transformer model is so significant.

Read more: Explained: What the hell is ChatGPT

The “Attention is All You Need” Paper

Imagine you’re working on an assignment with a group of friends. Each friend focuses on a specific aspect, like grammar, context, or keywords. The Transformer model works similarly—it divides the sentence into different aspects and processes them simultaneously.

Attention:

Attention is like each friend looking at the sentence and highlighting the important words related to their aspect. This way, the model pays attention to different parts of the sentence based on what’s important for a specific aspect.

Multi-Head Attention:

Instead of just one friend, we have multiple friends, each looking at the sentence and highlighting different important words. This helps the model consider various aspects simultaneously, making it more versatile.

Positional Encoding:

Since the model doesn’t naturally know the order of words, we add markers to the words to help it understand the sequence and position of each word in the sentence.

Training and Optimization:

We teach the model using examples (sentences with translations), and it learns to predict the translations. The model adjusts itself to get better at translations using a technique called optimization, like adjusting the focus of our friends to get better results.

Results:

The Transformer model, with its attention mechanism, showed exceptional performance in language translation tasks, beating existing models and doing it faster.

Read More: How Alexa and Siri Understand What You’re Feeling?

Before the “Attention” Paper

Before the advent of the Transformer model, recurrent neural networks (RNNs) and convolutional neural networks (CNNs) were the dominant frameworks for processing sequential and spatial data, respectively. These frameworks were widely used in natural language processing (NLP) and other sequential data tasks.

1. Recurrent Neural Networks (RNNs):

RNNs are designed to process sequential data by maintaining a hidden state that retains information about the past. Each step in the sequence considers both the current input and the hidden state from the previous step. This enables RNNs to capture dependencies and context from past inputs.

However, RNNs face challenges in capturing long-term dependencies effectively, a limitation known as the vanishing gradient problem. This issue occurs because information from earlier time steps diminishes as it propagates through the network during training, making it difficult for the model to capture relationships that are distant in the input sequence.

2. Convolutional Neural Networks (CNNs):

CNNs are primarily designed for processing grid-like data, such as images. They use convolutional layers to scan the input using filters, capturing local patterns and hierarchies of features.

In the context of NLP, CNNs can be used with one-dimensional convolutions to process sequences of words. However, they are limited in their ability to capture long-range dependencies and understand sequential relationships effectively.

Read More: ChatGPT Beats Humans in Chip Design Contest!

How the Transformer Model Differs from other methods:

The Transformer model introduced a new architecture that addressed the limitations of RNNs and CNNs, making it a game-changer in NLP and beyond.

Parallel Processing:

Unlike RNNs, the Transformer model enables efficient parallel processing. It processes the entire input sequence at once, thanks to its attention mechanism. Each word can attend to all other words in the sequence independently, allowing for effective parallelization and capturing long-range dependencies without sequential constraints.

Attention Mechanism:

The key innovation of the Transformer model is the attention mechanism. Attention allows the model to focus on different parts of the input sequence, learning relationships between words regardless of their positions. This is crucial for understanding the context and meaning of a given word in a sentence.

Multi-Head Attention:

The Transformer model employs multi-head attention, allowing it to learn multiple sets of attention weights in parallel. Each “head” focuses on a different aspect or perspective of the input, enhancing the model’s ability to capture various patterns and relationships within the data.

Positional Encoding:

To account for the lack of inherent positional information in the Transformer model, positional encodings are added to the input embeddings. These encodings help the model understand the order of words in the input sequence, addressing one of the limitations of traditional RNNs.

In summary, the Transformer model fundamentally differs from earlier frameworks by enabling parallel processing, utilizing attention mechanisms for relationship understanding, employing multi-head attention for diverse perspective capture, and incorporating positional encodings to preserve order information. These innovations collectively make the Transformer model highly effective for various sequence processing tasks, especially in NLP.

Read more: 7 Best Career Opportunities in AI and ML Engineering

Why Google Didn’t Build Upon the Research Paper

In 2017, a group of researchers at Alphabet Inc.’s headquarters in Mountain View, California, engaged in casual conversation during lunch, brainstorming ways to improve text generation efficiency using computers. Over the subsequent five months, they conducted experiments that unknowingly led to a groundbreaking discovery. This research was compiled into a paper titled “Attention is All You Need,” representing a major leap forward in artificial intelligence (AI).

Their paper introduced the Transformer, a revolutionary system enabling machines to efficiently generate humanlike text, images, DNA sequences, and various other forms of data. The impact of this discovery was monumental, with the paper garnering over 80,000 citations from fellow researchers. The Transformer architecture laid the foundation for OpenAI’s ChatGPT, with the “T” in its name signifying its reliance on the Transformer model. Additionally, it powered image-generating tools like Midjourney, showcasing its versatility.

Interestingly, the researchers shared their groundbreaking discovery with the world, a common practice in the tech industry aimed at obtaining feedback, attracting talent, and fostering a community of supporters. However, what set this scenario apart was that Google, the organization where this breakthrough occurred, didn’t immediately leverage the technology they had unveiled. Instead, it remained relatively dormant for years as Google grappled with the challenge of effectively translating cutting-edge research into practical services.

Meanwhile, OpenAI seized the opportunity and harnessed Google’s own creation to introduce a substantial challenge to the search giant. Despite Google’s immense talent and culture of innovation, rival companies were the ones who capitalized on this significant discovery.

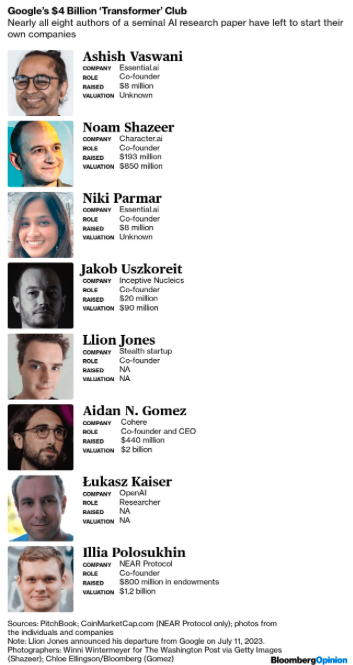

The team left google which was worth 4B$

The team which wrote Transformer Paper, most of which have left google to pursue their ideas or started their own company:

Polosukhin, on the verge of departing from Google, showed a greater inclination towards risk-taking compared to many others. He eventually initiated a blockchain venture after leaving. In contrast, Vaswani, destined to be the primary author of the Transformer paper, was enthusiastic about embarking on a substantial project. Alongside Niki Parmar, he ventured into establishing Essential.ai, a company focusing on enterprise software solutions. Uszkoreit, who generally leaned towards challenging the conventional norms in AI research, held the belief of “if it’s not broken, break it.” Following his tenure at Google, he co-founded a biotechnology firm named Inceptive Nucleics.

Read More: AI Can Prevent & Cure Cancer

Reasons why Google didn’t pursue the Transformer paper

Uszkoreit, known for his innovative approach, often roamed around Google’s offices, sketching diagrams of the novel architecture on whiteboards. Initially, his proposal to eliminate the “recurrent” aspect of the recurrent neural networks, a prevalent technique at that time, was met with skepticism and disbelief, particularly by his team members like Jones. However, as more researchers like Parmar, Aidan Gomez, and Lukasz Kaiser joined the endeavor, they began witnessing significant enhancements.

An illustrative example showcased the improvement. Consider the sentence, “The animal didn’t cross the street because it was too tired.” In this context, the word “it” refers to the animal. However, if the sentence were altered to “because it was too wide,” the reference of “it” becomes more ambiguous. Remarkably, the system they were developing managed to navigate this ambiguity effectively. Jones distinctly recalled witnessing the system work through these challenges and was astounded, remarking, “This is special.”

Furthermore, Uszkoreit, proficient in German, observed that this innovative technique could translate English into German with remarkable accuracy, surpassing the capabilities of Google Translate.

But why did google not implement it?

1. Scale:

A significant challenge arises from the scale of operations. Google boasts an extensive AI workforce of around 7,133 individuals & their overall employee base of approximately 140,000. In comparison, OpenAI has about 150 AI researchers among their total staff of roughly 375 as of 2023.

The vastness of Google’s operations posed a hurdle during the inception of the Transformer model. The large team size meant that scientists and engineers had to navigate through multiple layers of management to gain approval. Insights from several former Google scientists and engineers suggest that within Google Brain, a prominent AI division at Google, there was a lack of a clear strategic direction. This led many individuals to become preoccupied with career progression and their visibility in research publications.

2. Victim of its own Success

The threshold for transforming ideas into viable products was notably high within Google. Illia Polosukhin pointed out that for Google, an idea needed to potentially generate billions of dollars to garner serious attention. This stringent criterion hindered progress. This also necessitated constant iterations and a willingness to explore dead ends—something Google didn’t always accommodate.

A spokesperson for Google expressed pride in the company’s groundbreaking work on Transformers and the positive impact it has had on the AI ecosystem, fostering collaborations and opportunities for researchers to continue advancing their work both within and outside of Google.

In a peculiar way, Google found itself constrained by its own achievements. The company boasted eminent AI scientists such as Geoffrey Hinton and was already employing advanced AI techniques to process text as early as 2017. Many researchers adhered to the philosophy of “If it’s not broken, don’t fix it,” assuming that existing methods were sufficient given the company’s successful track record in the field.

Read the original Paper here

Conclusion:

The “Attention is All You Need” paper introduced the Transformer model, a groundbreaking concept that changed the way we process and generate text. While Google did not directly use this model for ChatGPT, the Transformer’s influence has been profound in the world of natural language processing, paving the way for the development of powerful language generation models like ChatGPT.

OpenAI took Google’s transformer idea and built a company around it by focusing on developing and deploying large language models that can be used for a variety of real-world applications. ChatGPT is one example of such a model. It is capable of generating human-quality text, translating languages, writing different kinds of creative content, and answering your questions in an informative way.

ChatGPT has become increasingly popular in recent months, and is now being used by millions of people around the world. This has made OpenAI a major player in the AI industry, and has put it in direct competition with Google.

References:

[1] Washington Post