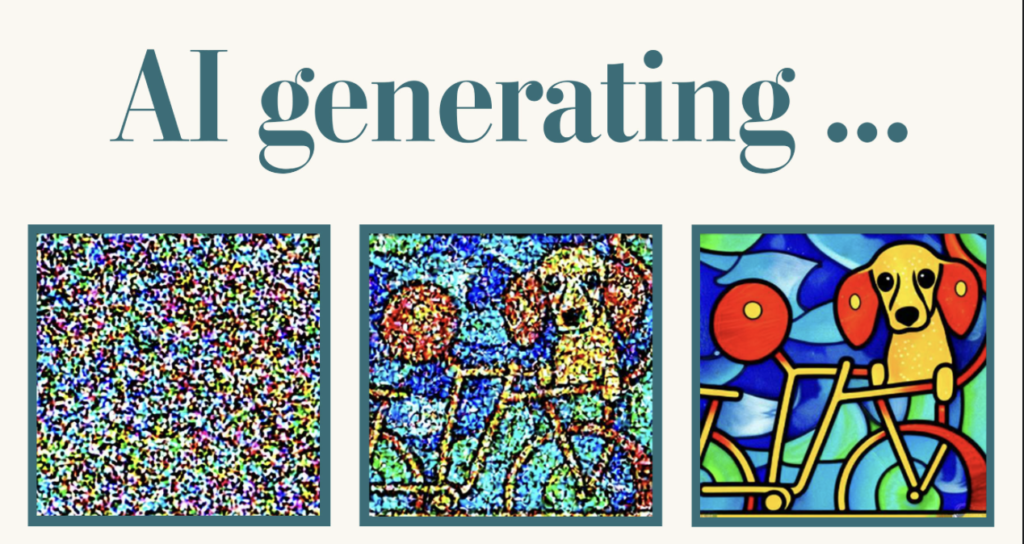

AI creating images from words is like having a clever robot that understands what you say and can turn it into a picture. First, it learns what words mean, just like we do. When you give it a description, it uses its special imagination skills, kind of like creating a rough sketch. This initial picture isn’t perfect, but the AI keeps looking at it and figuring out how to make it better to match your description. It tweaks and refines the picture, adding more details and adjusting things until it’s just right. And voila! You get a picture that’s exactly what you described. It’s like having an AI artist that listens to your words and paints a picture for you!

In recent years, the field of artificial intelligence has witnessed astounding advancements in generating images from text descriptions, thanks to models like DALL-E. DALL-E, developed by OpenAI, is a groundbreaking neural network that uses a diffusion model to create images based on textual inputs. This fusion of AI and creativity has the potential to revolutionize how we interact with and create visual content.

Image Credits: WSJ

Understanding DALL-E: The Fusion of Text and Images

DALL-E is a neural network trained on a vast dataset of text-image pairs, encompassing a diverse range of images, from realistic photos to artistic illustrations. The goal of DALL-E is to associate text descriptions with specific visual features such as objects, colors, and textures and generate images that match these descriptions.

To achieve this, DALL-E utilizes a diffusion model, a technique that starts with a random noise image and iteratively refines it until it aligns with the provided text description. Let’s delve into a detailed explanation of how this works.

Read More: How a Google Research Paper gave birth to its Rival, ChatGPT

The Technical Journey: Transforming Text to Pixels

Step 1: Tokenization

The AI first learns to understand words. Just like you know what an “apple” is and what “red” and “white” mean, the AI learns these too, but in a special computer way.

The text description is broken down into smaller units called tokens, such as words or subwords. Each token represents a specific element of the description.

Text Description: “A red apple on a white background”

Tokens: [“A”, “red”, “apple”, “on”, “a”, “white”, “background”]

2. Transformer Encoding:

You tell the AI a description, like “a red apple on a white background.” Now, the AI starts to imagine what this would look like. But instead of using its eyes like we do, it uses a special imagination technique with numbers and patterns.

Hidden State Representation: Think of this like a code that represents the important parts of the description. It’s like summarizing what the words “red apple on white background” mean in a way the computer can understand.

These tokens are passed through a transformer encoder, a neural network that converts the tokens into a hidden state representation. This representation encodes the semantic meaning and relationships among the tokens.

Read More: Explained: What the hell is ChatGPT

3. Noise Image Initialization:

Using the messy picture and the summarized code that represents “red apple on white background.”

DALL-E begins with a random noise image, acting as the starting canvas for image generation.

The AI takes this imagination and starts drawing a picture. It doesn’t get it right in one go, so it makes a rough, messy picture first. Imagine it’s like a draft.

4. Diffusion Model:

Now, the AI looks at the messy picture and thinks, “How can I make this better to match the description?” It keeps making changes, little by little, to get closer to what you described.

The diffusion model comes into play, taking two inputs: the noise image and the hidden state representation of the text. The model then predicts the next pixel values for the noise image based on the provided text’s representation.

Read More: 10 Free Online AI Courses From Top Companies

5. U-Net for Predictions:

Making a first attempt to create a picture of a red apple on a white background based on the messy picture and the summary code.

Within the diffusion model, a U-Net architecture is employed to predict the next pixel values. U-Net is a powerful neural network often used for image segmentation and translation tasks.

The diffusion model leverages the U-Net architecture to predict pixel values based on the combined information of the initial noise image and the encoded meaning of the text. This initial prediction starts shaping the image according to the encoded semantics.

6. Refining the Image:

Iterative Refinement: Making the picture better step by step. The summary code guides us on how to improve the picture to match “red apple on white background” better.

The diffusion model iteratively refines the image by predicting and adjusting pixel values based on the relationships and semantics captured in the hidden state representation. The encoded essence of “red apple on white background” guides the image towards a more accurate representation.

7. Convergence:

Multiple refinement steps occur, with the hidden state representation continually influencing the image’s refinement process, ensuring it aligns with the semantic essence of “A red apple on a white background.”

Convergence Achieved: After several tries, the picture starts looking very much like a red apple on a white background.

8. Image Completion:

Final Result: We end up with a picture that represents “A Red Apple on a White Background” as accurately as possible based on the initial messy picture and the summary code.

The resulting image is a visual representation that effectively captures the semantic essence of “A red apple on a white background” as encoded in the hidden state representation.

In conclusion, DALL-E stands at the intersection of artificial intelligence and creativity, offering a glimpse into a future where machines can translate words into vibrant, visual compositions. As this technology evolves, we anticipate even more astonishing applications and advancements in the field of image generation.