Introduction

In the ever-evolving landscape of artificial intelligence (AI), NVIDIA is renowned for its cutting-edge hardware and software solutions.

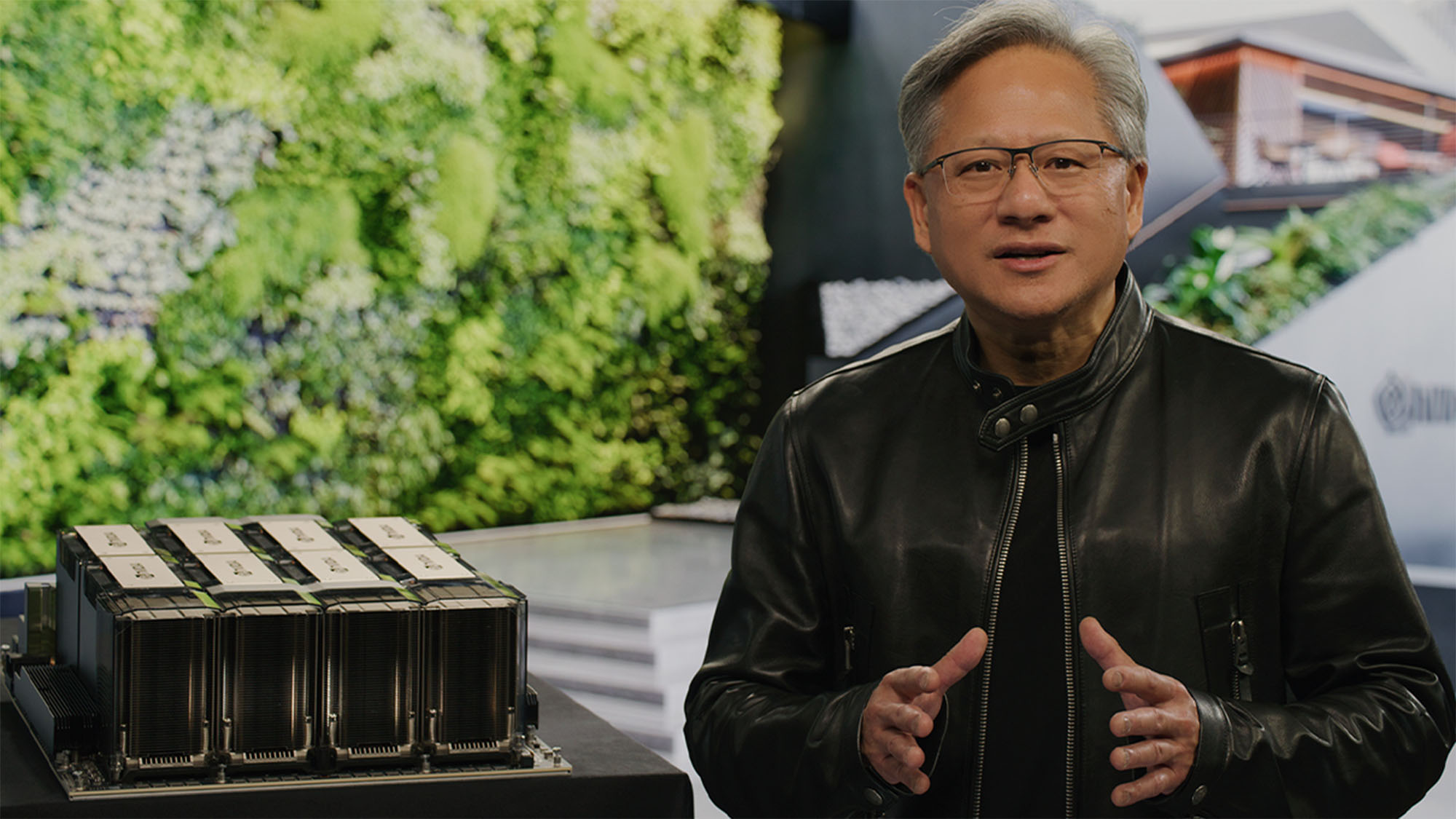

Recently, NVIDIA unveiled its latest secret weapon: TensorRT-LLM, a software library designed to make large language model (LLM) inference faster and more efficient on NVIDIA’s H100 GPUs.

This game-changing software is poised to keep developers firmly within the NVIDIA ecosystem and to help the company maintain its competitive edge against tech giants developing their own AI chips. Let’s find out how.

Understanding the Challenge of Nvidia

LLM inference, which involves running already-trained models like GPT and Llama to generate responses to user prompts, is a resource-intensive process that primarily runs on powerful GPUs like NVIDIA’s H100. It can be both costly and time-consuming, presenting a significant challenge for AI developers.

TensorRT-LLM: A Game-Changing Solution from Nvidia for AI monopoly

TensorRT-LLM is poised to address this challenge by making LLM inference on NVIDIA H100 GPUs more cost-effective and significantly faster. But just how fast does it make the process? Let’s delve into the key aspects:

Eightfold Speed Boost: By NVIDIA’s figures, H100 GPUs were already four times faster than their predecessors, the A100 GPUs, for LLM inference. With the introduction of TensorRT-LLM, this speed is doubled, making the H100 eight times faster than the A100.

Boost for Meta’s Llama 2: The gains are not limited to a single model. For inference on Meta’s Llama 2, a popular choice for AI applications, NVIDIA reports that an H100 running TensorRT-LLM is 4.6 times faster than an A100.

So, how does TensorRT-LLM achieve this remarkable performance boost? It relies on three innovative techniques:

Tensor Parallelism: The model’s weight matrices are split across multiple GPUs, and if needed across multiple servers, so that each device computes its share of every layer in parallel. This lets models that are too large or too slow for a single GPU scale efficiently across many.
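To make the idea concrete, here is a minimal sketch of one common form of tensor parallelism, splitting a weight matrix by columns. It is plain Python rather than TensorRT-LLM code; the function names are hypothetical, and an ordinary list stands in for the GPUs.

```python
def matmul(x, w):
    """Multiply a vector x (length d_in) by a matrix w (d_in rows, d_out columns)."""
    d_out = len(w[0])
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(d_out)]


def split_columns(w, num_devices):
    """Split w column-wise into num_devices shards of equal width."""
    d_out = len(w[0])
    assert d_out % num_devices == 0, "columns must divide evenly across devices"
    width = d_out // num_devices
    return [[row[k * width:(k + 1) * width] for row in w] for k in range(num_devices)]


def tensor_parallel_matmul(x, w, num_devices):
    """Compute x @ w shard by shard, then concatenate the partial outputs."""
    shards = split_columns(w, num_devices)
    # Assumption: on real hardware each shard would sit on its own GPU and
    # these products would run at the same time.
    partials = [matmul(x, shard) for shard in shards]
    # Gathering the pieces in shard order rebuilds the full output vector.
    return [value for part in partials for value in part]


x = [1.0, 2.0, 3.0]
w = [
    [1.0, 0.0, 2.0, 1.0],
    [0.0, 1.0, 1.0, 3.0],
    [2.0, 1.0, 0.0, 1.0],
]
full = matmul(x, w)
sharded = tensor_parallel_matmul(x, w, num_devices=2)
print(full == sharded)  # the sharded result matches the single-device result
```

Each device only ever holds its own slice of the weights, which is why the approach helps when a model does not fit in one GPU’s memory.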

In-Flight Batching: This technique allows the system to start processing new inference requests while others are still in progress. As soon as one request in a batch finishes, its slot goes to a waiting request instead of sitting idle until the whole batch is done, which improves GPU utilization and reduces latency.
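The difference is easiest to see in a toy scheduler. The sketch below is a simplified simulation, not TensorRT-LLM’s scheduler: it assumes every active request produces one token per step, and the function names and request lengths are made up for illustration.

```python
from collections import deque


def static_batching_steps(lengths, batch_size):
    """Steps needed when each batch must finish entirely before the next starts."""
    steps = 0
    for start in range(0, len(lengths), batch_size):
        # The whole batch waits for its longest request.
        steps += max(lengths[start:start + batch_size])
    return steps


def in_flight_batching_steps(lengths, batch_size):
    """Steps needed when a finished request's slot is refilled immediately."""
    waiting = deque(lengths)
    active = []  # tokens still to generate for each running request
    steps = 0
    while waiting or active:
        # Admit waiting requests into any free slots before the next step.
        while waiting and len(active) < batch_size:
            active.append(waiting.popleft())
        # One step generates one token for every active request.
        steps += 1
        active = [remaining - 1 for remaining in active if remaining - 1 > 0]
    return steps


# Output lengths (in tokens) of six hypothetical requests.
lengths = [8, 2, 2, 2, 8, 2]
static_steps = static_batching_steps(lengths, batch_size=2)
in_flight_steps = in_flight_batching_steps(lengths, batch_size=2)
print(static_steps, in_flight_steps)  # 18 14
```

With static batching, short requests paired with a long one leave their slot idle until the long one finishes; refilling slots as they free up is what recovers those steps.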

Quantization: TensorRT-LLM reduces the memory footprint of LLMs by storing model weights and activations at lower numerical precision, for example as 8-bit values instead of 16-bit ones, with little loss of accuracy. This means developers can work with larger models using the same hardware resources, unlocking new possibilities in AI research and applications.
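As an illustration of the principle, the following sketch applies simple symmetric 8-bit integer quantization to a handful of weights. This is one basic scheme chosen for clarity and is not claimed to be the method TensorRT-LLM uses; the function names and weight values are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # The scale is the float value represented by one integer step.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.01, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding to the nearest step means no weight moves by more than half a step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q)
print(max_error <= scale / 2 + 1e-12)
```

An 8-bit integer occupies one byte, compared with two bytes for a 16-bit float, so weights stored this way take roughly half the memory at the cost of a small rounding error.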

How can this lead to an AI monopoly for Nvidia?

Nvidia is a leading provider of artificial intelligence (AI) hardware and software. The company’s tensor software is a key part of its AI platform, and it could give Nvidia a monopoly in the AI hardware market.

Tensor software is a suite of tools that helps developers build and deploy AI applications. It includes libraries, compilers, and runtimes that are optimized for AI workloads. Tensor software is also tightly integrated with Nvidia’s hardware, which gives it a significant performance advantage over other AI software.

As a result, Nvidia’s tensor software is the preferred choice for many AI developers, and it is used by leading AI companies such as Google, Microsoft, and Amazon.

The dominance of Nvidia’s tensor software could give the company a monopoly in the AI hardware market. This is because AI developers are more likely to choose hardware that is compatible with the software they are using. If Nvidia continues to improve its tensor software, it could become the de facto standard for AI development, which would make it difficult for other hardware vendors to compete.

The implications of Nvidia’s dominance in the AI hardware market are far-reaching. It could stifle innovation in the AI industry, as other vendors would be less likely to invest in developing new AI hardware if they know that Nvidia will have a significant advantage. It could also lead to higher prices for AI hardware, as Nvidia would be able to charge a premium for its products.

Only time will tell whether Nvidia’s tensor software will give the company an AI hardware monopoly. However, the potential implications of such a monopoly are worrying for the AI industry.

Maintaining NVIDIA’s Dominance

TensorRT-LLM represents a pivotal component of NVIDIA’s defense strategy against tech giants venturing into the AI hardware market. By enhancing the performance and efficiency of LLM inference specifically on NVIDIA H100 GPUs, this software further cements NVIDIA’s position in the AI ecosystem. But, one might argue, doesn’t this strategy create a vendor lock-in effect, tying developers to NVIDIA chips and software?

While it’s true that TensorRT-LLM is tailored for NVIDIA’s H100 GPUs, it’s important to note that NVIDIA has consistently pushed the boundaries of AI technology. By offering state-of-the-art solutions and driving innovation in both hardware and software, NVIDIA creates a compelling ecosystem that developers choose to be part of, not because they are forced to but because it provides them with unmatched capabilities.

Conclusion

NVIDIA’s TensorRT-LLM is a testament to the company’s commitment to advancing AI technology. By making LLM inference faster and more efficient, it strengthens NVIDIA’s position in the AI market and offers developers the tools they need to push the boundaries of AI research and application development. While it may encourage loyalty to NVIDIA’s hardware and software, it does so by providing unparalleled performance and capabilities, making it a choice rather than a constraint for developers in the dynamic world of AI. As AI continues to reshape industries and drive innovation, NVIDIA remains at the forefront, powering the future of intelligent computing.