Introduction:

In computing, parallel processing means breaking a task into smaller parts that are processed simultaneously. Think of a team of chefs collaborating on a meal: each handles one dish, so the whole meal is ready sooner.

Parallel processing is akin to a well-coordinated orchestra, where different musicians play their instruments at the same time, producing a harmonious composition. Similarly, in the computing realm, it’s about multiple processors tackling various aspects of a task simultaneously, achieving a faster and more efficient outcome. Just as a team can achieve more than an individual, parallel processing leverages collective effort to enhance computational performance.

Parallel vs. Normal Processing

Let’s break down the difference between parallel and normal (or sequential) processing using a simple analogy: building a Lego castle.

Normal Processing:

Imagine you’re building a Lego castle all by yourself. You start by placing one brick at a time, carefully organizing and aligning each piece before moving on to the next. It’s a systematic, step-by-step approach.

Parallel Processing:

Now, let’s say you have a team of friends, and each friend is responsible for a specific section of the castle. One friend handles the walls, another the towers, and so on. Each friend works simultaneously, contributing to the construction of the castle. This is akin to parallel processing – tasks are divided, and multiple individuals (or components) work concurrently to achieve a common goal.
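The Lego analogy can be sketched in a few lines of Python. This is only an illustration, not how any particular chip works: the section names, the `build_section` helper, and the 0.05-second delay are all made up for the example, and the delay simply stands in for real work. It uses the standard library's `ThreadPoolExecutor` to give each section its own worker.

```python
from concurrent.futures import ThreadPoolExecutor
import time

# Hypothetical castle sections, one per friend in the analogy.
SECTIONS = ["walls", "towers", "gate", "courtyard"]

def build_section(name: str) -> str:
    """Pretend to build one section; the sleep stands in for real work."""
    time.sleep(0.05)
    return f"{name} done"

def build_sequential(sections):
    # One builder handles every section, one after another.
    return [build_section(s) for s in sections]

def build_parallel(sections):
    # One worker per section; the sections are built at the same time.
    with ThreadPoolExecutor(max_workers=len(sections)) as pool:
        # map() returns results in the same order as the input list.
        return list(pool.map(build_section, sections))

if __name__ == "__main__":
    for builder in (build_sequential, build_parallel):
        start = time.perf_counter()
        result = builder(SECTIONS)
        elapsed = time.perf_counter() - start
        print(f"{builder.__name__}: {result} in {elapsed:.2f}s")
```

Both functions produce the same finished castle; the parallel version should simply finish sooner because the waiting overlaps. Threads are used here for simplicity. For work that is genuinely compute-heavy in Python, a process pool would be the more usual choice.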

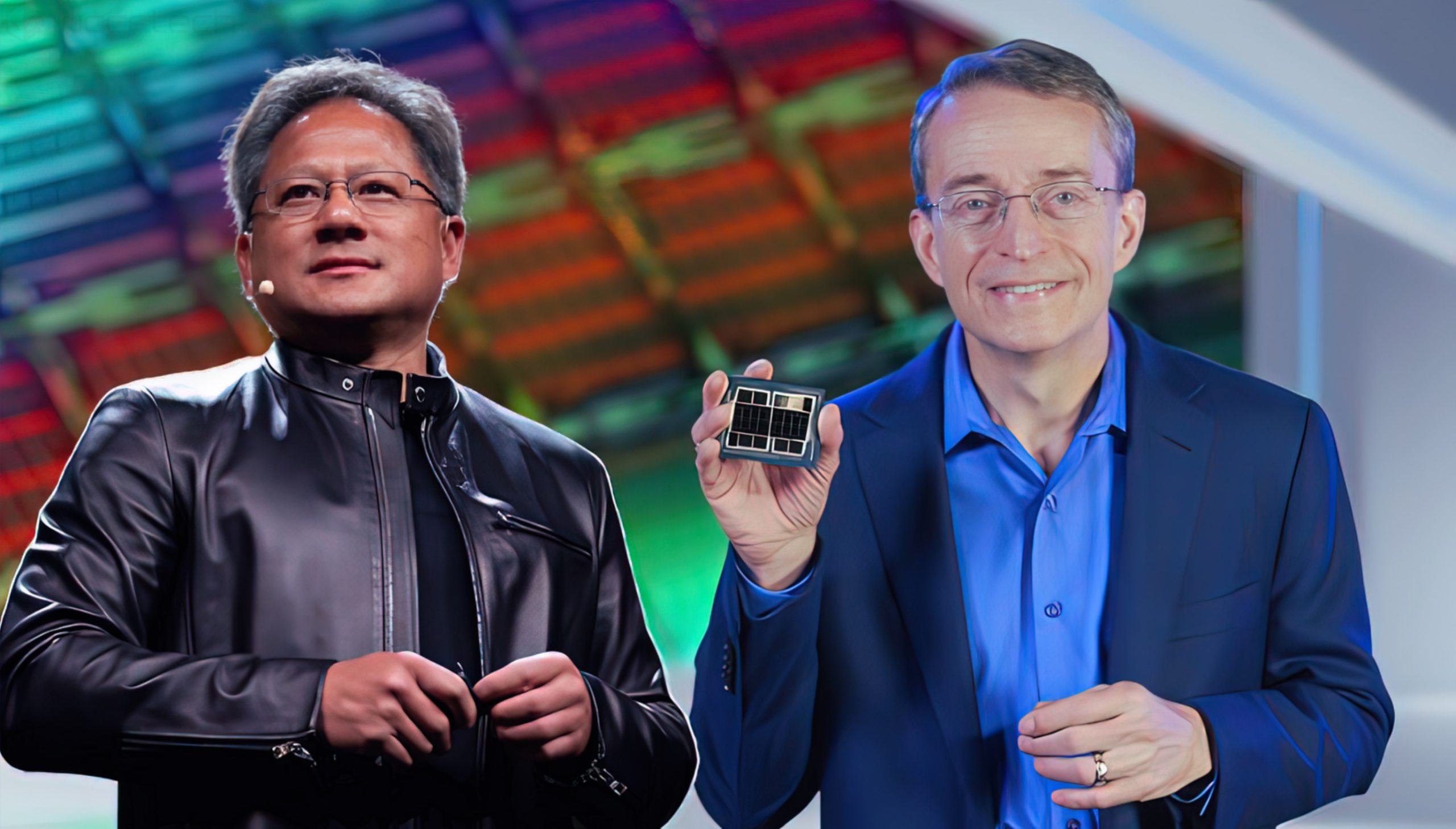

The Nvidia vs. Intel Showdown

Nvidia and Intel, two tech giants in the computing industry, embody the different approaches to processing.

Nvidia: The Parallel Powerhouse

Nvidia excels in parallel processing, especially in tasks like rendering graphics, AI (Artificial Intelligence), and large calculations that can be split into many independent pieces. Imagine building intricate Lego structures with several skilled builders working on different sections simultaneously – that’s Nvidia’s strength.

Intel: The Sequential Maestro

Intel, on the other hand, is like a master builder focusing on constructing the Lego castle methodically, brick by brick. They’re experts in traditional, sequential processing. Intel processors are exceptional at handling a single, intensive task at a time, making them ideal for tasks that require a strong, singular focus, such as executing a computer program step by step.

The Tug of War: Nvidia vs. Intel

CPU (Central Processing Unit) chips function as the “brain” of computers and data centers, handling a wide range of tasks like browsing the web or running software like Microsoft Excel. They are versatile and can perform many kinds of calculations, but each core works through its instructions largely one after another, and a CPU has far fewer cores than a GPU.

Nvidia’s GPUs (Graphics Processing Units) have shown exceptional prowess in parallel processing, making them highly sought after for applications like gaming, scientific simulations, AI, and more. Their ability to handle numerous tasks concurrently allows for faster and smoother performance, akin to our team of Lego builders constructing the castle in unison.

Intel, on the other hand, has historically dominated the CPU market. Its processors excel at handling intricate calculations step by step, similar to a meticulous Lego builder constructing a castle brick by brick. They may not manage as many tasks at once as Nvidia’s GPUs, but their strength lies in methodical and focused processing.

AI workloads often involve repetitive calculations using varying data, necessitating customized chips to make AI economically feasible. Major cloud computing companies like Amazon and Microsoft, responsible for hosting algorithms for many businesses on their data centers, invest billions annually in procuring chips and servers. Additionally, they allocate substantial funds for powering these data centers. Optimizing chips for efficiency is vital for these companies as they strive to offer competitive cloud services. AI-optimized chips outshine general-purpose Intel CPUs by operating faster, occupying less data center space, and consuming less power.
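To make "repetitive calculations using varying data" concrete, here is a rough Python sketch. It assumes a toy calculation (a weighted sum, a basic building block of many AI models) and applies the same function to every sample in a batch. The names `weighted_sum` and `score_batch`, the weights, and the sample values are all invented for illustration.

```python
from concurrent.futures import ThreadPoolExecutor

def weighted_sum(inputs, weights):
    """The repeated calculation: multiply matching pairs and add them up."""
    return sum(x * w for x, w in zip(inputs, weights))

def score_batch(batch, weights, workers=4):
    # Every sample is independent of the others, so the same
    # calculation can be handed to several workers at once.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda sample: weighted_sum(sample, weights), batch))

if __name__ == "__main__":
    weights = [0.5, -1.0, 2.0]                   # made-up values
    batch = [[1, 2, 3], [4, 5, 6], [0, 0, 1]]    # three made-up samples
    print(score_batch(batch, weights))           # prints [4.5, 9.0, 2.0]
```

The point is the shape of the work, not the speed of this script: the calculation never changes, only the data does, and no sample depends on another. That independence is what lets a GPU or AI-optimized chip run a very large number of these calculations side by side in hardware, whereas Python threads here mostly just illustrate the pattern.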

Using general-purpose CPUs for AI is possible, but it’s costly due to AI’s substantial computational needs. Training a single AI model, such as one that distinguishes cats from dogs, can cost millions once chip usage and power consumption are factored in. Teaching the algorithm to recognize more animals means more computation, adding to the expense.

Conclusion

Parallel and normal processing are distinct but valuable approaches in computing. Nvidia and Intel embody these approaches, vying to keep up with evolving digital needs. Like the lone builder and the team of friends working on the Lego castle, each has its role in the computing world’s ongoing pursuit of efficiency and power.