Introduction

The AI boom has percolated into smartphones. Smartphone chipmakers now compete with each other on how well their chips can process AI. NPUs, or Neural Processing Units, go by different names in different SoCs: Apple calls its NPU the Neural Engine, Qualcomm calls it the Hexagon NPU, and MediaTek calls it the APU (AI Processing Unit). While they are all meant to process AI workloads, the question is: why do smartphones need a dedicated AI processing unit?

Why do smartphones need dedicated AI processing units (e.g., NPUs)?

Today, most smartphone applications leverage AI to provide new features. Whether it is facial recognition in Apple’s Face ID, object detection with Google Lens, or speech recognition in voice assistants like Google Assistant, AI computation is everywhere.

Thanks to the NPU, a smartphone’s camera can recognize the objects, environment, and people in the frame and focus accordingly. It can automatically switch on the food filter mode for food photography or even remove unwanted subjects from the picture.

Google Photos, for example, offers a feature that removes objects from an image. This is an example of on-device AI.

Running all of these workloads on the CPU would tie it up and drain the battery, so phones need a separate processing unit that can handle AI tasks without burdening the CPU.

Efficiency and Privacy

Smartphones increasingly rely on artificial intelligence (AI) for a variety of tasks, from facial recognition in photos to voice assistants like Siri and Google Assistant. There are two main reasons phones include dedicated AI processing units such as NPUs:

Efficiency: Running AI tasks on the main CPU or graphics processor (GPU) can be slow and can drain the battery. NPUs are designed specifically for the math at the heart of AI, chiefly huge numbers of multiply-accumulate operations, often in low-precision formats such as 8-bit integers (INT8), so they can handle these tasks much faster and with less power.

Privacy: Some AI features, like facial recognition, work best when they can process data on the device itself, rather than sending it to the cloud. This keeps your data private and allows features to work even without an internet connection.
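To make the efficiency point concrete, here is a minimal Python sketch of low-precision integer arithmetic, assuming symmetric per-tensor INT8 quantization (one common scheme; real NPUs and their toolchains differ in the details). Floats are mapped to small integers with a shared scale, the dot product runs entirely in integer multiply-accumulates, and the result is scaled back to a float. The function names and the input values are hypothetical, chosen only for illustration.

```python
# Minimal sketch (assumption): symmetric per-tensor INT8 quantization, one common
# low-precision scheme. Real NPUs and their toolchains differ in the details.

def choose_scale(values):
    """Pick a scale so the largest magnitude maps to the int8 code 127."""
    peak = max(abs(v) for v in values)
    return peak / 127 if peak else 1.0

def quantize(values, scale):
    """Map floats to int8 codes in [-127, 127] using one shared scale."""
    return [max(-127, min(127, round(v / scale))) for v in values]

def int8_dot(xq, wq):
    """Integer multiply-accumulate; hardware would keep the sum in a wider (e.g. 32-bit) accumulator."""
    acc = 0
    for a, b in zip(xq, wq):
        acc += a * b
    return acc

x = [0.5, -1.2, 3.0, 0.8]   # example activations (made-up values)
w = [0.25, 0.1, 0.4, 1.5]   # example weights (made-up values)

x_scale, w_scale = choose_scale(x), choose_scale(w)
acc = int8_dot(quantize(x, x_scale), quantize(w, w_scale))
approx = acc * x_scale * w_scale           # dequantize the integer result
exact = sum(a * b for a, b in zip(x, w))   # float reference
print(f"exact={exact:.4f}  int8 approx={approx:.4f}")
```

The integer result lands within about one percent of the float reference here, while each multiply works on 8-bit operands, which is far cheaper in silicon area and energy than 32-bit floating point.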

Overall, dedicated AI processing units allow smartphones to run AI features faster, for longer, and more securely. This paves the way for even more advanced AI applications in the future.

How do NPUs work?

An NPU’s specialty is crunching numbers for machine learning models.

An NPU is typically built around large arrays of multiply-accumulate (MAC) units, often alongside vector and scalar units and on-chip memory. A neural network’s layers are mapped onto this hardware: input data enters the first layer, each layer’s output becomes the next layer’s input, and the final layer produces the desired output.
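The layer-by-layer flow can be sketched in a few lines of Python. This is a minimal illustration, assuming a tiny fully connected network with made-up weights: every arithmetic step is written as a multiply-accumulate (MAC), the operation NPU compute arrays are built to perform in bulk, and each layer's output is fed straight into the next layer. The names `dense`, `relu`, and `forward` are hypothetical helpers, not any vendor's API.

```python
# Minimal sketch (assumption): a tiny two-layer network evaluated layer by layer,
# with each arithmetic step written as a multiply-accumulate (MAC).
# The weights are made-up illustration values.

def dense(inputs, weights, biases):
    """One fully connected layer: each output is a bias plus a chain of MACs."""
    outputs = []
    for row, bias in zip(weights, biases):
        acc = bias
        for x, w in zip(inputs, row):
            acc += x * w  # one MAC
        outputs.append(acc)
    return outputs

def relu(values):
    """Zero out negative values (a common activation function)."""
    return [max(0.0, v) for v in values]

def forward(x, layers):
    """Feed data in at one end; each layer's output becomes the next layer's input."""
    for weights, biases in layers:
        x = relu(dense(x, weights, biases))
    return x

# Hypothetical 3 -> 2 -> 1 network: (weight matrix, bias vector) per layer.
layers = [
    ([[0.2, -0.5, 1.0], [0.7, 0.1, -0.3]], [0.0, 0.1]),
    ([[1.5, -2.0]], [0.05]),
]
print(forward([1.0, 2.0, 3.0], layers))
```

An NPU does not run these loops one MAC at a time; it executes thousands of the inner multiply-accumulates in parallel, which is where its speed comes from.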

The NPU reads its input data, such as camera frames, photos, or audio, from the phone’s memory and processes it using its own instruction set, which is optimized for operations common in image recognition, voice analysis, and similar tasks. It also uses data compression and on-chip caching to keep frequently used data close to its compute units and maximize speed. In a nutshell, the NPU’s parallel, AI- and multimedia-focused design lets your phone apply filters to photos, recognize faces, and understand speech very quickly, without needing an internet connection.
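One widely used way accelerators keep data close to their compute units is tiling: a large matrix multiplication is split into blocks small enough to fit in a local buffer, and each block is reused for many MACs before the next one is fetched. The Python sketch below illustrates the idea under stated assumptions: `TILE` is a hypothetical block size standing in for "what fits in on-chip memory", and real NPUs choose their tiling in hardware and compiler toolchains rather than like this.

```python
# Minimal sketch (assumption): tiled matrix multiplication. TILE is a hypothetical
# size standing in for "what fits in the on-chip buffer"; real NPUs decide tiling
# in their compilers and hardware.

TILE = 2

def matmul_tiled(a, b, tile=TILE):
    """Compute a @ b block by block so each block's data could stay in local memory."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for k0 in range(0, k, tile):
                # In hardware, the current tiles of a and b would be loaded into
                # the local buffer once and reused for every MAC below.
                for i in range(i0, min(i0 + tile, n)):
                    for j in range(j0, min(j0 + tile, m)):
                        acc = c[i][j]
                        for kk in range(k0, min(k0 + tile, k)):
                            acc += a[i][kk] * b[kk][j]
                        c[i][j] = acc
    return c

def matmul_naive(a, b):
    """Reference implementation without tiling."""
    return [[sum(a[i][kk] * b[kk][j] for kk in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

a = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
b = [[1, 0, 2], [0, 1, 0], [3, 0, 1]]
print(matmul_tiled(a, b))
```

Tiling changes only the order of the arithmetic, not the result; its payoff is fewer trips to slow, power-hungry external memory.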

Benefits that an NPU brings

While the main processor could technically run these workloads too, doing so would consume a lot of power and tie up resources needed by other apps. The NPU, by contrast, handles these complex calculations with highly efficient circuitry backed by dedicated memory. It delivers fast results for AI apps while freeing the main processor to run everything else smoothly, and by offloading the AI-related heavy lifting, it also saves battery life. Because of these advantages, NPUs are becoming increasingly common, particularly in smartphones.
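The battery argument comes down to simple arithmetic: the energy a workload consumes is roughly its operation count divided by the hardware's efficiency in operations per joule. Since 1 TOPS/W means 10^12 operations per second per watt, it equals 10^12 operations per joule. The sketch below uses hypothetical numbers chosen only for illustration; they are not measurements of any real CPU or NPU.

```python
# Back-of-the-envelope sketch: energy = operations / efficiency.
# All figures below are HYPOTHETICAL placeholders, not measurements of real chips.

def energy_joules(ops, tops_per_watt):
    """1 TOPS/W = 1e12 operations per second per watt = 1e12 operations per joule."""
    return ops / (tops_per_watt * 1e12)

OPS_PER_INFERENCE = 5e9  # hypothetical model cost: 5 billion operations

for unit, efficiency in [("general-purpose core (hypothetical)", 0.5),
                         ("NPU (hypothetical)", 5.0)]:
    millijoules = energy_joules(OPS_PER_INFERENCE, efficiency) * 1e3
    print(f"{unit}: {millijoules:.1f} mJ per inference")
```

Whatever the real figures are for a given chip, the energy per inference scales inversely with efficiency, and for features that run continuously, such as camera scene detection, that difference accumulates over every frame.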

How exactly is an NPU different from a GPU and a CPU?

There are significant distinctions among the three, but overall, the NPU is closer to the GPU because both are designed for parallel processing. The difference is that NPUs are more specialized, focusing solely on neural network tasks, whereas GPUs are more versatile and can handle a wider range of parallel computing tasks.

In terms of core count, the exact number varies greatly by model and manufacturer. The general trend, however, is that CPUs have fewer but more powerful cores; GPUs have many cores (often thousands) optimized for parallel tasks like video processing and gaming; and NPUs have compute units specialized for AI operations such as matrix multiplication.

NPUs in the market’s leading SoCs

Since their debut a few years ago, NPUs have improved rapidly and have been integrated into smartphones by nearly all major vendors.

Apple led the charge in 2017 by introducing the Neural Engine in its A11 Bionic chip, which powered the iPhone 8, iPhone 8 Plus, and iPhone X. The Neural Engine is purpose-built for tasks like Face ID, video stabilization, photo correction, and other AI-related functions.

Huawei similarly unveiled an NPU in its Kirin 970 system-on-chip (SoC) in 2017.

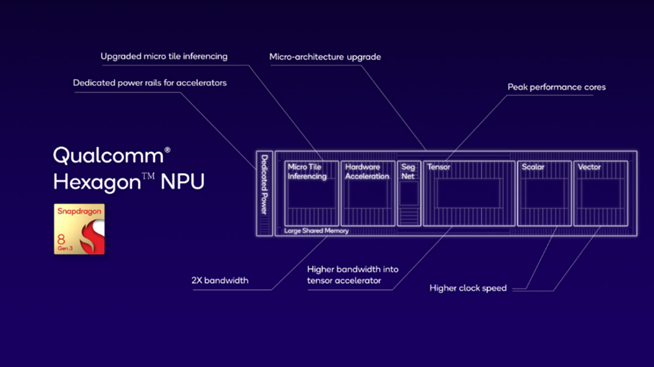

Qualcomm, the dominant Android mobile platform vendor, also rolled out its AI Engine in its premium Snapdragon 800-series chipsets. More recently, Qualcomm has focused on on-device generative AI, powered by the Hexagon NPU in the Snapdragon 8 Gen 3.

The Qualcomm Sensing Hub, a low-power AI processor that works alongside the Hexagon NPU, runs quietly in the background, securely processing data from the device’s sensors. This contextual data feeds into an on-device large language model (LLM) assistant, which uses it to offer habit-based suggestions and enhance the user experience.

MediaTek and Samsung have also followed suit by baking NPUs into their latest offerings.

The future of NPUs

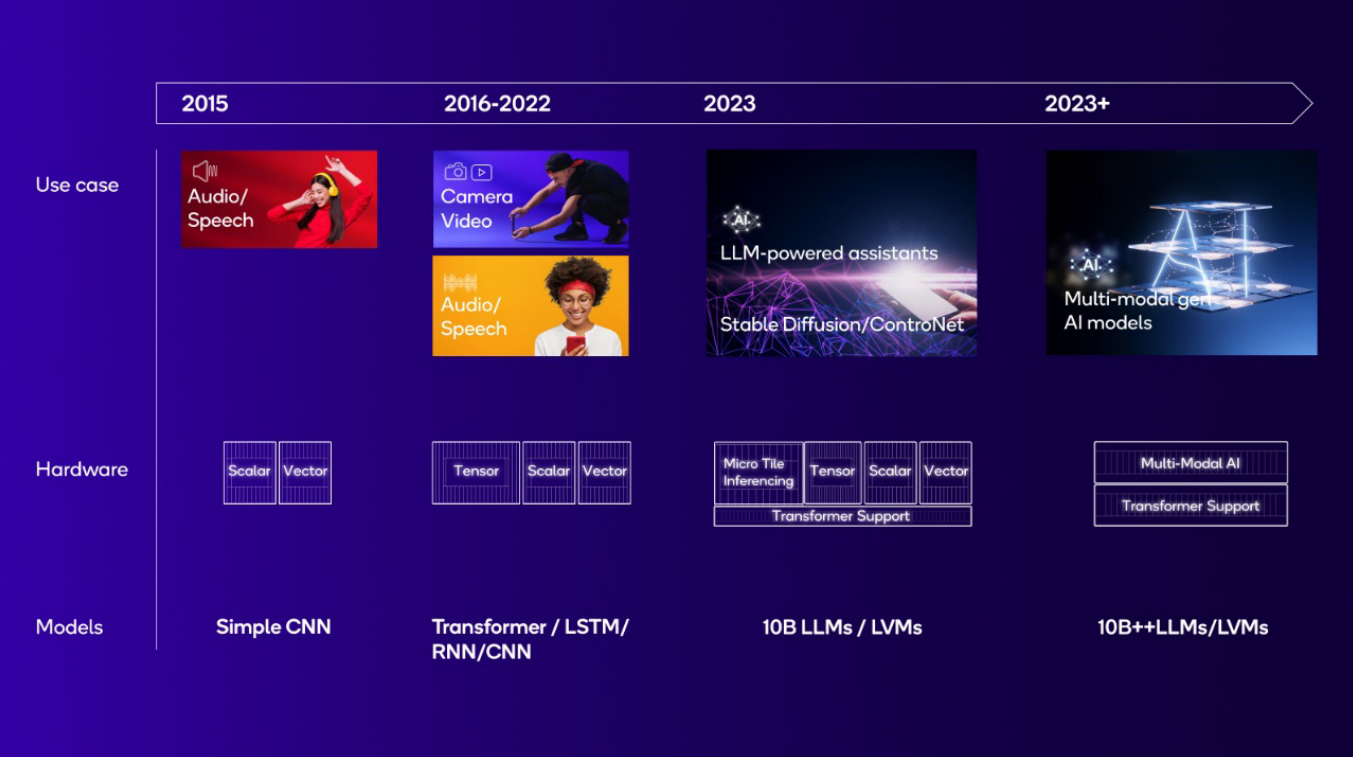

Early NPUs in 2015 were designed for audio and speech AI use cases based on simple convolutional neural networks (CNNs), which required primarily scalar and vector math. Starting in 2016, photography and video AI use cases became popular, bringing new and more complex models such as recurrent neural networks (RNNs), long short-term memory (LSTM) networks, higher-dimensional CNNs, and, later, transformers. These workloads required significant tensor math, so NPUs added tensor accelerators and convolution acceleration for much more efficient processing.
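One reason tensor accelerators help with CNNs is that a convolution can be recast as a single matrix multiplication. A standard technique for this is im2col, which copies every input patch into a row of a matrix. The Python sketch below shows it under simplifying assumptions (single input channel, stride 1, no padding, and "convolution" in the deep-learning sense, i.e. no kernel flip); it illustrates the general idea and is not a description of how any particular NPU implements convolution.

```python
# Minimal sketch (assumption): im2col, which rewrites a 2D convolution as one matrix
# multiplication. Single input channel, stride 1, no padding; as in most deep-learning
# frameworks, "convolution" here means cross-correlation (the kernel is not flipped).

def conv2d_direct(image, kernel):
    """Reference: slide the kernel over the image and sum elementwise products."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + u][j + v] * kernel[u][v]
                 for u in range(kh) for v in range(kw))
             for j in range(ow)]
            for i in range(oh)]

def im2col(image, kh, kw):
    """Each output position becomes one row holding its flattened input patch."""
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[image[i + u][j + v] for u in range(kh) for v in range(kw)]
            for i in range(oh) for j in range(ow)]

def conv2d_as_matmul(image, kernels):
    """Apply several same-sized kernels with one matrix multiplication."""
    kh, kw = len(kernels[0]), len(kernels[0][0])
    ow = len(image[0]) - kw + 1
    patches = im2col(image, kh, kw)                              # (oh*ow) x (kh*kw)
    weights = [[w for row in k for w in row] for k in kernels]   # C_out x (kh*kw)
    # Matrix multiplication: patches @ weights^T -> (oh*ow) x C_out
    product = [[sum(p * w for p, w in zip(patch, wrow)) for wrow in weights]
               for patch in patches]
    # Reshape each output column back into an oh x ow feature map.
    maps = []
    for c in range(len(kernels)):
        col = [row[c] for row in product]
        maps.append([col[r:r + ow] for r in range(0, len(col), ow)])
    return maps

image = [[1, 2, 3, 0],
         [4, 5, 6, 1],
         [7, 8, 9, 2]]
kernels = [[[1, 0], [0, -1]],
           [[0, 1], [1, 0]]]
print(conv2d_as_matmul(image, kernels))
```

The trade-off is memory: im2col duplicates overlapping pixels into many rows, but in exchange the whole layer becomes one dense matrix multiplication, the operation tensor hardware runs most efficiently.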

As AI continues to evolve quickly, many tradeoffs in performance, power, efficiency, programmability, and area must be balanced. A dedicated, custom-designed NPU lets chip designers make these tradeoffs deliberately and keep the hardware tightly aligned with the direction of the AI industry.

Conclusion

Smartphones require dedicated AI processing units, or NPUs, to efficiently handle AI workloads like facial recognition, object detection, and speech recognition without overburdening the CPU. NPUs, designed specifically for neural network tasks, use a data-driven parallel computing architecture to process large volumes of multimedia data efficiently. This specialization allows NPUs to deliver fast, power-efficient AI computations, improving both performance and battery life. Major smartphone and chip vendors such as Apple, Huawei, Qualcomm, MediaTek, and Samsung have integrated NPUs into their chipsets to support advanced AI features, highlighting the growing importance of these processors in modern mobile devices.