Introduction

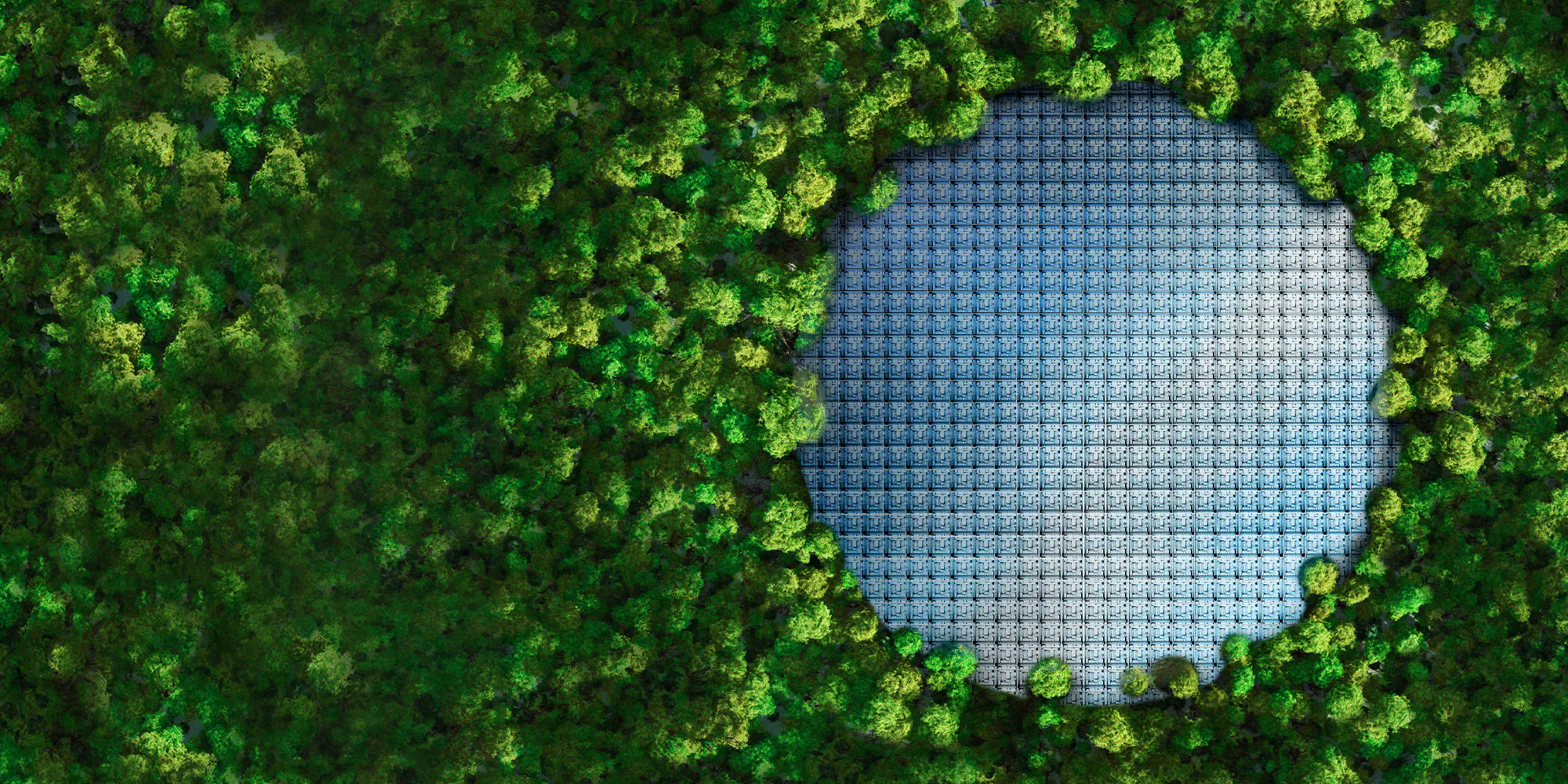

AI hardware consumes a lot of energy, produces a lot of heat, and is responsible for a lot of CO2 emissions. Did you know that training a single large machine translation model may generate as much CO2 as 36 average Americans emit in a year?
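Estimates like this are usually built from a simple chain of multiplications. You take the energy drawn by the hardware and scale it up by the data center's Power Usage Effectiveness (PUE) to account for cooling and other overhead. You then multiply by the carbon intensity of the local electricity grid. The sketch below shows that arithmetic in Python. The function name and every input value are hypothetical placeholders, not figures from any real training run.

```python
def training_emissions_kg(
    num_accelerators: int,
    avg_power_watts: float,
    hours: float,
    pue: float,
    grid_kg_co2_per_kwh: float,
) -> float:
    """Estimate operational CO2 (kg) for a training run.

    energy_kwh   = accelerators * avg power (kW) * hours * PUE
    emissions_kg = energy_kwh * grid carbon intensity (kg CO2 per kWh)
    """
    it_energy_kwh = num_accelerators * (avg_power_watts / 1000.0) * hours
    # PUE scales IT energy up to include cooling and other facility overhead.
    facility_energy_kwh = it_energy_kwh * pue
    return facility_energy_kwh * grid_kg_co2_per_kwh


# Hypothetical inputs: 64 accelerators averaging 300 W for 240 hours,
# a PUE of 1.2, and a grid intensity of 0.4 kg CO2 per kWh.
estimate = training_emissions_kg(64, 300.0, 240.0, 1.2, 0.4)
print(f"Estimated emissions: {estimate:,.0f} kg CO2")
```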

This is not sustainable for the earth or the future of AI.

Fortunately, there are ways to make AI hardware more energy-efficient and eco-friendly.

In this article, we will explore some of the strategies and techniques that researchers and engineers are using to design and optimize AI hardware for the green frontier. We will also discuss some of the challenges and opportunities that lie ahead for this emerging field.

1. Specialized Hardware Design

One of the most important methods to increase AI hardware’s energy efficiency is to build it specifically for AI workloads.

This means using specialized hardware platforms that can perform AI operations faster and with less power than general-purpose processors. Some examples of specialized hardware platforms are:

a. ASICs (Application-Specific Integrated Circuits):

These are custom chips tailored to a specific class of AI workloads, such as image recognition or natural language processing. ASICs can achieve high performance and low power consumption by eliminating the overhead of general-purpose logic and exploiting parallelism.

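For instance, Google’s Tensor Processing Unit (TPU) is an ASIC for deep learning. Google reported that its first-generation TPU ran inference workloads roughly 15 to 30 times faster than contemporary CPUs and GPUs, with 30 to 80 times higher performance per watt.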
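Matrix-multiply ASICs get much of their efficiency from a systolic array. This is a grid of simple multiply-accumulate (MAC) units that pass operands to their neighbors every cycle, so each value fetched from memory is reused many times instead of being re-read. The Python sketch below simulates a small output-stationary systolic array cycle by cycle. It is a teaching model built on that assumption. The TPU's real array differs in dataflow and scale, and `systolic_matmul` is a hypothetical name.

```python
def systolic_matmul(A, B):
    """Cycle-by-cycle simulation of an output-stationary systolic array.

    PE (i, j) owns output C[i][j]. Rows of A enter from the left edge and
    columns of B enter from the top edge, each skewed by one cycle per
    row/column, so PE (i, j) sees A[i][k] and B[k][j] at cycle i + j + k.
    """
    n, depth, m = len(A), len(B), len(B[0])
    if any(len(row) != depth for row in A):
        raise ValueError("inner dimensions must match")
    acc = [[0] * m for _ in range(n)]
    a_reg = [[0] * m for _ in range(n)]  # operand moving right through each PE
    b_reg = [[0] * m for _ in range(n)]  # operand moving down through each PE
    for t in range(depth + n + m - 2):
        new_a = [[0] * m for _ in range(n)]
        new_b = [[0] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                if j == 0:  # left edge: inject skewed row i of A (zeros outside range)
                    k = t - i
                    new_a[i][j] = A[i][k] if 0 <= k < depth else 0
                else:  # take the value the left neighbor held last cycle
                    new_a[i][j] = a_reg[i][j - 1]
                if i == 0:  # top edge: inject skewed column j of B
                    k = t - j
                    new_b[i][j] = B[k][j] if 0 <= k < depth else 0
                else:  # take the value the upper neighbor held last cycle
                    new_b[i][j] = b_reg[i - 1][j]
        a_reg, b_reg = new_a, new_b
        # Every PE does one multiply-accumulate per cycle (in parallel, in hardware).
        for i in range(n):
            for j in range(m):
                acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc


print(systolic_matmul([[1, 2, 3], [4, 5, 6]], [[7, 8], [9, 10], [11, 12]]))
```

In hardware all PEs work at the same time, so an n x m array finishes in depth + n + m - 2 cycles rather than n·m·depth sequential steps. The Python loops only emulate that parallelism.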

b. FPGAs (Field-Programmable Gate Arrays):

These are reprogrammable chips whose logic can be configured to implement specific AI functions. FPGAs can be more energy-efficient than general-purpose processors because their circuitry is tailored to the task and uses only the resources it requires. For example, Microsoft’s Project Brainwave uses FPGAs to accelerate real-time AI inference in the cloud.

2. Quantization and Pruning

Another way to improve the energy efficiency of AI hardware is to reduce the precision and complexity of the computations and data involved. This can be done by using techniques such as:

a. Quantization:

Quantization is the process of reducing the number of bits used to represent numerical values in computations.

For example, using 8-bit integers instead of 32-bit floating-point numbers can save energy, often without a significant drop in accuracy. Quantization also reduces the memory and bandwidth requirements of AI models, leading to further energy savings.
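Here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. It assumes one scale factor for the whole tensor and no zero point. Production toolchains often use per-channel scales, zero points, and calibration data instead. The sketch shows the 4x storage reduction and that the rounding error stays within half the scale. Function names and weight values are hypothetical.

```python
from array import array


def quantize_int8(values):
    """Symmetric per-tensor quantization: q = round(x / scale), scale = max|x| / 127."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Clamp to the symmetric int8 range [-127, 127] and store one byte per value.
    q = array("b", (max(-127, min(127, round(v / scale))) for v in values))
    return q, scale


def dequantize_int8(q, scale):
    """Map int8 codes back to approximate real values."""
    return [code * scale for code in q]


# Hypothetical weights stored as 32-bit floats (typecode "f").
weights = array("f", [0.42, -1.37, 0.05, 0.91, -0.66, 1.10])
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))

print("fp32 bytes:", len(weights) * weights.itemsize)
print("int8 bytes:", len(q) * q.itemsize)
print("max abs error:", max_err, "(bound: scale / 2 =", scale / 2, ")")
```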

b. Pruning:

Pruning is the process of removing small-magnitude weights from a neural network, or setting them to zero. Pruning can reduce the computational workload and memory requirements of AI models. This mainly saves energy during inference, and also during training for methods that prune as they train. It can also sometimes improve the generalization and robustness of AI models by eliminating redundant or noisy connections.
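The simplest variant, magnitude pruning, can be sketched as follows. The sketch assumes unstructured pruning of a flat weight list, and the names and values are hypothetical. Zeroed weights only save energy if the hardware or kernels actually skip them, for example through sparse storage formats or structured sparsity patterns. In practice, pruning is also usually followed by fine-tuning to recover accuracy.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest |w|."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:n_prune])
    return [0.0 if i in pruned_idx else w for i, w in enumerate(weights)]


weights = [0.8, -0.05, 0.3, 0.01, -0.9, 0.12, -0.02, 0.6]
pruned = magnitude_prune(weights, 0.5)
nonzero = sum(1 for w in pruned if w != 0.0)
print(pruned)
print(f"multiply-accumulates still needed: {nonzero} of {len(weights)}")
```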

3. Hardware-Software Co-Design

A third way to improve the energy efficiency of AI hardware is to design the hardware and the software that runs on it together, rather than in isolation. This can be done by using techniques such as:

Model Optimization: Model optimization is the process of designing AI models that run more energy-efficiently on a given hardware platform. This can involve choosing the model architecture, hyperparameters, and data formats to match the hardware’s capabilities and constraints. For example, MobileNet is a family of models designed for mobile devices. It uses depthwise separable convolutions to achieve good accuracy with low latency and modest power consumption.
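MobileNet's savings come largely from replacing standard convolutions with depthwise separable ones. A depthwise separable convolution is a per-channel k x k convolution followed by a 1 x 1 convolution that mixes channels. The sketch below counts multiply-accumulate operations (MACs) for both, assuming stride 1 and "same" padding. The layer sizes are hypothetical. The cost ratio works out to 1/c_out + 1/k², which the MobileNet paper also derives.

```python
def standard_conv_macs(h, w, c_in, c_out, k):
    """MACs for a k x k convolution producing an h x w x c_out output."""
    return h * w * c_in * c_out * k * k


def depthwise_separable_macs(h, w, c_in, c_out, k):
    """MACs for a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    depthwise = h * w * c_in * k * k  # one k x k filter per input channel
    pointwise = h * w * c_in * c_out  # 1 x 1 conv mixes channels
    return depthwise + pointwise


# Hypothetical layer: 56 x 56 output, 128 -> 128 channels, 3 x 3 kernel,
# stride 1 with "same" padding so the output keeps the input's spatial size.
std = standard_conv_macs(56, 56, 128, 128, 3)
sep = depthwise_separable_macs(56, 56, 128, 128, 3)
print(f"standard: {std:,} MACs, separable: {sep:,} MACs, ratio {std / sep:.1f}x")
```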

Compiler Optimization: Compiler optimization is the process of producing code that makes efficient use of hardware features. This can involve applying various optimizations, such as loop unrolling, vectorization, and parallelization, to improve the performance and energy efficiency of the code.

For example, TVM is a compiler framework that can optimize AI models for different hardware platforms, achieving up to 30 times speedup and 10 times energy reduction compared to existing frameworks.
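To make "loop unrolling" concrete, here is the kind of source-level transformation a compiler applies, written by hand in Python for a dot product. It is an illustration only. It will not run faster in CPython, and it does not show TVM's actual scheduling primitives. The function names are hypothetical.

```python
def dot_naive(a, b):
    """Reference dot product: one multiply-add per loop iteration."""
    total = 0.0
    for i in range(len(a)):
        total += a[i] * b[i]
    return total


def dot_unrolled4(a, b):
    """The same computation after unrolling the loop by 4.

    Four independent accumulators remove the serial dependence on a single
    `total`. This lets compiled code overlap the multiply-adds or map them
    onto SIMD lanes. A remainder loop handles lengths not divisible by 4.
    """
    n = len(a)
    s0 = s1 = s2 = s3 = 0.0
    i = 0
    while i + 4 <= n:
        s0 += a[i] * b[i]
        s1 += a[i + 1] * b[i + 1]
        s2 += a[i + 2] * b[i + 2]
        s3 += a[i + 3] * b[i + 3]
        i += 4
    total = (s0 + s1) + (s2 + s3)
    while i < n:  # remainder loop
        total += a[i] * b[i]
        i += 1
    return total


xs = list(range(10))
print(dot_naive(xs, xs), dot_unrolled4(xs, xs))
```

The unrolled version adds the products in a different order, so results on floating-point inputs can differ in the last bits. This is why C compilers typically reassociate floating-point sums only when fast-math-style flags allow it.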

4. Dynamic Scaling and Power Management

A fourth way to improve the energy efficiency of AI hardware is to adjust the operating conditions of the hardware components based on the workload requirements.

Dynamic Voltage and Frequency Scaling (DVFS): This is the technique that adjusts the operating voltage and clock frequency of hardware components based on workload needs. DVFS can save energy by reducing the power consumption when the hardware is not fully utilized.

For example, NVIDIA’s Jetson Nano is a low-power AI platform that can dynamically scale its performance and power consumption based on the application needs.
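DVFS pays off because of the dynamic power relation P = a·C·V²·f. For a fixed amount of work (a fixed number of cycles), runtime is cycles / f. Dynamic energy is therefore a·C·V²·cycles, which falls with the square of the voltage, and lower frequencies allow lower voltages. The sketch below picks the lowest-energy operating point that still meets a deadline. The voltage/frequency table, capacitance, and workload are hypothetical, and leakage power is ignored. When leakage dominates, running fast and then idling ("race to idle") can win instead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    freq_hz: float
    voltage_v: float


def dynamic_energy_j(point, cycles, c_eff_farads, activity=1.0):
    """Dynamic energy for a fixed amount of work: P = a*C*V^2*f, t = cycles/f."""
    power_w = activity * c_eff_farads * point.voltage_v ** 2 * point.freq_hz
    runtime_s = cycles / point.freq_hz
    return power_w * runtime_s  # simplifies to a*C*V^2*cycles


def pick_operating_point(points, cycles, deadline_s, c_eff_farads):
    """Choose the lowest-energy point that still finishes before the deadline."""
    feasible = [p for p in points if cycles / p.freq_hz <= deadline_s]
    if not feasible:
        raise ValueError("no operating point meets the deadline")
    return min(feasible, key=lambda p: dynamic_energy_j(p, cycles, c_eff_farads))


# Hypothetical voltage/frequency table and workload.
POINTS = [
    OperatingPoint(0.6e9, 0.70),
    OperatingPoint(1.0e9, 0.85),
    OperatingPoint(1.5e9, 1.05),
]
CYCLES = 6e8
print(pick_operating_point(POINTS, CYCLES, deadline_s=1.0, c_eff_farads=1e-9))
print(pick_operating_point(POINTS, CYCLES, deadline_s=0.7, c_eff_farads=1e-9))
```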

Sleep States and Power Gating: These techniques put idle hardware components, or entire sections of a chip, into low-power sleep states, or cut off their power completely (power gating), when they are not in use.

These techniques can save energy by minimizing the idle and leakage power consumption. For example, Intel’s Movidius Myriad X is a vision processing unit that can switch between different power modes and gate off unused cores to optimize the energy efficiency.
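Whether gating a block is worthwhile depends on how long it stays idle. Entering and leaving the gated state costs energy for saving state, recharging supply rails, and waking up. Below is a minimal break-even model. The power and overhead numbers are hypothetical, the function names are illustrative, and wake-up latency is ignored.

```python
def gating_saves_energy(idle_s, leak_w, gated_w, transition_j):
    """True if gating for this idle period uses less energy than staying on.

    Staying on:  leak_w * idle_s
    Gating:      transition_j (enter + wake overhead) + gated_w * idle_s
    """
    return transition_j + gated_w * idle_s < leak_w * idle_s


def break_even_s(leak_w, gated_w, transition_j):
    """Idle time above which gating pays off: transition_j / (leak_w - gated_w)."""
    if leak_w <= gated_w:
        raise ValueError("gating must reduce idle power")
    return transition_j / (leak_w - gated_w)


# Hypothetical block: 0.2 W leakage when idle, 0.01 W residual when gated,
# and 1.9 mJ spent entering and leaving the gated state.
print(break_even_s(0.2, 0.01, 0.0019))  # roughly 0.01 s (10 ms)
print(gating_saves_energy(0.005, 0.2, 0.01, 0.0019))
print(gating_saves_energy(0.1, 0.2, 0.01, 0.0019))
```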

5. Cooling and Thermal Management

A fifth way to improve the energy efficiency of AI hardware is to prevent the hardware components from overheating.

Efficient Cooling: This is the technique of using effective cooling solutions to remove the heat generated by the hardware components. Efficient cooling can reduce energy consumption and improve the reliability of the hardware. For example, IBM’s Summit supercomputer was ranked the world’s fastest from 2018 to 2020. It uses water cooling to handle the heat produced by its thousands of CPUs and GPUs.
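A common way to quantify how much energy a facility spends on cooling and other overhead is Power Usage Effectiveness (PUE). The Green Grid defines it as total facility energy divided by the energy delivered to IT equipment, so an ideal facility has a PUE of 1.0. The sketch below compares two hypothetical cooling setups for the same IT load. All values are illustrative assumptions, not Summit's figures.

```python
def facility_energy_kwh(it_energy_kwh, pue):
    """PUE = total facility energy / IT equipment energy, so total = IT * PUE."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_kwh * pue


def overhead_energy_kwh(it_energy_kwh, pue):
    """Energy spent on cooling, power distribution, and other non-IT loads."""
    return facility_energy_kwh(it_energy_kwh, pue) - it_energy_kwh


IT_LOAD_KWH = 1_000_000  # hypothetical annual energy drawn by the AI servers
for pue in (1.6, 1.1):
    print(f"PUE {pue}: overhead {overhead_energy_kwh(IT_LOAD_KWH, pue):,.0f} kWh")
saved = facility_energy_kwh(IT_LOAD_KWH, 1.6) - facility_energy_kwh(IT_LOAD_KWH, 1.1)
print(f"Savings from better cooling: {saved:,.0f} kWh")
```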

Energy-Aware Training: This is the technique of using regularization methods during the training of AI models with the aim of reducing heat generation and energy consumption. Regularization methods, such as dropout and weight decay, improve the generalization and robustness of AI models by preventing overfitting and reducing effective model complexity. This can allow smaller models or shorter training runs.

For example, one study reported that using dropout during the training of deep neural networks reduced power consumption by up to 50%.
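For reference, here is what the two regularizers named above compute, as a minimal pure-Python sketch (function names are hypothetical). It only illustrates the mechanics. It does not by itself demonstrate energy or heat savings. Those depend on whether the software and hardware exploit the zeroed activations, or on whether regularization lets a smaller model or a shorter run reach the target accuracy.

```python
import random


def inverted_dropout(activations, p, rng):
    """Training-time dropout: zero each value with probability p and scale
    the survivors by 1 / (1 - p) so the expected activation is unchanged."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def sgd_step_weight_decay(weights, grads, lr, weight_decay):
    """One SGD step with L2-style weight decay: w <- w - lr * (g + wd * w)."""
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]


rng = random.Random(0)  # fixed seed so the example is reproducible
dropped = inverted_dropout([1.0] * 1000, p=0.5, rng=rng)
print("fraction zeroed:", dropped.count(0.0) / len(dropped))
print(sgd_step_weight_decay([1.0, -2.0], [0.0, 0.0], lr=0.1, weight_decay=0.5))
```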

Challenges and Opportunities

While the techniques discussed above can help make AI hardware more energy-efficient and eco-friendly, there are still many challenges and opportunities for further research and development. Some of the open questions and directions are:

How to measure and benchmark the energy efficiency of AI hardware?

MLPerf, a set of benchmark suites maintained by the MLCommons consortium, aims to provide fair and useful benchmarks for measuring the performance and energy efficiency of AI systems.
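Benchmarks of this kind ultimately report a work-per-energy figure. Below is a minimal sketch of computing inferences per joule from a sampled power trace, using trapezoidal integration to turn watts into joules. This is not MLPerf's official measurement methodology, and the trace and inference count are hypothetical.

```python
def energy_joules(timestamps_s, power_w):
    """Integrate a sampled power trace (watts) over time with the trapezoidal rule."""
    if len(timestamps_s) != len(power_w) or len(timestamps_s) < 2:
        raise ValueError("need at least two matching time/power samples")
    total = 0.0
    for i in range(1, len(timestamps_s)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        total += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return total


def inferences_per_joule(num_inferences, timestamps_s, power_w):
    """Energy efficiency = completed work / energy consumed."""
    return num_inferences / energy_joules(timestamps_s, power_w)


# Hypothetical 4-second run sampled once per second.
times = [0.0, 1.0, 2.0, 3.0, 4.0]
watts = [100.0, 120.0, 120.0, 110.0, 100.0]
print("energy (J):", energy_joules(times, watts))
print("inferences/J:", inferences_per_joule(9000, times, watts))
```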

How to balance the trade-offs between energy efficiency and other objectives, such as accuracy, latency, and security?

Eyeriss, a research chip from MIT, reconfigures its dataflow for the shapes of different neural network layers. The goal is to keep energy efficiency high without restricting which models it can run.
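On the software side, a common way to reason about such trade-offs is to keep only Pareto-optimal configurations. A configuration is Pareto-optimal if no other option is at least as accurate and uses no more energy while being strictly better on one of the two. You then pick the cheapest Pareto-optimal configuration that satisfies the application's constraints. The configurations and numbers below are hypothetical, and this is not how Eyeriss itself is configured.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    name: str
    accuracy: float
    energy_mj: float  # energy per inference, millijoules
    latency_ms: float


def pareto_front(configs):
    """Keep configs that no other config dominates on (accuracy, energy)."""
    front = []
    for c in configs:
        dominated = any(
            o.accuracy >= c.accuracy
            and o.energy_mj <= c.energy_mj
            and (o.accuracy > c.accuracy or o.energy_mj < c.energy_mj)
            for o in configs
        )
        if not dominated:
            front.append(c)
    return front


def cheapest_meeting(configs, min_accuracy, max_latency_ms):
    """Lowest-energy config meeting accuracy and latency targets, or None."""
    ok = [c for c in configs if c.accuracy >= min_accuracy and c.latency_ms <= max_latency_ms]
    return min(ok, key=lambda c: c.energy_mj) if ok else None


CONFIGS = [
    Config("fp32-large", 0.91, 12.0, 30.0),
    Config("int8-large", 0.90, 4.0, 12.0),
    Config("int8-small", 0.86, 1.5, 5.0),
    Config("fp16-small", 0.86, 2.5, 6.0),
]
print([c.name for c in pareto_front(CONFIGS)])
print(cheapest_meeting(CONFIGS, min_accuracy=0.88, max_latency_ms=20.0))
```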

How to design and optimize AI hardware for emerging domains and applications, such as edge computing, neuromorphic computing, and quantum computing?

Intel’s Loihi is a neuromorphic chip that mimics aspects of the brain’s structure and function, using spiking neurons to achieve high energy efficiency and adaptability for AI applications.
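Neuromorphic chips compute with spiking neurons. These neurons emit events only when their membrane potential crosses a threshold, so little work happens when inputs are quiet. A textbook discrete-time leaky integrate-and-fire (LIF) neuron is sketched below. Loihi implements a more elaborate, configurable spiking-neuron model, and the function name and parameter values here are hypothetical.

```python
def simulate_lif(input_current, v_thresh=1.0, leak=0.9, v_reset=0.0):
    """Discrete-time leaky integrate-and-fire neuron.

    Each step: v = leak * v + input. If v reaches v_thresh the neuron
    emits a spike (1) and v resets; otherwise it emits nothing (0).
    """
    v = v_reset
    spikes = []
    for current in input_current:
        v = leak * v + current  # leak toward zero, then integrate input
        if v >= v_thresh:
            spikes.append(1)
            v = v_reset
        else:
            spikes.append(0)
    return spikes


inputs = [0.5, 0.5, 0.5, 0.0, 0.0, 0.5, 0.5, 0.5]
spikes = simulate_lif(inputs)
print(spikes, "spike events:", sum(spikes))
```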

Conclusion

AI hardware is a key enabler for the advancement and adoption of AI technologies. However, it also poses a significant environmental challenge due to its high energy consumption and carbon footprint.

In this article, we have explored some of the strategies and techniques that can help make AI hardware more energy-efficient and eco-friendly. We have also discussed some of the challenges and opportunities for future research and development in this field.

We hope that this article can inspire and inform readers interested in the green frontier of AI hardware. As a saying often attributed to Albert Einstein puts it, “We can’t solve problems by using the same kind of thinking we used when we created them.”