Introduction:

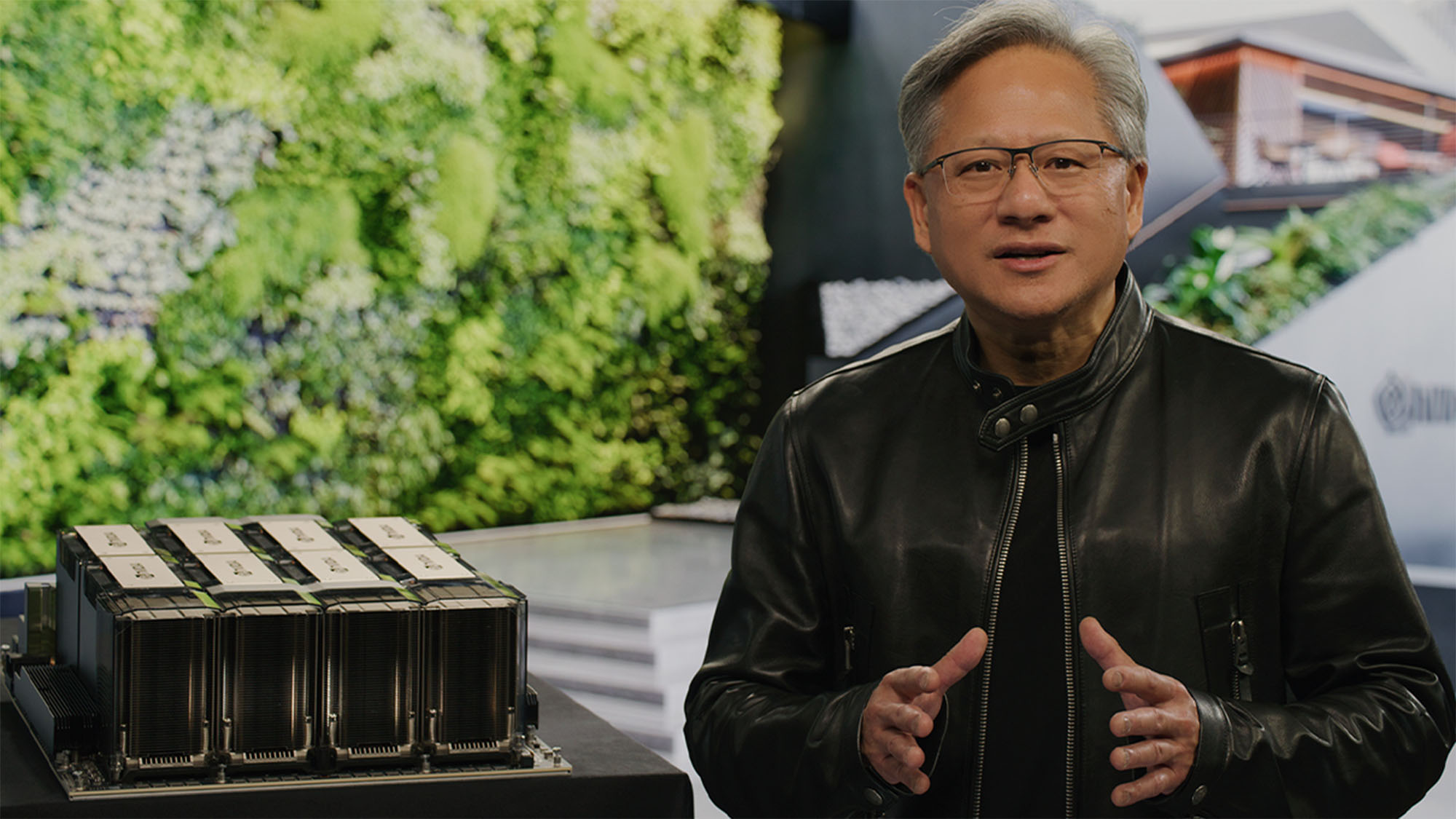

In the ever-evolving landscape of technology, Nvidia has emerged as a true titan, reaching the remarkable milestone of a trillion-dollar market capitalization. A lesser-known asset called CUDA played a large part in getting it there. This achievement results from a convergence of strategic decisions, technological breakthroughs, and a keen understanding of market trends.

Nvidia’s GPUs became the go-to hardware for powering AI workloads, thanks to their superior performance over CPUs on highly parallel computations. This allowed Nvidia to capitalize on the explosive growth of AI across various industries, including data centers, healthcare, and self-driving cars.


- May 30, 2023: Nvidia briefly surpasses $1 trillion market cap for the first time.

- May 24, 2023: Nvidia reports strong fiscal first-quarter results and forecasts roughly 50% sales growth for the next quarter.

- 2023: Nvidia becomes a major player in the generative AI revolution.

- 2020: Nvidia announces a deal to acquire Arm, a major chip designer. The deal was abandoned in February 2022 amid regulatory opposition.

- 2019: Nvidia agrees to acquire Mellanox Technologies, a high-performance networking company. The acquisition closed in April 2020.

- 2016: Nvidia’s market cap starts its upward trajectory due to strong gaming and AI demand.


What is Nvidia CUDA?

CUDA, which stands for Compute Unified Device Architecture, is a parallel computing platform and programming model developed by NVIDIA. It enables developers to harness the computational power of NVIDIA GPUs (Graphics Processing Units) for general-purpose processing, beyond traditional graphics rendering. In simple terms, it is not a separate programming language: it lets developers write GPU code in familiar languages such as C and C++ using a small set of extensions, together with a runtime, libraries, and tools.
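To make the programming model more concrete, here is a minimal sketch in plain Python that emulates, on the CPU, how a CUDA kernel typically maps threads to data. It is not CUDA code and uses no GPU. The names `vector_add_kernel`, `launch`, and `vector_add` are hypothetical and exist only for this illustration. The index arithmetic mirrors the familiar `blockIdx.x * blockDim.x + threadIdx.x` pattern.

```python
# CPU-only emulation of how a CUDA kernel indexes data; no GPU is involved.
# All function names here are hypothetical and chosen for illustration.

def vector_add_kernel(block_idx, thread_idx, block_dim, a, b, out):
    """Work done by one emulated thread, mirroring a typical CUDA kernel body."""
    # Global index, analogous to blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    # Bounds guard: the last block may contain threads past the end of the data
    if i < len(out):
        out[i] = a[i] + b[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Run the kernel once per (block, thread) pair.

    A real GPU would execute these invocations in parallel; this loop
    runs them one after another purely to show the indexing scheme.
    """
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, thread_idx, block_dim, *args)


def vector_add(a, b, block_dim=4):
    """Add two equal-length lists element by element using the emulated launch."""
    n = len(a)
    out = [0] * n
    # Round up so that grid_dim * block_dim covers all n elements
    grid_dim = (n + block_dim - 1) // block_dim
    launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
    return out


if __name__ == "__main__":
    print(vector_add([1, 2, 3, 4, 5], [10, 20, 30, 40, 50]))
```

The point of the sketch is the division of labour: the programmer writes the per-thread body and chooses the grid and block sizes, while the platform takes care of running the many threads.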


Why CUDA by Nvidia?

GPU compute power is limited by three factors, namely:

- Memory Bandwidth – The memory system can feed data at only about one-sixth of the rate at which the execution resources can request it.

- Occupancy – Occupancy is a measure of how much of the GPU's on-chip resources (registers, shared memory, and thread slots) is in use by running computations, and how much is left for new work.

- Concurrency – It is the number of independent computations that can be executed simultaneously by a GPU unit.

CUDA optimizes GPU tasks by efficiently using memory bandwidth and processing cores. It prioritizes and organizes tasks for maximum efficiency, ensuring available space for upcoming operations. Tasks are executed in parallel streams, maximizing concurrency.

Brief history of NVIDIA's role in CUDA development

NVIDIA played a crucial role in developing CUDA, shaping parallel computing and GPU programming. CUDA, a parallel computing platform, empowers developers to utilize NVIDIA GPUs for general-purpose processing, extending beyond graphics.

Introduction of CUDA (2006): NVIDIA first announced CUDA in November 2006, alongside the GeForce 8800 GPU. This marked a significant shift as it allowed developers to harness the parallel processing power of GPUs for scientific and general-purpose computing tasks.

Early GPU Computing Initiatives: Before CUDA, GPUs were primarily designed for graphics rendering. NVIDIA recognized the untapped potential of GPUs for parallel computing tasks and started laying the groundwork for their use in scientific and technical computing applications.

CUDA Toolkit Release (2007): NVIDIA released the first version of the CUDA Toolkit in 2007. This provided developers with the necessary software tools and libraries to program GPUs using the CUDA programming model. This included the CUDA C programming language, a parallel computing architecture, and development tools.

GPGPU Revolution: CUDA played a pivotal role in popularizing General-Purpose computing on Graphics Processing Units (GPGPU). It allowed researchers, scientists, and developers to leverage the massive parallel processing capabilities of GPUs for a wide range of applications beyond graphics, including scientific simulations, machine learning, and data analytics.


Evolution of CUDA Continues

Evolution of CUDA Architecture: NVIDIA consistently refined the CUDA architecture, adding new features and optimizations while supporting the latest GPU hardware. This ongoing evolution has ensured that CUDA remains a top choice for developers in high-performance computing applications.

Expanding Ecosystem: NVIDIA fostered a vibrant ecosystem around CUDA by collaborating with developers, researchers, and industry partners. This led to the creation of a rich library of GPU-accelerated software across various domains, further solidifying CUDA’s position as a leading platform for parallel computing.

Integration with Deep Learning: CUDA became essential for deep neural network development as deep learning gained traction. Frameworks like TensorFlow and PyTorch used CUDA to speed up training and inference processes, contributing to the success of modern AI applications.

CUDA in HPC (High-Performance Computing): CUDA found extensive use in the realm of high-performance computing, where parallel processing is crucial. Its adoption in scientific simulations, weather modeling, financial modeling, and other computationally intensive tasks showcased the versatility of GPU computing.

CUDA-X AI and GPU-Accelerated Libraries: NVIDIA broadened its CUDA ecosystem with CUDA-X AI and GPU-accelerated libraries. These libraries, like cuDNN and cuBLAS, streamlined the development of GPU-accelerated applications, particularly in AI.

Continued Innovation: NVIDIA remains committed to CUDA development, continually introducing new features, optimizing performance, and supporting the latest GPU architectures. The company’s dedication to advancing GPU computing continues to influence the trajectory of parallel processing and high-performance computing.


Three pillars of GPU compute

GPU compute is based on three pillars, namely:

- Memory bandwidth

- Memory occupancy

- Compute concurrency

Let us understand these one by one.

Memory bandwidth: Memory bandwidth is the rate at which data can be transferred between the GPU and its memory. It is usually measured in gigabytes per second (GB/s) or, on recent high-end GPUs, terabytes per second (TB/s).

Memory bandwidth determines how fast the GPU can access and process large amounts of data. The higher the memory bandwidth, the faster the GPU can perform computations.
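As a rough worked example, theoretical peak bandwidth can be estimated from the memory bus width and the per-pin data rate. The sketch below assumes illustrative figures (a 384-bit bus at 21 Gbit/s per pin) rather than the specification of any particular product, and the function names are hypothetical.

```python
# Back-of-the-envelope memory bandwidth arithmetic.
# The input figures used in the example are assumptions for illustration only.

def peak_bandwidth_gb_s(bus_width_bits, data_rate_gbps_per_pin):
    """Theoretical peak bandwidth in GB/s.

    Each pin moves data_rate_gbps_per_pin gigabits per second, the bus has
    bus_width_bits pins, and dividing by 8 converts bits to bytes.
    """
    return bus_width_bits * data_rate_gbps_per_pin / 8


def min_transfer_time_ms(num_bytes, bandwidth_gb_s):
    """Lower bound on the time to stream num_bytes once, in milliseconds.

    Ignores latency, caching, and access-pattern effects, so real kernels
    will usually take longer.
    """
    return num_bytes / (bandwidth_gb_s * 1e9) * 1e3


if __name__ == "__main__":
    bw = peak_bandwidth_gb_s(bus_width_bits=384, data_rate_gbps_per_pin=21)
    print(f"peak bandwidth: {bw} GB/s")
    print(f"time to read 4 GB once: {min_transfer_time_ms(4e9, bw):.2f} ms")
```

A kernel that must read every byte of its input at least once cannot finish faster than this lower bound, however many cores are available, which is why bandwidth is treated as a pillar of its own.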

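Memory occupancy: Occupancy is a measure of how much of each GPU multiprocessor's on-chip resources (registers, shared memory, and thread slots) is in use by resident computations, and how much is left for new work. In CUDA it is usually expressed as the ratio of active warps to the maximum number of warps a multiprocessor can hold.

Higher occupancy generally helps the GPU hide memory latency, because more warps are ready to run while others wait for data, although the highest possible occupancy does not always give the best performance. You can imagine it as a 4D Tetris game where you have to fit incoming blocks as efficiently as possible while leaving enough empty space for upcoming blocks.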
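In CUDA's own terminology, occupancy is usually computed as the ratio of active warps on a multiprocessor to the maximum it can hold. The following is a simplified sketch of that calculation. The per-multiprocessor limits in `SMLimits` are assumed values for illustration, real limits vary by GPU architecture, and the model ignores allocation granularity, so treat the results as approximate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SMLimits:
    """Assumed per-multiprocessor limits; real values depend on the GPU."""
    max_threads: int = 2048
    max_blocks: int = 32
    registers: int = 65536
    shared_mem_bytes: int = 65536
    warp_size: int = 32


def occupancy(threads_per_block, regs_per_thread, smem_per_block, sm=SMLimits()):
    """Estimate occupancy as active warps / maximum warps on one multiprocessor.

    The number of resident blocks is capped by whichever resource runs out
    first: block slots, thread slots, registers, or shared memory.
    """
    # Threads are scheduled in whole warps, so round up
    warps_per_block = -(-threads_per_block // sm.warp_size)
    threads_allocated = warps_per_block * sm.warp_size

    limits = [
        sm.max_blocks,
        sm.max_threads // threads_allocated,
        sm.registers // (regs_per_thread * threads_allocated),
    ]
    if smem_per_block > 0:
        limits.append(sm.shared_mem_bytes // smem_per_block)

    resident_blocks = min(limits)
    max_warps = sm.max_threads // sm.warp_size
    return resident_blocks * warps_per_block / max_warps


if __name__ == "__main__":
    print(occupancy(threads_per_block=256, regs_per_thread=32, smem_per_block=0))
    print(occupancy(threads_per_block=256, regs_per_thread=64, smem_per_block=0))
```

Under these assumed limits, doubling the registers per thread from 32 to 64 halves the estimate from 1.0 to 0.5. This is the Tetris effect in numbers: larger pieces mean fewer of them fit at once.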

Compute concurrency: Compute concurrency represents the quantity of independent computations a GPU unit or thread group can execute simultaneously, typically expressed as a number or fraction. It determines the level of parallel work achievable within a specified timeframe.

The higher the compute concurrency, the more productive and scalable the GPU performance. Compute concurrency hinges on factors like GPU unit or thread group architecture, encompassing cores, registers, caches, SIMD units, etc. Additionally, it is influenced by workload characteristics and requirements.
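One way to see why concurrency matters is Little's law: to keep a memory system busy, the amount of data in flight must be at least bandwidth multiplied by latency. The sketch below applies that rule with assumed figures (1000 GB/s bandwidth, 500 ns latency, 128-byte requests), which are illustrative and not measurements of any real GPU.

```python
import math


def bytes_in_flight(bandwidth_gb_s, latency_ns):
    """Data that must be in flight to saturate the memory system (Little's law).

    GB/s times ns gives bytes directly, because 1e9 B/s * 1e-9 s = 1 B.
    """
    return bandwidth_gb_s * latency_ns


def requests_in_flight(bandwidth_gb_s, latency_ns, bytes_per_request):
    """Number of independent memory requests needed, rounded up."""
    return math.ceil(bytes_in_flight(bandwidth_gb_s, latency_ns) / bytes_per_request)


if __name__ == "__main__":
    # Assumed, illustrative figures
    print(bytes_in_flight(1000, 500))
    print(requests_in_flight(1000, 500, bytes_per_request=128))
```

With these assumptions, thousands of independent requests have to be outstanding at any moment. A single thread cannot generate that many, which is why GPUs depend on running very large numbers of threads concurrently.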


How does CUDA help achieve the above?

CUDA allows programmers to leverage the power of GPUs for general-purpose computing. It provides a programming interface that lets programmers write code that can run on compatible GPUs, as well as libraries and tools that help with development and debugging.

You can imagine it as your broker in a stock market who buys or sells shares you want, on your behalf.

- Actions of buying and selling are GPU compute

- Broker is CUDA

- And you, the investor, are the programmer

We discussed that GPU compute is based on the three pillars of bandwidth, occupancy, and concurrency. While explaining how CUDA addresses all three is beyond the scope of this article, we shall see how CUDA helps achieve one of them, namely concurrency.
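CUDA exposes concurrency to the programmer through streams, which are queues of work that may overlap with one another. A common pattern is to split the data into chunks so that copying one chunk to the GPU, computing on another, and copying a third back can all happen at the same time. The sketch below is a simple timing model of that idea in plain Python, not CUDA code. It assumes that each of the three stages can overlap with the others and that every chunk takes the same time per stage. The function names and timings are hypothetical.

```python
# Timing model for overlapping copy and compute across chunks.
# Assumes three stages (copy in, compute, copy out) that can each run
# concurrently with the other two, and identical per-chunk stage times.

def serial_time(n_chunks, copy_in, compute, copy_out):
    """Total time when every chunk finishes all three stages before the next starts."""
    return n_chunks * (copy_in + compute + copy_out)


def pipelined_time(n_chunks, copy_in, compute, copy_out):
    """Total time when the stages of different chunks overlap.

    The first chunk pays for every stage; each later chunk adds only the
    duration of the slowest stage, which becomes the bottleneck.
    """
    if n_chunks == 0:
        return 0
    return (copy_in + compute + copy_out) + (n_chunks - 1) * max(copy_in, compute, copy_out)


if __name__ == "__main__":
    # Illustrative timings in milliseconds
    print(serial_time(4, 2, 3, 2))
    print(pipelined_time(4, 2, 3, 2))
```

With these made-up timings, four chunks take 28 ms back to back but 16 ms when overlapped. Real gains depend on the hardware and on how evenly the stages are balanced.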

How NVIDIA Earns Through CUDA: A Deep Dive

In the realm of parallel computing, NVIDIA’s CUDA (Compute Unified Device Architecture) has emerged as a game-changer. This platform and programming model leverages the power of NVIDIA’s GPUs (Graphics Processing Units) to accelerate computing applications. But how does NVIDIA monetize CUDA? Let’s delve into the intricacies of NVIDIA’s business model and explore how CUDA contributes to its revenue streams.

Hardware Sales: The Power of GPUs

NVIDIA’s primary source of revenue is the sale of GPUs, which are integral to the functioning of CUDA. NVIDIA’s GPUs excel in performance and seamlessly integrate with CUDA, providing a competitive advantage. The increased demand for these GPUs is notable, especially in high-performance computing sectors like AI, gaming, and data science. This has led to a substantial increase in NVIDIA’s hardware revenue, affirming the profitability of their hardware sales strategy.

Software Licensing: Monetizing Innovation

Beyond hardware, NVIDIA also monetizes CUDA through software licensing. NVIDIA has developed a suite of software libraries and frameworks built on CUDA. Some of these, such as the open-source RAPIDS data science libraries, are free to use, while enterprise offerings such as NVIDIA AI Enterprise and Omniverse Enterprise are licensed for a fee, creating an additional revenue stream for NVIDIA. The potential of NVIDIA’s software revenue is immense, considering the growing reliance of industries on software solutions for complex computational tasks.

Platform Value: Creating a Unified Ecosystem

NVIDIA’s earnings from CUDA are not limited to direct sales and licensing. The company has created a unified platform integrating hardware, software, and services, providing immense value to its customers. CUDA lies at the heart of this platform, offering benefits such as performance, scalability, security, and innovation. This platform strategy has given NVIDIA a competitive advantage, attracting a broad user base and fostering customer loyalty. The value derived from this platform significantly contributes to NVIDIA’s earnings.

Free resources = more developers

NVIDIA is convincing people to join CUDA through a combination of a powerful toolkit, a supportive developer ecosystem, and a range of initiatives and resources. Here’s how:

- CUDA Toolkit: The CUDA Toolkit is a comprehensive suite of software libraries and tools for developing GPU-accelerated applications. It includes APIs for linear algebra, image and video processing, deep learning, and more. This wide range of capabilities makes it attractive to developers in various domains.

- Supportive Developer Community: NVIDIA has fostered a vibrant CUDA developer community by providing free access to hundreds of SDKs, technical trainings, and opportunities to connect with millions of like-minded developers, researchers, and students through the NVIDIA Developer Program. This sense of community and shared learning can be a strong draw for new developers. Apart from this, NVIDIA organizes events and training, such as its GTC conference and Deep Learning Institute courses, to showcase the latest features and best practices of CUDA.

- Open-Source Samples: NVIDIA also maintains the CUDA Samples repository on GitHub, which contains code samples for various platforms and applications. These samples demonstrate how to use CUDA effectively, providing a practical learning resource for developers.

NVIDIA encourages participation in the CUDA platform by offering a robust toolkit, community support, and various resources. As developers engage with the free resources, their interest and investment in the platform grow. Not everything is free, however: NVIDIA also earns revenue from paid software licenses. This approach boosts CUDA adoption and creates an additional revenue stream beyond GPU sales.


Which industries use CUDA?

CUDA is a platform that allows software to use GPUs for general purpose computing, which can be useful for various industries that require high performance and parallel processing. Some of the industries that employ CUDA are:

- Gaming: CUDA enables developers to create realistic and immersive gaming experiences by using ray tracing, physics engines, and other advanced graphics features. GeForce RTX is a series of gaming GPUs that use CUDA technology.

- Data Centre: CUDA enables data centre operators to optimize their infrastructure and services by using GPU-accelerated computing. The Data Centre for Technical and Scientific Computing website demonstrates the utilization of NVIDIA GPUs in various data center scenarios, including cloud computing.

- Scientific Computing: CUDA supports a wide range of scientific applications such as linear algebra, image and video processing, deep learning, and graph analytics. NVIDIA's data center GPUs, formerly sold under the Tesla brand, are designed for workloads including machine learning, high-performance computing, and scientific visualization.

- Artificial Intelligence: CUDA provides a powerful framework for developing and deploying AI applications such as natural language processing, computer vision, speech recognition, and more. The Jetson series encompasses embedded GPU modules optimized for AI-powered autonomous machines, such as robots, drones, and smart cameras.


Conclusion

NVIDIA’s CUDA is more than just a parallel computing platform; it’s a revenue-generating powerhouse. Through hardware sales, software licensing, and platform value, CUDA has proven to be a significant contributor to NVIDIA’s earnings. As we approach an AI-driven future, CUDA’s significance in NVIDIA’s business model is poised to escalate. The company’s substantial investment in CUDA has proven fruitful, and it will be intriguing to observe how NVIDIA capitalizes on this technology for future growth. In the evolving landscape of high-performance computing, CUDA is likely to play an increasingly prominent role for NVIDIA.