Introduction

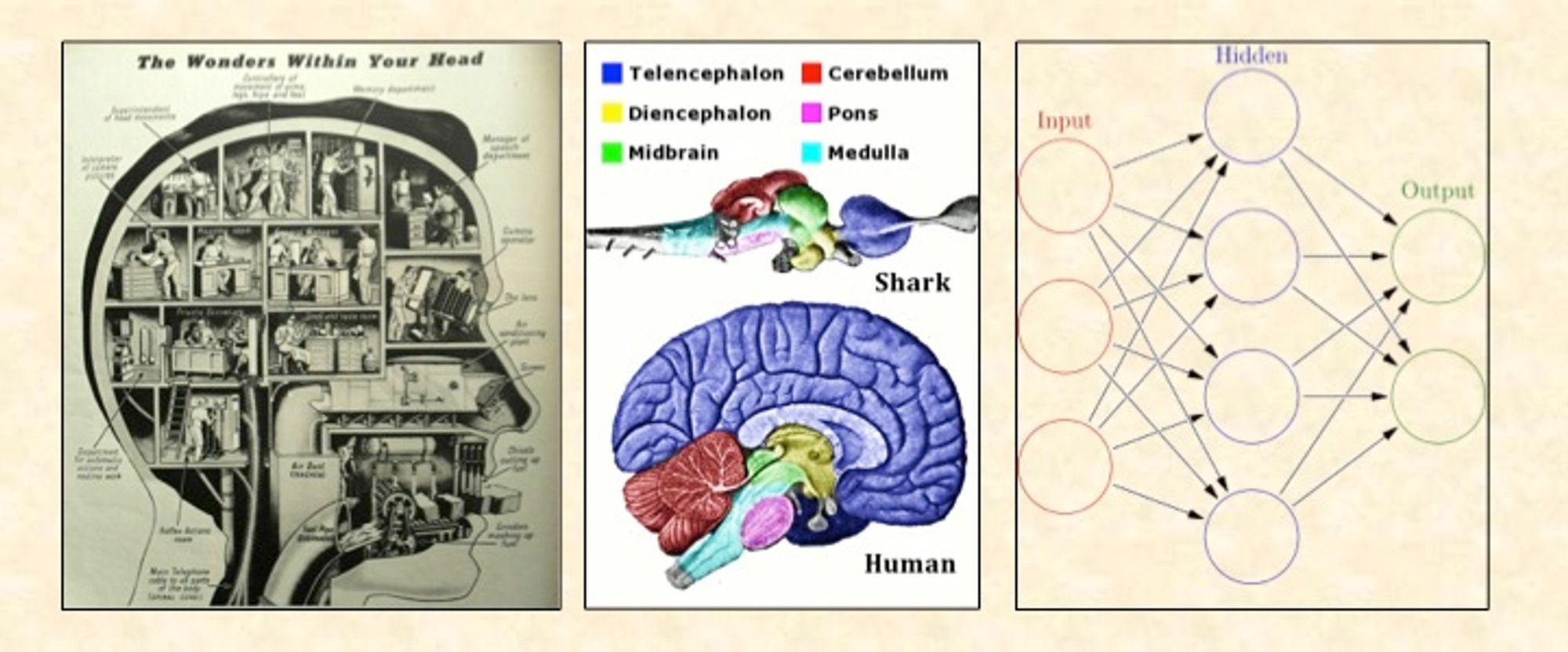

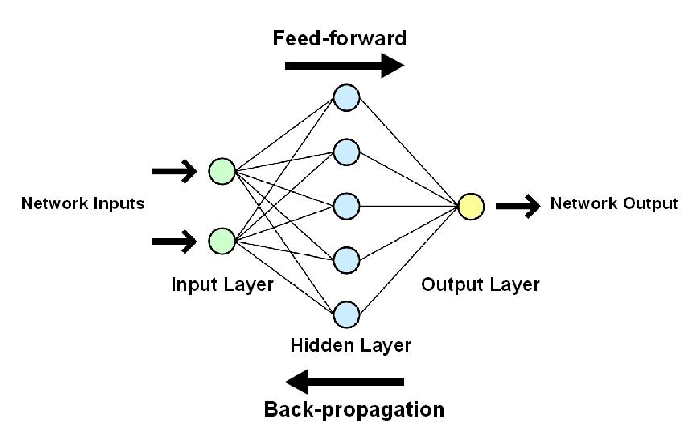

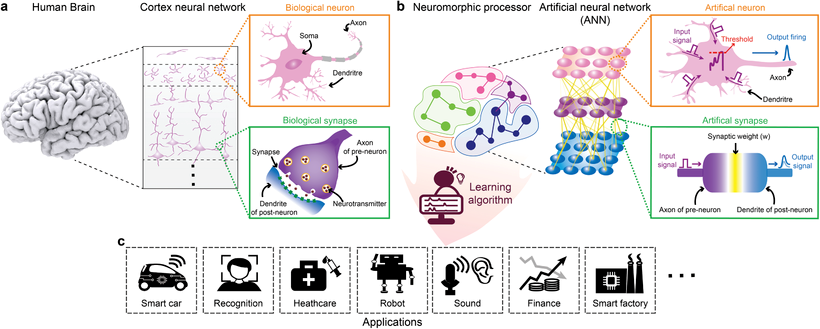

Neural networks are computational models loosely inspired by the structure and function of the human brain. They consist of interconnected units called neurons that process information and learn from data. Neural networks are widely used in artificial intelligence applications, including computer vision, natural language processing, and speech recognition.

However, implementing neural networks on conventional hardware platforms, such as CPUs and GPUs, poses several challenges. These general-purpose platforms were not designed specifically for the massive parallelism, high memory bandwidth, and low power consumption that neural networks demand. They are also constrained by the von Neumann bottleneck: because processing and memory are separate units, the gap between processing speed and memory access speed often leaves the processor waiting for data.
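The von Neumann bottleneck can be made concrete with a back-of-the-envelope roofline estimate: compare how many operations a layer performs per byte it moves from memory with how many operations per byte the hardware can sustain. The sketch below does this for a fully connected layer; the layer sizes, the 4-byte values, and the hypothetical processor (100 TFLOP/s peak, 1 TB/s memory bandwidth) are illustrative assumptions, not figures for any real chip.

```python
# Back-of-the-envelope roofline estimate for a fully connected layer y = W @ x.
# All sizes and hardware figures are hypothetical, chosen only for illustration.

def fc_arithmetic_intensity(n_in: int, n_out: int, batch: int,
                            bytes_per_value: int = 4) -> float:
    """FLOPs performed per byte moved between memory and the processor.

    Idealized: assumes weights, inputs, and outputs each cross the memory
    bus exactly once, which is a lower bound on real traffic.
    """
    flops = 2 * n_in * n_out * batch                       # one multiply + one add per weight per sample
    values_moved = n_in * n_out + batch * (n_in + n_out)   # weights + inputs + outputs
    return flops / (values_moved * bytes_per_value)


def attainable_flops(intensity: float, peak_flops: float, bandwidth: float) -> float:
    """Roofline model: performance is capped by compute or by memory bandwidth."""
    return min(peak_flops, intensity * bandwidth)


PEAK_FLOPS = 100e12   # hypothetical 100 TFLOP/s processor
BANDWIDTH = 1e12      # hypothetical 1 TB/s memory bandwidth

for batch in (1, 256):
    ai = fc_arithmetic_intensity(1024, 1024, batch)
    perf = attainable_flops(ai, PEAK_FLOPS, BANDWIDTH)
    print(f"batch={batch:4d}  intensity={ai:6.2f} FLOP/byte  "
          f"attainable={perf / 1e12:6.2f} TFLOP/s ({100 * perf / PEAK_FLOPS:.1f}% of peak)")
```

Under these assumed figures, a single input vector keeps the processor at roughly 0.5% of its peak because it spends most of its time waiting for weights to arrive, while a batch of 256 reuses each weight 256 times and reaches about 85% of peak.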

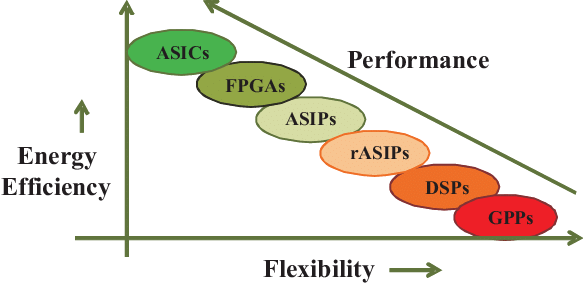

To overcome these limitations, researchers have been exploring alternative hardware architectures that can accelerate and optimize neural network workloads. These include field-programmable gate arrays (FPGAs), application-specific integrated circuits (ASICs), coarse-grained reconfigurable arrays (CGRAs), and neuromorphic chips. In this article, we provide a brief overview of these architectures, their advantages and disadvantages, and their current and potential applications.


Analogy: Building a House

Before delving into the specifics of each architecture, let’s draw an analogy to simplify the differences and trade-offs among them. Envision building a house with four options:

Ready-Made House (CPU/GPU): Buying a house from a developer is like using CPUs and GPUs, which are general-purpose, pre-fabricated hardware. They offer quick implementation, but customization and optimization are limited, much as you must accept the house as is, even if it does not meet your specific needs.

Contractor-Built House (ASIC): Hiring a contractor to build a customized house resembles using ASICs, dedicated hardware for specific applications. While tailored to needs, ASICs require substantial investment and time, limiting post-fabrication modifications.

Building Blocks Kit (FPGA): Assembling a house from building blocks is akin to using FPGAs, which are reconfigurable and programmable. This offers flexibility but demands skill and may not match the efficiency of a contractor-built house.

Growing a House (Neuromorphic Chip): Growing a house from a seed, inspired by natural processes, parallels using neuromorphic chips. While providing scalability and sustainability, understanding and predicting outcomes pose challenges.

Each option has its pros and cons, and the choice depends on goals, constraints, and preferences. Similarly, selecting a hardware architecture for a neural network depends on the model, the application, and its requirements.


FPGAs: Flexible and Customizable

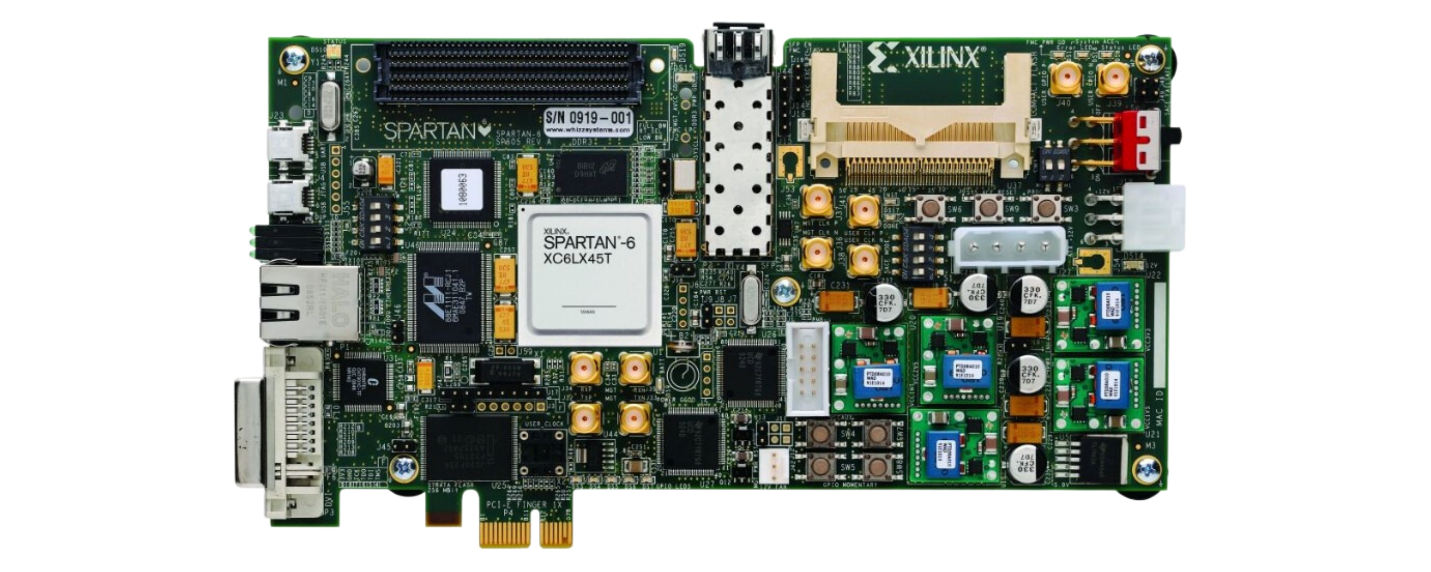

Field-Programmable Gate Arrays (FPGAs) are integrated circuits that users can configure after manufacturing to implement almost any digital logic function. An FPGA consists of an array of logic blocks that can be configured to perform fundamental operations such as addition, multiplication, and comparison. Programmable interconnects link these logic blocks, enabling data to flow between them.
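FPGA logic blocks are typically built around small lookup tables (LUTs): a k-input LUT stores one output bit for every combination of its inputs, so "programming" it means filling in a truth table. The Python sketch below models that idea and configures two 3-input LUTs as a one-bit full adder, the building block of addition. It is a conceptual model only; the function names are hypothetical, and real FPGA configuration data and toolchains are far more involved.

```python
from itertools import product
from typing import Callable, List, Sequence


def configure_lut(func: Callable[..., int], n_inputs: int) -> List[int]:
    """'Program' a LUT: store func's output bit for every input combination.

    Entries are ordered so the first input is the most significant address bit.
    """
    return [func(*bits) & 1 for bits in product((0, 1), repeat=n_inputs)]


def evaluate_lut(table: Sequence[int], inputs: Sequence[int]) -> int:
    """Evaluate the LUT: the input bits form an address into the stored table."""
    address = 0
    for bit in inputs:
        address = (address << 1) | bit
    return table[address]


# Two 3-input LUTs configured as a one-bit full adder (sum and carry-out).
sum_lut = configure_lut(lambda a, b, cin: a ^ b ^ cin, 3)
carry_lut = configure_lut(lambda a, b, cin: (a & b) | (cin & (a ^ b)), 3)

print("sum table:  ", sum_lut)    # the 8 configuration bits for the sum LUT
print("carry table:", carry_lut)

a, b, cin = 1, 1, 0
print(evaluate_lut(sum_lut, (a, b, cin)), evaluate_lut(carry_lut, (a, b, cin)))  # 1 + 1 + 0 -> sum 0, carry 1
```

Changing the stored bits, not the hardware, changes the function, which is the essence of FPGA reconfigurability.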

FPGAs are attractive for neural network implementation because they offer high flexibility and customizability. Unlike CPUs and GPUs, which have fixed architectures and instruction sets, FPGAs can be tailored to the specific needs and characteristics of a neural network.

For example, FPGAs can support different data types, such as fixed-point or floating-point, and different precision levels, such as 8-bit or 16-bit. FPGAs can also exploit the sparsity and redundancy of a neural network to reduce computation and memory requirements.
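Choosing a precision level usually means converting floating-point weights to fixed-point integers. The sketch below shows one common scheme, symmetric per-tensor quantization, at 8 and 16 bits; the example weights are made up, and real FPGA design flows often use more elaborate schemes (per-channel scales, zero points, or mixed precision).

```python
from typing import List, Tuple


def quantize_symmetric(values: List[float], bits: int) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of floats to signed `bits`-bit integers."""
    qmax = 2 ** (bits - 1) - 1                 # 127 for 8-bit, 32767 for 16-bit
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map the integers back to approximate real values."""
    return [x * scale for x in q]


weights = [0.8231, -0.4107, 0.0529, -1.2704, 0.3316]   # made-up example weights

for bits in (8, 16):
    q, scale = quantize_symmetric(weights, bits)
    restored = dequantize(q, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print(f"{bits}-bit: ints={q}  scale={scale:.3g}  max error={max_err:.2e}")
```

The 16-bit version is much more precise, while the 8-bit version halves storage and needs smaller multipliers; on an FPGA, that trade-off can be tuned to the network rather than fixed by the hardware.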

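FPGAs have been used to implement various types of neural networks, such as convolutional neural networks (CNNs), recurrent neural networks (RNNs), and spiking neural networks (SNNs). Some examples of FPGA-based neural network accelerators are FINN and ESE. SpiNNaker, which is sometimes listed alongside them, is actually a massively parallel neuromorphic platform built from custom chips with ARM processor cores rather than FPGAs.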

However, FPGAs also have some drawbacks. The first is design complexity: implementing a neural network on an FPGA requires low-level programming skills and hardware knowledge, which are uncommon among neural network developers.

FPGAs have limited resources, such as logic blocks, memory, and power, which constrain the scalability and efficiency of the neural network.

FPGAs also deliver lower performance than ASICs, which are chips dedicated to a specific application, and at high production volumes they cost more per chip.
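Whether an FPGA or an ASIC is cheaper depends heavily on production volume: an ASIC carries a large up-front non-recurring engineering (NRE) cost but a lower cost per chip. The sketch below computes the break-even volume; all dollar figures are hypothetical placeholders chosen only to show the arithmetic, not real prices.

```python
import math


def total_cost(units: int, nre: float, unit_cost: float) -> float:
    """Up-front (non-recurring engineering) cost plus per-chip cost."""
    return nre + units * unit_cost


def break_even_units(asic_nre: float, asic_unit: float,
                     fpga_unit: float, fpga_nre: float = 0.0) -> int:
    """Smallest volume at which the ASIC is no more expensive in total than the FPGA."""
    if fpga_unit <= asic_unit:
        raise ValueError("the ASIC never breaks even unless its unit cost is lower")
    return max(0, math.ceil((asic_nre - fpga_nre) / (fpga_unit - asic_unit)))


# Hypothetical figures, for illustration only.
ASIC_NRE, ASIC_UNIT, FPGA_UNIT = 20_000_000.0, 30.0, 500.0

n = break_even_units(ASIC_NRE, ASIC_UNIT, FPGA_UNIT)
print(n)  # 42554
for units in (1_000, n, 1_000_000):
    asic = total_cost(units, ASIC_NRE, ASIC_UNIT)
    fpga = total_cost(units, 0.0, FPGA_UNIT)
    print(f"{units:>9,} units: ASIC ${asic:,.0f} vs FPGA ${fpga:,.0f}")
```

Below the break-even volume the FPGA is cheaper overall; above it, the ASIC's lower unit cost wins.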


ASICs: Fast and Efficient

Application-Specific Integrated Circuits (ASICs) are integrated circuits designed and fabricated for a specific purpose. They are optimized for performance, power consumption, and size in the target application. In this respect, ASICs are the opposite of FPGAs, which are general-purpose and reconfigurable.

ASICs are ideal for neural network implementation because they can exploit the inherent parallelism, regularity, and locality of the neural network.

ASICs can implement specialized architectures and circuits tailored to neural network operations, such as matrix multiplication, convolution, and activation functions.
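A widely used specialized architecture for matrix multiplication, employed in Google's TPU among others, is the systolic array: a grid of multiply-accumulate units through which operands flow in lockstep, so each value fetched from memory is reused by many units. The cycle-level Python model below uses an output-stationary dataflow (each unit keeps one output element); that dataflow choice and the function name are illustrative assumptions, not the design of any specific chip.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def systolic_matmul(A: Matrix, B: Matrix) -> Tuple[Matrix, int]:
    """Cycle-by-cycle model of an n x m output-stationary systolic array computing A @ B.

    PE(i, j) holds the running sum for C[i][j]. Row i of A enters from the left
    delayed by i cycles; column j of B enters from the top delayed by j cycles,
    so matching operands A[i][k] and B[k][j] meet in PE(i, j) at cycle i + j + k.
    """
    n, depth, m = len(A), len(B), len(B[0])
    assert all(len(row) == depth for row in A), "inner dimensions must match"

    acc = [[0.0] * m for _ in range(n)]     # one accumulator per PE (output-stationary)
    a_reg = [[0.0] * m for _ in range(n)]   # value each PE passes to its right neighbour
    b_reg = [[0.0] * m for _ in range(n)]   # value each PE passes to its lower neighbour
    cycles = n + m + depth - 2              # last operands meet at cycle (n-1)+(m-1)+(depth-1)

    for t in range(cycles):
        new_a = [[0.0] * m for _ in range(n)]
        new_b = [[0.0] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                if j == 0:                      # left edge: feed the skewed row of A
                    k = t - i
                    new_a[i][j] = A[i][k] if 0 <= k < depth else 0.0
                else:                           # otherwise take the left neighbour's value
                    new_a[i][j] = a_reg[i][j - 1]
                if i == 0:                      # top edge: feed the skewed column of B
                    k = t - j
                    new_b[i][j] = B[k][j] if 0 <= k < depth else 0.0
                else:                           # otherwise take the upper neighbour's value
                    new_b[i][j] = b_reg[i - 1][j]
        a_reg, b_reg = new_a, new_b             # all registers update together, as on a clock edge
        for i in range(n):
            for j in range(m):
                acc[i][j] += a_reg[i][j] * b_reg[i][j]   # one multiply-accumulate per PE per cycle
    return acc, cycles


C, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(C, cycles)  # [[19.0, 22.0], [43.0, 50.0]] 4
```

Each operand is read from memory once at the array's edge and then passed between neighbours, which is how this style of ASIC cuts memory traffic for matrix multiplication.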

ASICs can also support various optimization techniques, such as quantization, pruning, and compression, to reduce the computation and memory overhead of the neural network.
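Pruning only saves work if the hardware can skip the zeros it creates. The sketch below applies simple magnitude pruning, stores the result in compressed sparse row (CSR) format, and counts the multiplications a sparse matrix-vector product actually performs. The threshold, the weights, and the helper names are hypothetical; accelerators use a variety of sparse formats and pruning criteria.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def prune_by_magnitude(weights: Matrix, threshold: float) -> Matrix:
    """Zero out every weight whose absolute value is below `threshold`."""
    return [[w if abs(w) >= threshold else 0.0 for w in row] for row in weights]


def to_csr(matrix: Matrix) -> Tuple[List[float], List[int], List[int]]:
    """Compressed sparse row format: store only nonzeros plus their column indices."""
    values, col_idx, row_ptr = [], [], [0]
    for row in matrix:
        for j, w in enumerate(row):
            if w != 0.0:
                values.append(w)
                col_idx.append(j)
        row_ptr.append(len(values))          # where the next row's nonzeros start
    return values, col_idx, row_ptr


def csr_matvec(values: List[float], col_idx: List[int], row_ptr: List[int],
               x: List[float]) -> Tuple[List[float], int]:
    """Sparse matrix-vector product that never touches pruned (zero) weights."""
    y, multiplies = [], 0
    for r in range(len(row_ptr) - 1):
        acc = 0.0
        for p in range(row_ptr[r], row_ptr[r + 1]):
            acc += values[p] * x[col_idx[p]]
            multiplies += 1
        y.append(acc)
    return y, multiplies


W = [[0.9, -0.02, 0.0, 0.4],
     [0.01, -0.7, 0.05, 0.0],
     [0.3, 0.0, -0.03, 0.8]]                  # made-up weights
x = [1.0, 2.0, 3.0, 4.0]

sparse = to_csr(prune_by_magnitude(W, threshold=0.1))
y, mults = csr_matvec(*sparse, x)
print(y, f"{mults} multiplies instead of {len(W) * len(W[0])}")
```

Here pruning removes 7 of 12 weights, and the sparse product performs 5 multiplications instead of 12, at the cost of storing column indices alongside the values.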

ASICs have been used to implement various types of neural networks, such as CNNs, RNNs, and SNNs. Some examples of ASIC-based neural network accelerators are Google TPU, NVIDIA DLA, and Intel Loihi.

However, ASICs also have some drawbacks.

ASICs require a large investment and a long development cycle, which makes them less adaptable to fast-changing neural network models and applications. They also offer limited reusability and upgradability: once a chip is fabricated, modifying or improving it is difficult. Neuromorphic chips, which draw inspiration from the biological brain, aim to outperform ASICs in power consumption and heat dissipation.

Neuromorphic Chips: Bio-Inspired and Energy-Efficient

Neuromorphic chips are integrated circuits designed to emulate the structure and function of the biological brain. They consist of arrays of artificial neurons and synapses that process and store information in a distributed, parallel manner. Neuromorphic chips use analog circuits, digital circuits, or a mix of both to implement the neural dynamics and learning mechanisms of the brain.
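One of the simplest neural dynamics that neuromorphic hardware implements is the leaky integrate-and-fire (LIF) neuron: its membrane potential integrates input, leaks back toward rest, and emits a spike when it crosses a threshold. The sketch below simulates one LIF neuron with forward Euler steps; the parameter values are arbitrary illustrative choices, not those of any particular chip.

```python
from typing import List, Sequence, Tuple


def simulate_lif(input_drive: Sequence[float], dt: float = 1.0, tau: float = 20.0,
                 v_rest: float = 0.0, v_threshold: float = 1.0,
                 v_reset: float = 0.0) -> Tuple[List[int], List[float]]:
    """Leaky integrate-and-fire neuron, integrated with forward Euler steps.

    dv/dt = (-(v - v_rest) + input) / tau ; when v reaches v_threshold the neuron
    emits a spike and v is reset. `input_drive` is expressed in voltage units
    (input current times membrane resistance) to keep the sketch unitless.
    """
    v = v_rest
    spike_times: List[int] = []
    trace: List[float] = []
    for t, drive in enumerate(input_drive):
        v += dt * (-(v - v_rest) + drive) / tau   # leak toward rest, integrate input
        if v >= v_threshold:                      # threshold crossed: fire and reset
            spike_times.append(t)
            v = v_reset
        trace.append(v)
    return spike_times, trace


strong, _ = simulate_lif([1.5] * 100)   # steady input above threshold: regular spiking
weak, _ = simulate_lif([0.5] * 100)     # input too weak: v settles below threshold, no spikes
print("strong input spikes at", strong)
print("weak input spikes at", weak)
```

Information is carried by when and how often spikes occur rather than by continuous activation values, which is what neuromorphic circuits are built to process.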

Neuromorphic chips are promising for neural network implementation because they can achieve high energy efficiency and scalability.

Unlike CPUs, GPUs, and most FPGA and ASIC designs, which keep processing and memory separate in the von Neumann style, neuromorphic chips use a non-von Neumann architecture that places memory (the synapses) alongside processing (the neurons). This reduces data movement and communication overhead, which are major sources of power consumption and performance degradation in conventional hardware platforms.
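Many neuromorphic chips also reduce data movement by being event-driven: a neuron triggers work and communication only when it spikes, so with sparse activity far fewer operations occur than in a dense layer that processes every synapse at every step. The sketch below counts the work in both cases; the weights, the spike pattern, and the function name are made up for illustration, and real chips differ widely in how they route and accumulate spikes.

```python
from typing import List, Sequence, Set, Tuple


def event_driven_layer(spike_trains: Sequence[Set[int]],
                       weights: List[List[float]]) -> Tuple[List[List[float]], int]:
    """Accumulate synaptic input only for presynaptic neurons that actually spiked.

    spike_trains[t] is the set of presynaptic neuron indices that fired at step t.
    Spikes are binary, so each synaptic event is an addition, not a multiplication.
    """
    n_post = len(weights[0])
    ops = 0
    per_step: List[List[float]] = []
    for spiking in spike_trains:
        current = [0.0] * n_post
        for i in spiking:                  # silent neurons cost nothing
            for j, w in enumerate(weights[i]):
                current[j] += w
                ops += 1
        per_step.append(current)
    return per_step, ops


weights = [[0.2, -0.1, 0.5],
           [0.0, 0.3, 0.1],
           [0.4, 0.4, -0.2],
           [-0.3, 0.1, 0.6]]               # made-up 4 -> 3 synaptic weights
spikes = [{0}, set(), {2, 3}, set(), {1}]  # made-up, sparse spike activity over 5 steps

currents, event_ops = event_driven_layer(spikes, weights)
dense_ops = len(spikes) * len(weights) * len(weights[0])   # every synapse, every step
print(f"event-driven: {event_ops} additions; dense: {dense_ops} multiply-accumulates")
```

With four spikes across five steps, the event-driven layer does 12 additions where a dense layer would do 60 multiply-accumulates; the sparser the activity, the larger the saving.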

Neuromorphic chips can be combined into large-scale systems with millions of neurons and billions of synapses, approaching, though still well below, the scale of the human brain, which has roughly 86 billion neurons.

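Neuromorphic chips are used mainly to run spiking neural networks (SNNs), although conventional networks such as CNNs can often be converted into spiking form to run on them. Some examples of neuromorphic chips are IBM TrueNorth and BrainChip Akida. NeuroSim, which sometimes appears in such lists, is a simulation framework for evaluating compute-in-memory accelerators rather than a physical chip.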

However, neuromorphic chips also have some drawbacks. The first is design complexity: the neuroscience and engineering communities do not yet fully understand or model the brain and its computational principles, which neuromorphic chips aim to emulate.

Neuromorphic chips can also have lower accuracy and reliability than conventional hardware platforms, which perform deterministic, precise computation; analog designs in particular are affected by noise and device variation.

Neuromorphic chips have limited compatibility and interoperability with existing neural network frameworks and tools, which were built for conventional von Neumann hardware.

Conclusion

Neural networks are powerful and versatile computational models that can solve a wide range of artificial intelligence problems. However, implementing them on conventional hardware platforms, such as CPUs and GPUs, is often inefficient. Researchers have therefore been exploring alternative hardware architectures that can accelerate and optimize neural network performance.

These architectures include FPGAs, ASICs, CGRAs, and neuromorphic chips, each with its own advantages and disadvantages. In this article, we have provided a brief overview of these architectures, their features, and their applications. We hope it has given you some insight and inspiration on how to build a brain using hardware. Thank you for reading.