Introduction

Chatbots are becoming more popular and powerful as a way to interact with customers, provide information, and answer questions. But how can you ensure that your chatbot is giving accurate and reliable answers, and not just making things up or repeating outdated facts? This is where RAG comes in.

RAG, or Retrieval-Augmented Generation, is a technique that pairs a pre-trained large language model (LLM) with an external data source. It combines the generative power of LLMs like GPT-3 or GPT-4 with the precision of specialized search over that data, resulting in a system whose responses are grounded in retrieved facts rather than in the model's memory alone.

What is a Large Language Model?

A large language model (LLM) is an artificial intelligence (AI) system that can generate natural language text for various tasks, such as answering questions and translating languages. LLMs are trained on vast volumes of data and use billions of parameters to learn the statistical patterns of language.

However, LLMs have some limitations. For example, they may:

- Present false information when they do not have the answer.

- Present out-of-date or generic information when the user expects a specific, current response.

- Create a response from non-authoritative sources.

You can think of the LLM as an over-enthusiastic new employee who refuses to stay informed about current events but answers every question with absolute confidence. Unfortunately, that attitude can erode user trust and is not something you want your chatbot to emulate!

What is Retrieval-Augmented Generation?

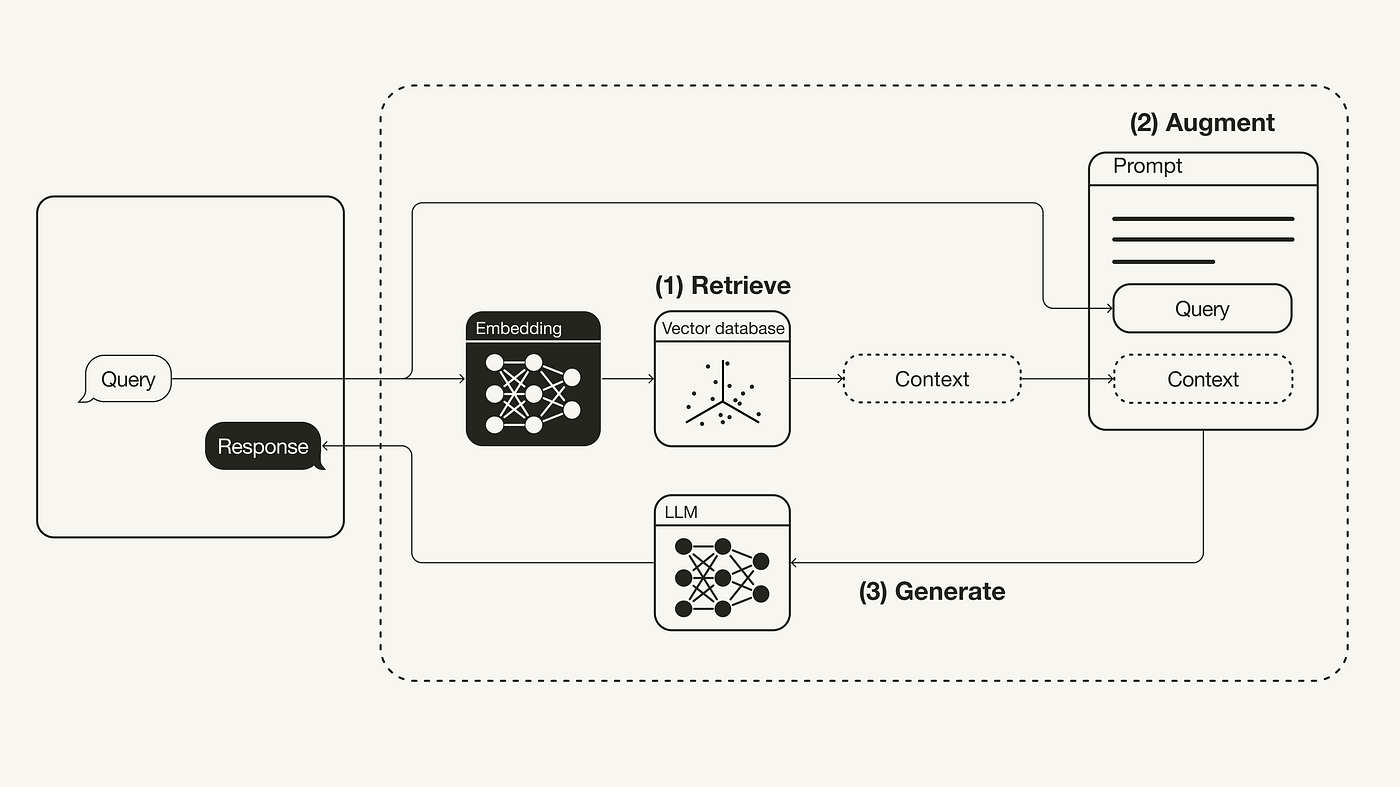

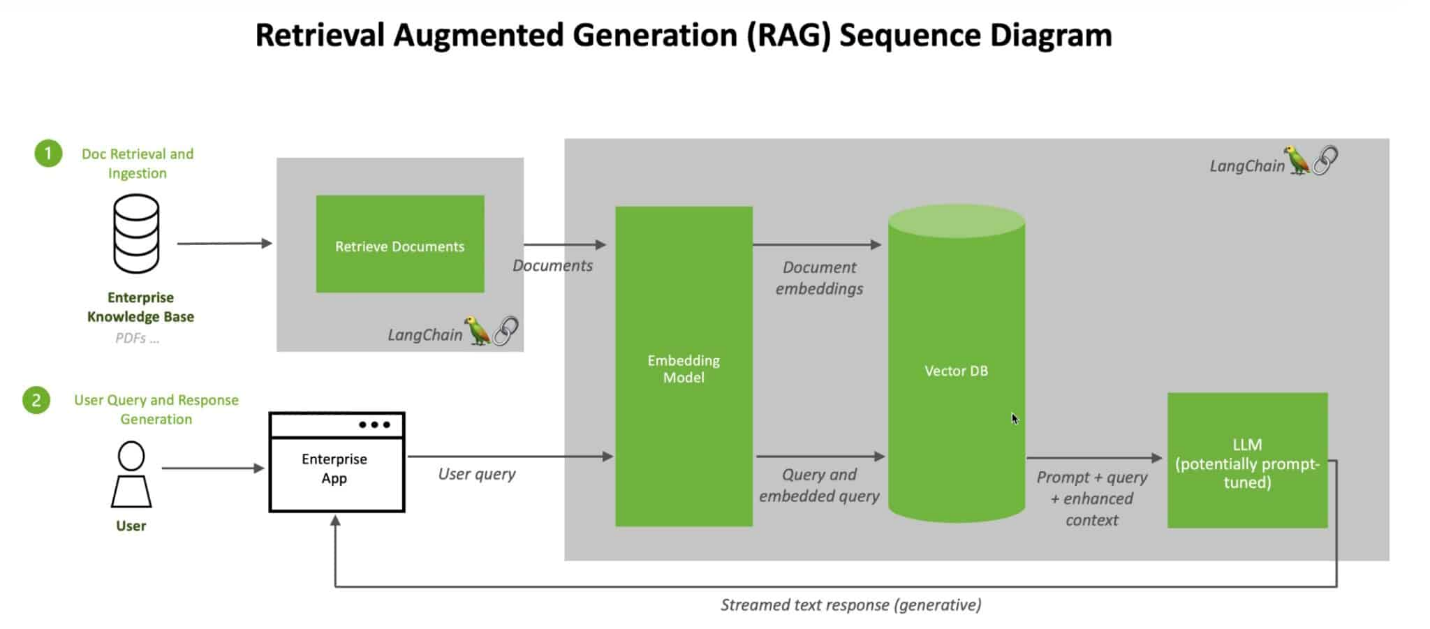

Retrieval-Augmented Generation (RAG) is a technique for enhancing the accuracy and reliability of LLM-generated responses with facts fetched from external sources. In other words, it fills a gap in how LLMs work: on its own, a model can only draw on what it saw during training.

RAG allows LLMs to build on a specialized body of knowledge to answer questions more accurately. It is the difference between an open-book and a closed-book exam. In a RAG system, you are asking the model to answer a question by looking through the content of a book, as opposed to trying to recall facts from memory.

RAG works by retrieving relevant information from an authoritative knowledge base outside of the LLM's training data before generating a response. The knowledge base can be a collection of documents, such as Wikipedia articles, news articles, or research papers, that are related to the domain of the question. The retrieved information is then passed to the LLM along with the question, guiding it toward a more informed and grounded response.
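
The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the tiny in-memory `KNOWLEDGE_BASE`, the bag-of-words scoring in `score`, and the helper names `tokenize`, `retrieve`, and `build_prompt` are all invented for this example. A real system would more likely use embedding-based vector search, and it would send the resulting prompt to an LLM, a step that is left out here.

```python
import re
from collections import Counter
from math import sqrt

# Hypothetical knowledge base; in practice this would be your own documents.
KNOWLEDGE_BASE = [
    "The return window for online orders is 30 days from delivery.",
    "Standard shipping takes 3 to 5 business days.",
    "Gift cards cannot be exchanged for cash.",
]

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def score(query, document):
    """Cosine similarity between word counts (a simple stand-in for embeddings)."""
    q, d = Counter(tokenize(query)), Counter(tokenize(document))
    dot = sum(q[w] * d[w] for w in q)
    norm = sqrt(sum(v * v for v in q.values())) * sqrt(sum(v * v for v in d.values()))
    return dot / norm if norm else 0.0

def retrieve(query, documents, top_k=2):
    """Return the top_k documents most similar to the query."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:top_k]

def build_prompt(query, passages):
    """Augment the user's question with the retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}\n"
    )

question = "How many days do I have to return an online order?"
passages = retrieve(question, KNOWLEDGE_BASE, top_k=1)
prompt = build_prompt(question, passages)
print(prompt)  # this augmented prompt is what would be sent to the LLM
```

The key design point is that the model never has to "remember" the return policy: the relevant passage is looked up at question time and placed directly in the prompt, which is the open-book exam in practice.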

Benefits of Retrieval-Augmented Generation

RAG technology brings several benefits to your chatbot development and deployment. For example, it can help you:

- Improve the quality of your chatbot’s responses by ensuring that they are based on the most current and reliable facts.

- Increase the user’s trust and satisfaction by providing them with the sources of your chatbot’s answers.

- Reduce the need for continuous training and updating of your LLM, as RAG can dynamically access external knowledge sources as they evolve.

- Lower the computational and financial costs of running your LLM-powered chatbot, as RAG can leverage existing knowledge bases without the need to retrain the model.
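
Two of the benefits above, citing sources and picking up new facts without retraining, can be illustrated with a small sketch. Everything in it is hypothetical: the `KnowledgeBase` class, its `add` and `search` methods, the `format_sources` helper, and the document names are invented for illustration, and the simple shared-word ranking stands in for a real search index.

```python
import re

def words(text):
    """Return the set of lowercase word tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

class KnowledgeBase:
    """Hypothetical in-memory document store keyed by source ID."""

    def __init__(self):
        self.documents = {}  # source_id -> text

    def add(self, source_id, text):
        """Add or replace a document; no model retraining is involved."""
        self.documents[source_id] = text

    def search(self, query, top_k=1):
        """Rank documents by shared words and return (source_id, text) pairs."""
        query_words = words(query)

        def overlap(item):
            return len(query_words & words(item[1]))

        ranked = sorted(self.documents.items(), key=overlap, reverse=True)
        # Drop documents that share no words with the query at all.
        return [item for item in ranked[:top_k] if overlap(item) > 0]

def format_sources(hits):
    """Build a citation line to show alongside the chatbot's answer."""
    return "Sources: " + ", ".join(source_id for source_id, _ in hits)

kb = KnowledgeBase()
kb.add("shipping.md", "Standard shipping takes 3 to 5 business days.")
kb.add("pricing.md", "The Pro plan costs 20 dollars per month.")

question = "How much does the Pro plan cost per month?"
before = kb.search(question)

# The price changes: update the document and the next lookup sees the new fact.
kb.add("pricing.md", "The Pro plan costs 25 dollars per month.")
after = kb.search(question)

print(after[0][1])
print(format_sources(after))
```

Because the retrieved passages carry their source IDs, the chatbot can show users where an answer came from, and updating a fact is a document edit rather than a training run.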

How to Implement Retrieval-Augmented Generation?

If you are interested in implementing RAG for your chatbot, you have several options to choose from. One of them is to use watsonx, a new AI and data platform from IBM that offers RAG as one of its features. watsonx allows you to easily integrate RAG with your existing LLM and knowledge base, and provides you with tools to monitor and improve your chatbot’s performance.

Another option is to use NVIDIA’s RAG framework, which is based on the original RAG paper by Meta (formerly Facebook). NVIDIA’s RAG framework is compatible with PyTorch and Hugging Face Transformers, and supports various LLMs and knowledge bases. You can also customize your RAG system by using different retrieval and generation strategies.

Conclusion

RAG is a powerful technique for enhancing the capabilities of LLMs and improving the quality of chatbot responses. By grounding the LLM in external sources of knowledge, RAG helps your chatbot give accurate and reliable answers that your users can trust and verify. RAG can also reduce the need for retraining and updating your LLM, and lower the costs of running your chatbot.