Introduction

In a move that underscores how quickly artificial intelligence (AI) technology is evolving, chip startup Ampere Computing has teamed up with mobile chipmaker Qualcomm.

Joint AI Platform: The companies will pair Ampere's Altra CPUs with Qualcomm's Cloud AI 100 Ultra inference cards so that large AI models can be run efficiently in the cloud.

Focus on Lower Power Consumption: Both companies are known for energy-efficient chips, and through this collaboration they aim to further reduce the power needed to run AI workloads. For businesses, lower power draw could translate into significant cost savings.

Cloud-Based Solution: Their initial offering will target cloud data centers.

Room for Future Development: The two companies see this as the first step in a broader collaboration. They plan to develop more advanced AI solutions in the future.

The collaboration aims to raise AI performance while reducing power consumption, which would mark a significant step forward for the industry.

Announced on Friday, this partnership will leverage Arm technology to develop a next-generation AI chip, emphasizing energy efficiency and advanced processing capabilities.

Ampere Computing and Arm Technology

Ampere Computing builds its server processors on Arm technology.

The Arm architecture, developed by Arm Holdings plc, is a reduced instruction set computing (RISC) architecture known for balancing energy efficiency and performance.

Because they combine low power consumption with solid performance, Arm-based processors suit a wide range of applications, including AI workloads.

Qualcomm’s Expansion into AI

Although Ampere's processors can handle many general-purpose cloud instances, their AI capabilities are fairly limited. The company claims that its 128-core AmpereOne CPU, which has two 128-bit vector units per core and supports the INT8, INT16, FP16, and BFloat16 formats, can deliver performance comparable to Nvidia's A10 GPU while consuming less power. To compete with Nvidia's A100, H100, or B100/B200, however, Ampere needs a stronger solution.

To address this, Ampere has partnered with Qualcomm on platforms for large language model (LLM) inference that combine Ampere's CPUs with Qualcomm's Cloud AI 100 Ultra accelerators. The companies have not announced when the platform will be available, but the collaboration signals that Ampere's ambitions extend beyond general-purpose computing.
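To make the AmpereOne vector-unit figures quoted in this section more concrete, the back-of-envelope sketch below counts how many data elements the chip's vector hardware can hold at once in each supported format. It assumes, for illustration only, that each 128-bit unit works on one full vector per operation. The helper names `lanes_per_vector` and `lanes_per_chip` are hypothetical, and the sketch ignores clock speed, instruction mix, and memory bandwidth, so it says nothing about real throughput.

```python
# Back-of-envelope SIMD lane arithmetic for the AmpereOne figures quoted above:
# 128 cores, two 128-bit vector units per core.
# Assumption (illustrative only): each unit operates on one full 128-bit vector
# per operation. Real throughput also depends on clocks, instruction mix, and memory.

VECTOR_BITS = 128
UNITS_PER_CORE = 2
CORES = 128

# Element width, in bits, of each data format Ampere lists.
FORMAT_BITS = {"INT8": 8, "INT16": 16, "FP16": 16, "BFloat16": 16}


def lanes_per_vector(fmt: str) -> int:
    """Number of elements of the given format that fit in one 128-bit vector."""
    return VECTOR_BITS // FORMAT_BITS[fmt]


def lanes_per_chip(fmt: str) -> int:
    """Elements covered when every vector unit on the chip handles one vector."""
    return lanes_per_vector(fmt) * UNITS_PER_CORE * CORES


if __name__ == "__main__":
    for fmt in FORMAT_BITS:
        print(f"{fmt:>8}: {lanes_per_vector(fmt):2d} lanes/vector, "
              f"{lanes_per_chip(fmt):5d} lanes/chip")
```

The pattern is simple: halving the element width doubles the number of elements per vector, which is one reason narrow formats such as INT8 are popular for AI inference on CPUs.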

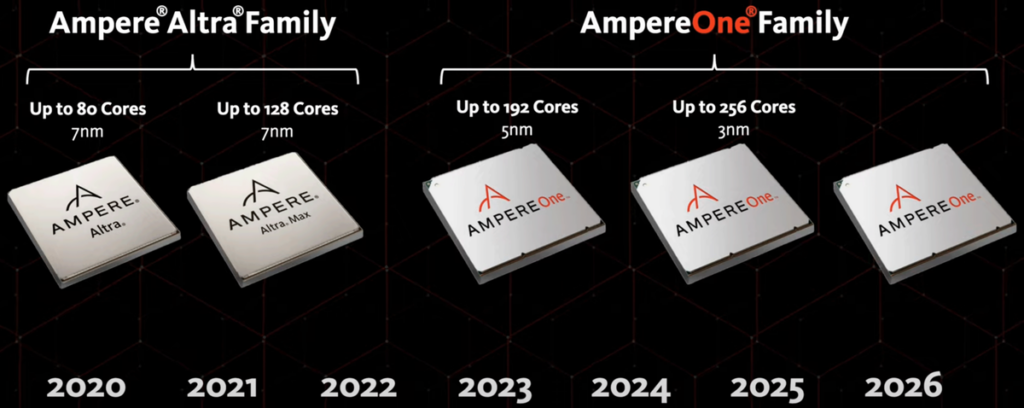

The Next-Gen AI Chip: Unveiling the 256-Core Powerhouse

The centerpiece of the announcement is a new chip with 256 processing cores, up from 192 cores in Ampere's previous version.

TSMC will manufacture the chip on its leading-edge 3-nanometer process, and Ampere plans to pair its CPUs with Qualcomm's Cloud AI 100 inference accelerators.

The chip highlights growing interest among businesses in capitalizing on the AI boom with stronger server-level AI solutions.

We are extending our product family to include a new 256-core product that delivers 40% more performance than any other CPU in the market. It is not just about cores; it is about what you can do with the platform. We have several new features that enable efficient performance, memory, caching, and AI compute.

Renee James, CEO of Ampere

Future-Proofing AI Solutions

Ampere is also working on standards that would let chips from different companies be combined on a single piece of silicon.

This modular approach would let customers specify the products they want more precisely, reduce their dependence on a single supplier, and improve efficiency and performance.

Such a design could eventually place Ampere processors and Qualcomm accelerators on the same chip, yielding a versatile and powerful AI solution.

What's the big deal about this?

Several factors make this collaboration between Ampere and Qualcomm significant for the AI industry:

Energy Efficiency: Both Ampere and Qualcomm are known for power-efficient chips. By working together, they could create even more energy-efficient AI solutions, significantly reducing the cost of running AI applications in power-hungry data centers.

Focus on Large Language Models (LLMs): The joint solution specifically targets inference on large AI models, which are becoming increasingly important for natural language processing tasks such as chatbots and text summarization. This focus shows that Ampere and Qualcomm are addressing a growing need in the AI field.

Strong Players Joining Forces: Ampere is a rising player in the server chip market, and Qualcomm is a leading supplier of mobile chips. Together they bring significant expertise and resources to the AI chip space, which could increase competition and innovation and ultimately benefit customers.

Potential for Wider Applications: While the initial offering targets cloud data centers, their collaboration hints at future developments for broader AI applications. This could lead to more energy-efficient AI capabilities in various devices and services.

Conclusion

The collaboration between Ampere Computing and Qualcomm marks a significant milestone in the AI industry.

The partnership highlights growing demand for powerful, efficient AI hardware and could set the stage for further innovation in AI technology.

As businesses continue to adopt AI, advances from Ampere and Qualcomm are likely to play an important role in the next wave of AI-driven progress.