Introduction

Artificial intelligence (AI) is one of the most transformative technologies of our time. It has enabled us to achieve feats that were once considered impossible, such as beating humans at chess, recognizing faces, translating languages, and driving cars. But behind every AI system, there is a hardware component that makes it possible. In this article, we will explore the evolution of AI hardware, from the past to the present and the future, and how it has shaped the field of AI and the world.


The Evolution of AI Hardware: From Vacuum Tubes to Quantum Computing

1940s: The Early Days of AI Hardware

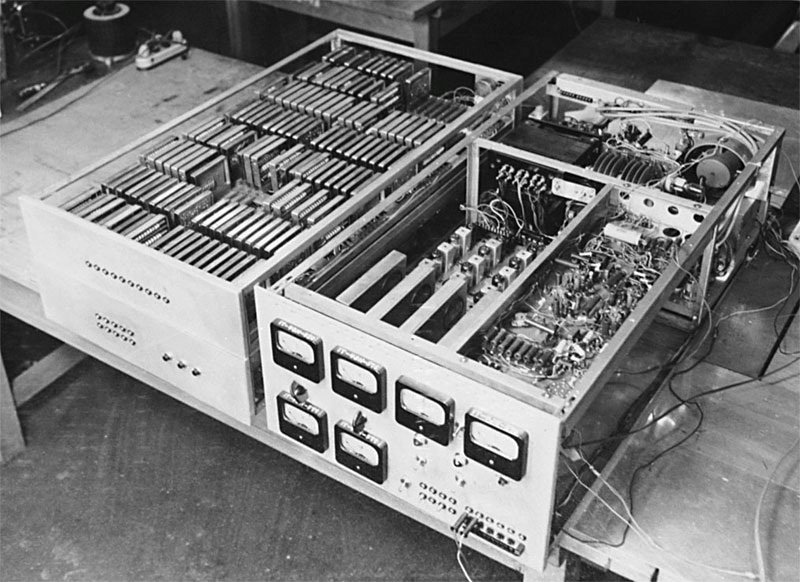

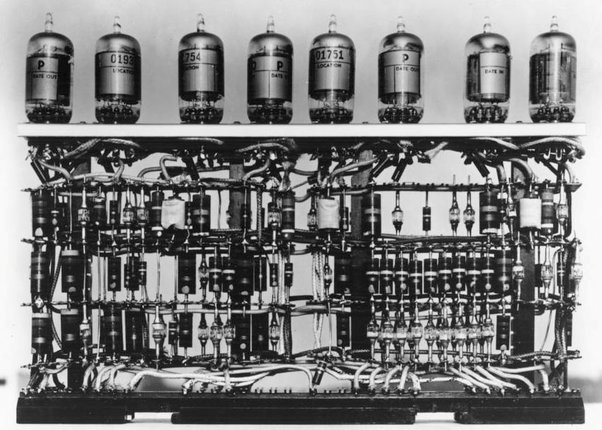

The history of AI hardware can be traced back to the history of computing itself. The first electronic digital computers were built in the 1940s, using vacuum tubes as their basic building blocks. These machines were large, expensive, and unreliable, but they paved the way for the first AI systems. An early landmark from around 1950 was Theseus, Claude Shannon's maze-solving mechanical mouse, which could find its way through a labyrinth and remember the route. Its control logic was built from electromechanical relays rather than vacuum tubes.

However, vacuum tubes had serious limitations: high power consumption, heavy heat generation, and frequent failures.


1950s: The Transistor Revolution

In the 1950s, a new technology began to revolutionize computing and AI: the transistor. Invented at Bell Labs in 1947, the transistor is a tiny switch that controls the flow of electricity, and it is much smaller, cheaper, faster, and more reliable than a vacuum tube.

Transistors enabled more powerful and compact computers. Some of the earliest AI research at MIT, by John McCarthy and Marvin Minsky, ran on the IBM 704, which was itself still a vacuum-tube machine; transistorized successors such as the IBM 7090 followed by the end of the decade.

1960s: The Rise of AI Hardware

The next major breakthrough in AI hardware was the integrated circuit (IC), invented in the late 1950s and widely adopted during the 1960s. An IC places many transistors and other components on a single chip, which allowed computers to become smaller and faster still. Early ICs held only a handful of transistors; later generations held thousands and then far more.

The decade also produced more sophisticated AI systems, such as ELIZA, a natural language processing program that could simulate a psychotherapist, and SHRDLU, a program that could manipulate and reason about objects in a virtual world of blocks.

Moore’s Law, formulated in 1965, predicted that the number of transistors on an IC would keep growing exponentially. That growth alone, however, did not solve every problem: processors that execute instructions largely one after another became a bottleneck for AI workloads that apply the same computation to large amounts of data.
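The exponential growth that Moore's Law describes can be made concrete with a little arithmetic. The sketch below is only an illustration: the two-year doubling period is the commonly quoted figure and is treated here as an assumption, the function name `projected_transistors` is hypothetical, and the baseline is the Intel 4004 of 1971 with roughly 2,300 transistors.

```python
def projected_transistors(base_count, base_year, target_year, doubling_years=2.0):
    """Project a transistor count, assuming it doubles every `doubling_years`."""
    doublings = (target_year - base_year) / doubling_years
    return base_count * 2 ** doublings


# Baseline (assumption for illustration): Intel 4004, 1971, about 2,300 transistors.
for year in (1971, 1981, 1991):
    print(year, round(projected_transistors(2300, 1971, year)))
```

Real chips did not follow this curve exactly. The point is only how quickly a fixed doubling period compounds over a few decades.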


1980s: The Parallel Processing Powerhouse

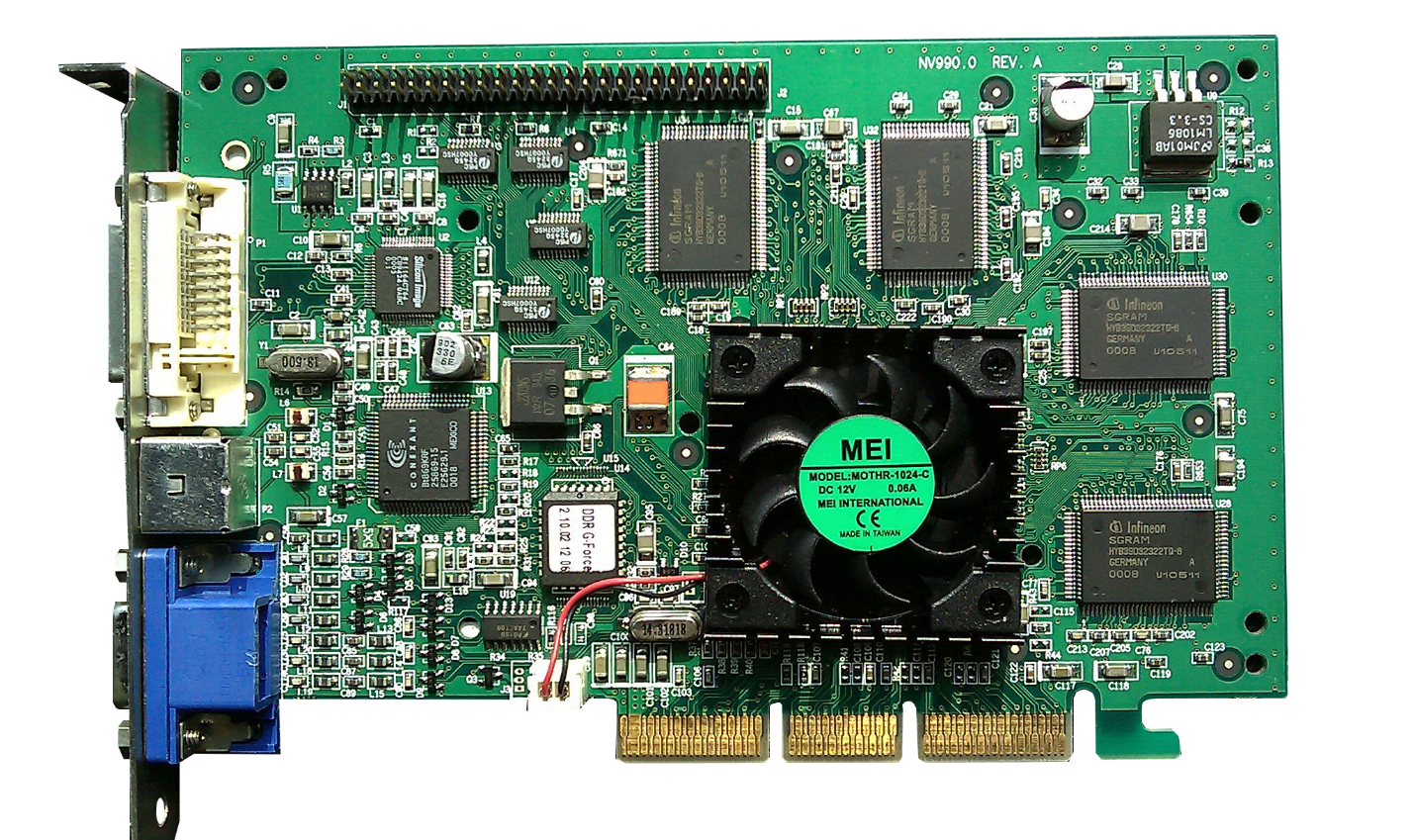

To overcome this challenge, a different kind of hardware changed the game for AI: the graphics processing unit (GPU). Dedicated graphics chips date back to the 1980s, and the term GPU became common in the late 1990s. A GPU is a specialized chip built for parallel computation, meaning that it can process many data points at the same time.

Originally designed for rendering graphics, GPUs later proved highly useful for AI applications, particularly for neural networks, a type of AI model loosely inspired by the structure and function of the human brain.
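The idea of processing many data points at the same time can be sketched in a few lines. The example below is a CPU-side analogy, not GPU code: it splits a list into chunks and hands each chunk to a separate worker that applies the same arithmetic. The function names (`scale_and_shift`, `split`, `parallel_map`) and the weight and bias values are hypothetical, chosen only for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_and_shift(chunk, weight=2.0, bias=1.0):
    """Apply the same arithmetic to every element of one chunk."""
    return [weight * x + bias for x in chunk]


def split(data, parts):
    """Split data into at most `parts` contiguous chunks of near-equal size."""
    size = max(1, -(-len(data) // parts))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_map(data, workers=4):
    """Process every chunk with the same function, one chunk per worker."""
    chunks = split(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(scale_and_shift, chunks)  # keeps chunk order
        return [y for chunk in results for y in chunk]


data = list(range(8))
sequential = scale_and_shift(data)   # one worker walks the whole list
parallel = parallel_map(data)        # four workers, two elements each
print(sequential == parallel)
```

Python threads will not make this particular example faster. What carries over to a GPU is the structure: one operation, many independent data points, and thousands of hardware lanes instead of four workers.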

GPUs enabled the resurgence of neural networks in AI research, leading to advancements in domains such as computer vision, natural language processing, and speech recognition.

The Present of AI Hardware: ASICs

Today, AI hardware is more diverse and advanced than ever before. In addition to GPUs, designers create specialized chips for specific AI tasks, like the application-specific integrated circuit (ASIC).

An ASIC is a chip customized for a specific application. One example is the Google Tensor Processing Unit (TPU), which is optimized for deep learning, a branch of machine learning that trains multi-layer neural networks on large amounts of data. For the workloads they target, ASICs can offer lower power consumption and higher speed than general-purpose chips such as CPUs and GPUs, and lower cost per unit when produced at volume.

The Future of AI Hardware: Quantum Computers

Looking to the future, the evolution of AI hardware is set to continue at an accelerated pace. With the advent of quantum computing, we are on the brink of a new era in AI hardware.

Quantum computers, which for certain classes of problems could perform calculations far faster than current technology allows, hold the potential to revolutionize AI.

Quantum computers could enable new AI algorithms, such as quantum neural networks, that tackle problems that are intractable for classical computers, in areas such as optimization, cryptography, and simulation. These advantages remain largely theoretical today, and practical, large-scale quantum hardware is still under development.

Conclusion

AI hardware has come a long way since the first digital computers. From vacuum tubes to transistors, from integrated circuits to GPUs, from ASICs to quantum computers, AI hardware has evolved in parallel with AI software, enabling the creation of more powerful and intelligent AI systems.

AI hardware has changed the world in many ways, such as enhancing our productivity, entertainment, communication, and education. But it also poses many challenges, such as ethical, social, and environmental issues, that require careful consideration and regulation.

As AI hardware continues to advance, we should be mindful of its impact and potential, and strive to use it for the benefit of humanity.
