Introduction

AI has made tremendous progress in recent years, thanks to the availability of large amounts of data, powerful computing resources, and advanced algorithms. However, most AI systems today are still limited by their narrow scope and domain-specificity. Many can handle only one type of data (such as text or images) and one type of task (such as classification or generation) at a time. This makes them brittle and unable to cope with the complexity and diversity of the real world. To overcome this limitation, researchers have been exploring a new paradigm of AI known as multimodal training.

Multimodal training enables AI systems to learn from and integrate multiple types of data (such as text, images, audio, video, and code) and multiple types of tasks (such as reasoning, planning, understanding, and creating) in a unified and coherent way. By doing so, it aims to create more general, robust, and versatile AI systems that can handle a wide range of scenarios and challenges.

In this article, we will explain what multimodal training is, how it works, what its benefits are, and what some state-of-the-art multimodal AI systems look like. We will also discuss how Gemini, which Google describes as its most capable AI model yet, uses multimodal training to achieve strong performance across a range of domains and modalities.


What is Multimodal Training Technique?

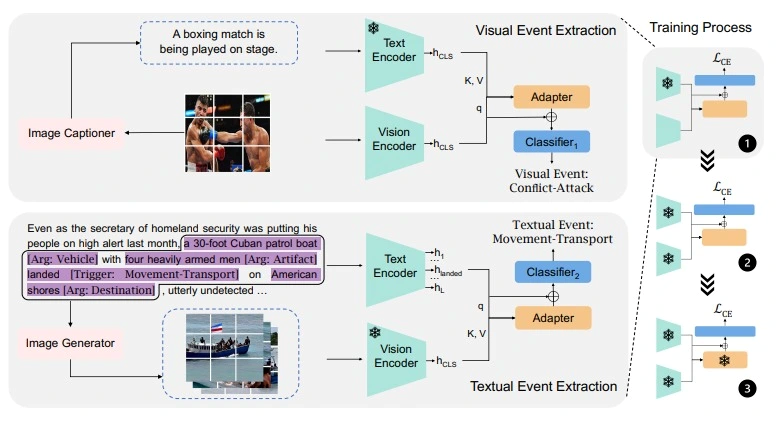

Consider a pre-training and fine-tuning framework for multimedia event extraction (the CAMEL framework, described further below). In the pre-training stage, the framework learns a multimodal representation from a large corpus of text-image pairs. It uses a transformer-based architecture, with separate encoders for text and images, and a contrastive loss function to align the representations. It also uses a data augmentation technique called cross-modal augmentation, which generates synthetic text-image pairs by replacing or adding text or image segments taken from other pairs. This helps increase the diversity and robustness of the multimodal representation.
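The contrastive alignment step can be illustrated with one common form of contrastive loss, the symmetric InfoNCE objective popularized by CLIP. The following is a minimal, dependency-free sketch, assuming each text and image has already been encoded into a vector; the function names and the temperature of 0.07 are illustrative choices, not details of the framework described here.

```python
import math

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def normalize(v):
    """Scale a vector to unit length so that dot products become cosine similarities."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def cross_entropy(logits, target):
    """Negative log-probability of the target index under a softmax over logits."""
    m = max(logits)  # subtract the max before exponentiating, for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]

def contrastive_loss(text_embs, image_embs, temperature=0.07):
    """Symmetric InfoNCE loss over a batch of paired embeddings.

    text_embs[i] and image_embs[i] form the positive pair; every other
    text-image combination in the batch serves as a negative.
    """
    texts = [normalize(t) for t in text_embs]
    images = [normalize(im) for im in image_embs]
    n = len(texts)
    # logits[i][j] is the scaled similarity between text i and image j
    logits = [[dot(t, im) / temperature for im in images] for t in texts]
    text_to_image = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Reading the matrix column-wise scores each image against every text
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    image_to_text = sum(cross_entropy(columns[j], j) for j in range(n)) / n
    return (text_to_image + image_to_text) / 2

texts = [[1.0, 0.0], [0.0, 1.0]]
aligned = contrastive_loss(texts, [[0.9, 0.1], [0.1, 0.9]])
mismatched = contrastive_loss(texts, [[0.1, 0.9], [0.9, 0.1]])
print(aligned < mismatched)  # True
```

Matched pairs that point in similar directions yield a small loss, while mismatched pairs yield a large one; minimizing it pushes the two encoders' outputs into a shared space.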

In the fine-tuning stage, the framework uses a supervised learning method to adapt the multimodal representation to the event extraction task. The framework uses a task-specific decoder, which takes the output of the text and image encoders and predicts the event type and arguments for each input pair. The framework also uses a multi-task learning technique, which jointly optimizes the event extraction task and a secondary task, such as image captioning or text summarization. This technique helps to improve the performance and accuracy of the event extraction task by leveraging the auxiliary information and feedback from the secondary task.
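A jointly optimized objective of this kind is commonly written as a weighted sum of per-task losses. The sketch below assumes that the event-type head and the captioning head each output raw logits; `joint_objective` and `aux_weight` are hypothetical names, and the weighting of 0.5 is an assumption, since the text does not say how the two losses are balanced.

```python
import math

def cross_entropy(logits, target):
    """Negative log-probability of the target index under a softmax over logits."""
    m = max(logits)  # subtract the max before exponentiating, for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]

def joint_objective(event_logits, event_label, caption_logits, caption_tokens, aux_weight=0.5):
    """Primary event-type loss plus a weighted auxiliary captioning loss.

    caption_logits holds one row of vocabulary logits per caption position and
    caption_tokens holds the matching target token ids (teacher forcing).
    """
    primary = cross_entropy(event_logits, event_label)
    auxiliary = sum(
        cross_entropy(row, token) for row, token in zip(caption_logits, caption_tokens)
    ) / len(caption_tokens)
    # Minimizing this one scalar trains both tasks at once; in a real model its
    # gradients would also reach the shared text and image encoders.
    return primary + aux_weight * auxiliary

loss = joint_objective([2.0, 0.5, -1.0], 0, [[1.0, 0.2], [0.1, 1.5]], [0, 1])
print(round(loss, 4))
```

Setting `aux_weight` to zero recovers plain single-task training, which makes the weight a convenient knob for checking whether the secondary task actually helps.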

The pre-training and fine-tuning framework can be applied to different levels of granularity and complexity, depending on the design and objective of the AI system.

What are the Benefits of Multimodal Training Technique?

Multimodal training can offer AI systems several benefits, including:

Improved performance and accuracy:

By learning from and integrating multiple types of data and tasks, multimodal training can help AI systems achieve higher performance and accuracy than they would by learning from a single type of data or task.

For example, an image captioning system that learns from both images and text can generate more accurate and relevant captions than a system trained on only one of those modalities. Similarly, a code generation system that learns from both natural language and code can generate more correct and efficient code than a system that uses only natural language or only code.

Increased robustness and versatility:

By learning from and transferring knowledge across different domains and scenarios, multimodal training can help AI systems become more robust and versatile, able to handle a wide range of inputs and outputs.

For example, a math problem-solving system that uses both text and equations can solve a wider range of math problems than a system that uses only text or only equations. Similarly, a video summarization system that uses both audio and video can summarize a wider variety of videos than a system that uses only audio or only video.

Enhanced creativity and innovation:

By combining multiple types of data and tasks and generating outputs across them, multimodal training can help AI systems exhibit more creativity and innovation, producing novel and diverse outputs.

For example, a poem generation system that uses both text and images can write more expressive poems than a system that uses only text or only images. Similarly, a music composition system that uses both audio and code can compose more original music than a system that uses only audio or only code.


How Does Multimodal Training Technique Work?

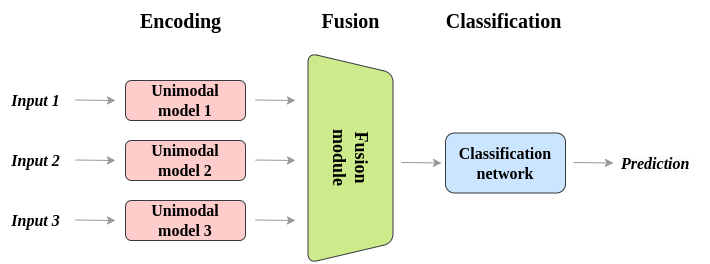

Multimodal training technique can be implemented in various ways, depending on the architecture, algorithm, and objective of the AI system. However, a common approach is to use a pre-training and fine-tuning framework, which consists of two stages:

Pre-training: In this stage, the AI system is trained on a large and diverse corpus of multimodal data, such as text, images, audio, video, and code, using a self-supervised or unsupervised learning method. The goal is to learn a general and powerful representation of the multimodal data that captures the common and salient features and concepts across different modalities and tasks. This representation can be encoded by a single model, such as a neural network, or by a set of models, such as a transformer encoder and decoder. Pre-training is where the multimodal learning happens: the emphasis is on acquiring knowledge from many types of data.

Fine-tuning: In this stage, the AI system is trained on a specific dataset and task, such as image captioning, code generation, or math problem solving, using a supervised or semi-supervised learning method. The goal is to adapt the representation learned during pre-training to the target domain and task, either by adjusting the model's parameters or by adding task-specific layers or modules. When the model is fine-tuned on several tasks, this stage can be seen as a form of multitask learning.
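One lightweight form of the fine-tuning stage keeps the pre-trained representation fixed and trains only a new task-specific layer on labeled data. The sketch below assumes pre-training has already produced an encoder, represented here by the hypothetical `frozen_encoder` with made-up weights, and trains a logistic-regression head on a toy binary task. Full fine-tuning would also update the encoder's own parameters.

```python
import math

# Hypothetical weights standing in for a pre-trained encoder; fine-tuning never updates them.
FROZEN_WEIGHTS = [[1.0, -0.5], [0.3, 0.8], [-0.7, 0.6]]

def frozen_encoder(x):
    """Map raw input features to a representation using the fixed pre-trained weights."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fine_tune_head(examples, labels, lr=0.5, epochs=200):
    """Train only a new task-specific linear head with supervised binary labels."""
    features = [frozen_encoder(x) for x in examples]  # computed once: the encoder is frozen
    w, b = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for h, y in zip(features, labels):
            p = sigmoid(sum(wi * hi for wi, hi in zip(w, h)) + b)
            grad = p - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * grad * hi for wi, hi in zip(w, h)]
            b -= lr * grad
    return w, b

def predict(w, b, x):
    """Classify one input with the frozen encoder followed by the trained head."""
    h = frozen_encoder(x)
    return sigmoid(sum(wi * hi for wi, hi in zip(w, h)) + b) > 0.5

examples = [[1.0, 0.2], [0.9, 0.1], [0.1, 0.8], [0.2, 0.9]]
labels = [1, 1, 0, 0]
w, b = fine_tune_head(examples, labels)
print([predict(w, b, x) for x in examples])
```

Freezing the encoder makes fine-tuning cheap and reduces the risk of overwriting what pre-training learned, at the cost of some flexibility on tasks that differ sharply from the pre-training data.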

One example of multimodal training is the CAMEL framework, which stands for Cross-Modal Augmentation for Multimedia Event Extraction [1]. This framework aims to extract events and their arguments from multimodal data, such as text, images, and videos. For example, given a news article and a video clip about a protest, the framework can identify the event type (protest), the location (city), the participants (people), the date (day), and the cause (reason) of the event.

What are Some Examples of Multimodal AI Systems?

Multimodal training techniques have been applied in AI systems across natural language processing, computer vision, speech processing, code generation, music composition, and art creation. Here are some state-of-the-art examples of multimodal AI systems:

Google Gemini:

Google Gemini, built by Google DeepMind, is described by Google as its most capable and general AI model yet. It is a multimodal, multitask model offered in three sizes: Ultra, Pro, and Nano. It can handle text, images, audio, video, and code, and perform various tasks, such as reasoning, planning, understanding, and creating.

Gemini is built from the ground up for multimodality, using a pre-training and fine-tuning framework. According to Google’s technical report, it builds on Transformer decoders and is trained on text interleaved with image, audio, and video inputs, so a single model serves all modalities rather than one model per modality. Google DeepMind has also said that Gemini draws on techniques from AlphaGo, such as tree search and reinforcement learning, to enhance its capabilities.
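The idea of one model serving every modality can be illustrated by how mixed inputs are flattened into a single token sequence before the model sees them. This is a hypothetical sketch, not Gemini's actual input format: the `Segment` type, the boundary-marker ids, and the token values are all invented for illustration, and a real system would produce the tokens with learned modality-specific encoders or tokenizers.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Segment:
    modality: str      # "text", "image", "audio", ...
    tokens: List[int]  # ids from a modality-specific tokenizer (hypothetical)

# Hypothetical start/end marker ids that tell the model where a non-text segment begins and ends.
BOUNDARY = {"image": (1001, 1002), "audio": (1003, 1004)}

def interleave(segments):
    """Flatten mixed-modality segments, in order, into one token sequence for a single model."""
    sequence = []
    for seg in segments:
        if seg.modality in BOUNDARY:
            start, end = BOUNDARY[seg.modality]
            sequence += [start, *seg.tokens, end]
        else:
            sequence += seg.tokens
    return sequence

prompt = [Segment("text", [5, 6]), Segment("image", [70, 71, 72]), Segment("text", [8])]
print(interleave(prompt))  # [5, 6, 1001, 70, 71, 72, 1002, 8]
```

Because the image sits between two pieces of text in the same sequence, the model can relate the words before and after it to the image content directly, instead of combining outputs from separate single-modality models.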

Gemini is currently rolling out across a range of Google products and platforms, such as Bard, Google Assistant, Google Photos, Google Translate, and more.


DALL-E:

DALL-E, built by OpenAI, is a multimodal AI system that creates images from text descriptions. It is a generative model trained on a large corpus of text-image pairs using a self-supervised learning method. The original DALL-E uses a single transformer that models a caption's text tokens and the image's tokens as one sequence. DALL-E can generate diverse and realistic images from natural language inputs, such as “a pentagon made of cheese” or “a snail made of a harp”.
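The original DALL-E paper describes modeling a caption's text tokens and an image's discrete codes as one sequence that a transformer predicts token by token. The toy sketch below shows only that data layout; the id-offset scheme, the `TEXT_VOCAB` constant, and the token values are illustrative assumptions, and a real model obtains image codes from a learned image tokenizer.

```python
TEXT_VOCAB = 16384  # assumed text-vocabulary size; image codes are numbered after it

def build_stream(text_ids, image_ids):
    """Concatenate caption tokens and image codes into one sequence.

    Image codes are shifted past the text vocabulary so the two id ranges never collide.
    """
    return list(text_ids) + [TEXT_VOCAB + code for code in image_ids]

def next_token_pairs(stream):
    """Autoregressive training pairs: predict token t + 1 from tokens 0..t."""
    return [(stream[: t + 1], stream[t + 1]) for t in range(len(stream) - 1)]

stream = build_stream([3, 14], [0, 5])
for context, target in next_token_pairs(stream):
    print(context, "->", target)
# [3] -> 14
# [3, 14] -> 16384
# [3, 14, 16384] -> 16389
```

In this setup, generation feeds the model only the caption tokens and samples the image codes one at a time, which the image tokenizer's decoder then turns back into pixels.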

CLIP:

CLIP, built by OpenAI, is a multimodal AI system that learns visual concepts from natural language supervision. It is a contrastive model trained on a large corpus of text-image pairs using a self-supervised learning method. CLIP uses separate encoders for text (a transformer) and images (a ResNet or Vision Transformer), with a contrastive loss function to align the representations. It can classify images zero-shot by matching them against natural language labels, such as “a photo of a cat” or “a painting of a person”, and its representations are widely used as building blocks for tasks such as object detection and image captioning.
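Zero-shot classification with a CLIP-style model comes down to comparing one image embedding with the embeddings of several text prompts and picking the closest. In the sketch below, the embeddings are made-up toy vectors standing in for encoder outputs, and the function name and temperature are assumptions rather than CLIP's own API.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def zero_shot_classify(image_emb, label_embs, temperature=0.01):
    """Return (best_label, probabilities) by comparing an image embedding with
    the embeddings of natural language prompts such as "a photo of a cat"."""
    labels = list(label_embs)
    logits = [cosine(image_emb, label_embs[l]) / temperature for l in labels]
    m = max(logits)  # softmax with max subtraction for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    probs = {l: e / total for l, e in zip(labels, exps)}
    return max(probs, key=probs.get), probs

# Toy embeddings standing in for the outputs of the text and image encoders.
prompts = {
    "a photo of a cat": [0.9, 0.1, 0.0],
    "a photo of a dog": [0.1, 0.9, 0.1],
    "a painting of a person": [0.0, 0.2, 0.9],
}
label, probs = zero_shot_classify([0.8, 0.2, 0.1], prompts)
print(label)  # a photo of a cat
```

Adding a new class only requires writing a new prompt and encoding it, with no retraining, which is what makes this style of classification "zero-shot".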


Conclusion

Multimodal training teaches AI systems to learn from and integrate multiple types of data and tasks in a single framework. It can improve the performance, accuracy, robustness, versatility, creativity, and innovation of AI systems, and enable them to handle a wide range of scenarios and challenges. Google Gemini, DALL-E, and CLIP all apply multimodal training techniques and show what state-of-the-art multimodal AI can do. Multimodal training is a promising direction for the future of AI, and it may bring us closer to the long-term goal of artificial general intelligence: systems that can understand and interact with the world as humans do.