Introduction

The AI hardware industry is booming, with the global market predicted to reach USD 50.67 billion by 2025. This surge is fueled by the increasing demand for AI technologies across various sectors, including healthcare, automotive, and financial services. AI hardware, comprising specialized chips and processors, is the cornerstone that supports the complex algorithms and applications of AI. In this article, we’ll delve into the top 5 companies that are not just riding the AI wave but are creating it, by developing cutting-edge AI hardware.


Top 5 Under-the-Radar AI Hardware Companies Poised to Take Off

1. SambaNova Systems

What they do:

- SambaNova develops hardware and software designed to run large AI models, such as large language models (LLMs). Their goal is to make AI faster, more efficient, and easier for businesses to use.

Their technology:

- One of their key products is a custom AI chip called the SN40L, which SambaNova describes as a Reconfigurable Dataflow Unit (RDU). The chip is built to handle the massive data flows that modern AI models require.

- They also offer a software platform that manages the entire AI workflow, from data ingestion to model training and deployment.

Impact:

- SambaNova’s technology has the potential to democratize AI by making it more affordable and accessible for companies of all sizes. This could lead to a new wave of innovation in various industries.


2. Cerebras Systems

What they do:

- Cerebras Systems is an American AI company that builds computer systems designed specifically for complex deep learning applications.

- Its core product tackles large-scale AI workloads with a combined hardware and software solution.

Their technology:

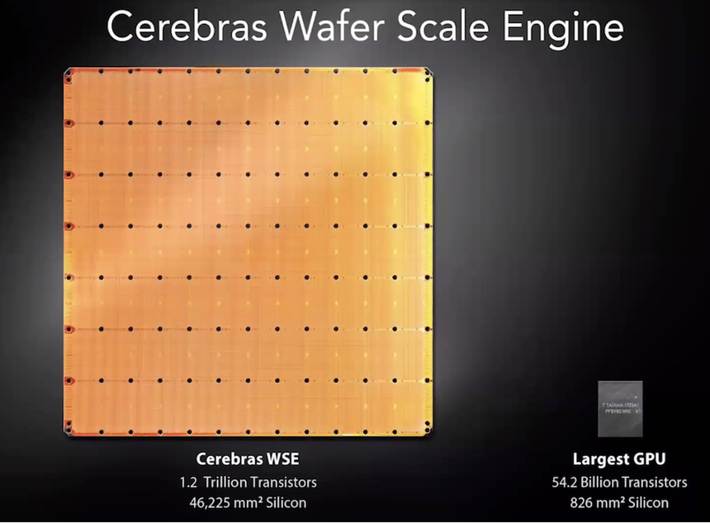

- The centerpiece of Cerebras’ technology is the Cerebras Wafer-Scale Engine (WSE). This is essentially a single, giant computer chip designed specifically for AI tasks.

- The WSE integrates all the necessary components (processing, memory, and connections) onto a single chip, allowing for faster and more efficient data flow compared to traditional AI hardware built with multiple separate components.

- Cerebras builds its AI computers, the CS-series systems, around the WSE. Its latest offering, the CS-3, is billed by the company as the world’s fastest AI accelerator, designed to handle complex AI workloads with massive datasets.

Company details:

- Founded in 2015, Cerebras Systems has its headquarters in Sunnyvale, California, with offices around the world.

- As of 2023, the company employed around 335 people, and it is preparing for an Initial Public Offering (IPO), which would make it a publicly traded company.

Impact:

- Cerebras’ innovative technology has the potential to revolutionize deep learning by enabling researchers and companies to train complex AI models faster and more efficiently. This could lead to breakthroughs in various fields that rely on AI, such as drug discovery, materials science, and autonomous vehicles.

3. Graphcore

Graphcore is a British semiconductor company founded in 2016 and headquartered in Bristol, UK. Its primary focus is developing hardware accelerators designed to boost the performance of machine learning (ML) tasks.

What they offer:

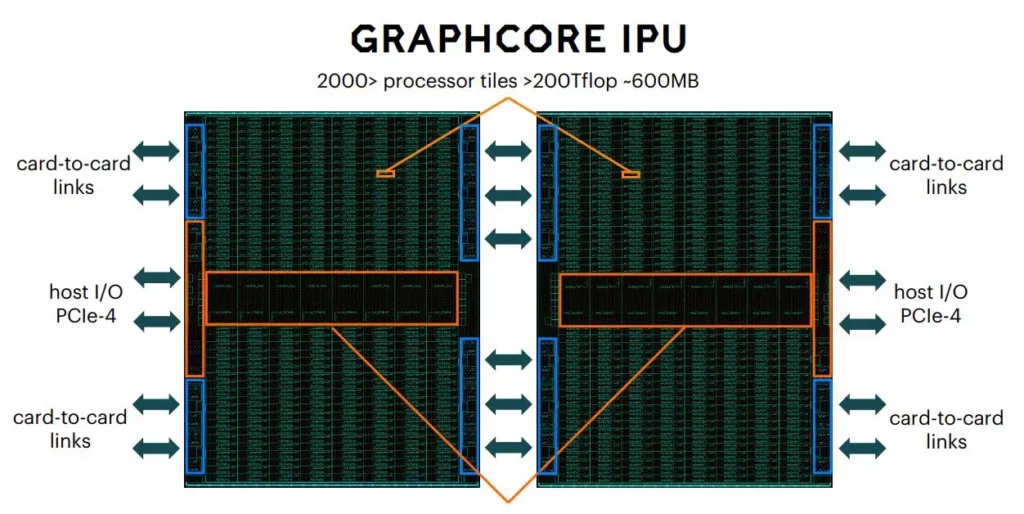

- Intelligence Processing Unit (IPU): Graphcore’s flagship product, a specialized processor for running AI and ML workloads. Unlike traditional CPUs or GPUs, IPUs are designed from the ground up around the needs of machine learning algorithms, which Graphcore says allows for significant speedups over general-purpose hardware.

- Cloud Services: The company offers access to their IPUs through cloud platforms, making it easier for businesses to leverage their technology without significant upfront investment.

- Software Tools: They provide a suite of software tools, the Poplar SDK, that works alongside their IPUs to streamline the development and deployment of ML models.

Benefits:

- Faster Training and Inference: IPUs can significantly reduce the time it takes to train and run ML models, leading to faster development cycles and quicker deployment of AI applications.

- Improved Efficiency: The specialized architecture of IPUs allows for more efficient use of power and resources compared to traditional hardware.

- Scalability: Graphcore’s systems are designed to scale up to meet the demands of ever-growing datasets and increasingly complex models.

Real-world applications:

- Drug Discovery: Pharmaceutical companies are using Graphcore’s IPUs to accelerate the development of new drugs by analyzing vast amounts of biological data.

- Financial Fraud Detection: Financial institutions are leveraging Graphcore technology to detect fraudulent activity in real-time, protecting their customers and improving security.

- Smart Cities: Cities are using Graphcore IPUs to analyze traffic patterns and optimize infrastructure management, leading to improved efficiency and sustainability.

Comparison with other AI hardware companies:

- Graphcore competes with companies like NVIDIA and Cerebras Systems, all of which develop specialized hardware for AI applications.

- Their IPUs differ from NVIDIA’s GPUs in their more specialized design for machine learning tasks.

- Cerebras Systems focuses on building massive, wafer-scale AI processors, while Graphcore offers a more scalable and accessible solution through their IPU architecture.


4. Lightmatter

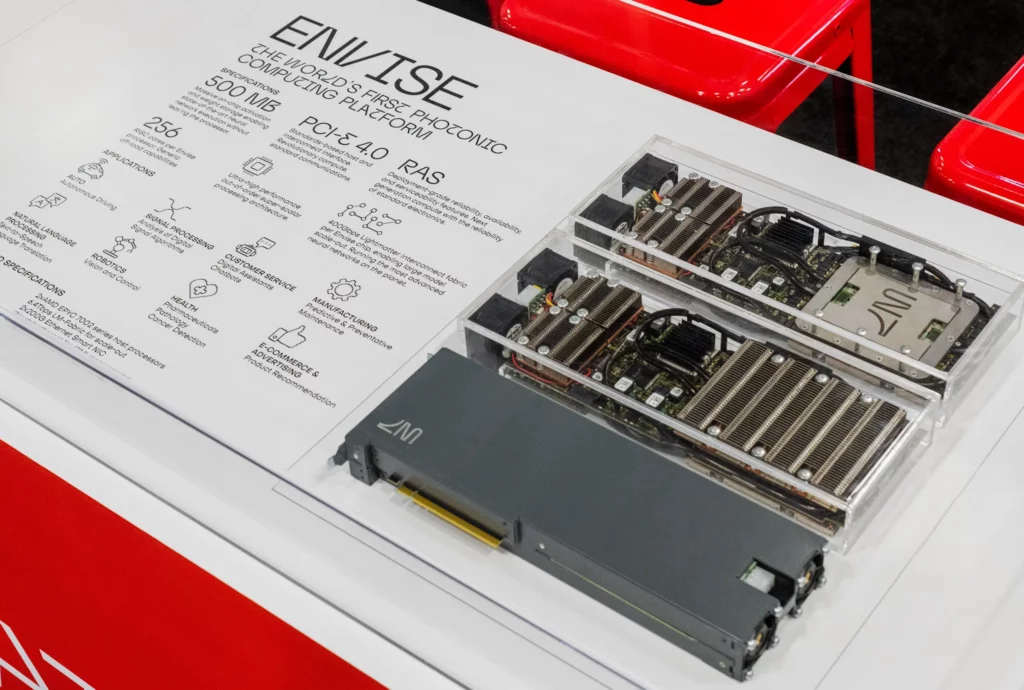

Lightmatter is a company pioneering the use of photonics to revolutionize computing.

Focus:

- Lightmatter uses light (photons) alongside electricity (electrons) to transmit and process data within computer chips. This approach, known as photonic computing, has the potential to be significantly faster and more energy-efficient than purely electronic methods.

Products:

- Their initial focus is on developing two key products:

- Photonic AI Chip (Envise): This chip is designed to accelerate the operations at the core of artificial intelligence (AI) workloads, chiefly the matrix multiplications used in machine learning models.

- Photonic Interconnect (Passage): This technology aims to improve communication between chips within a computer system by using light pulses instead of electrical signals.

Impact:

- Lightmatter’s technology has the potential to be a game-changer for the computing industry. By leveraging the speed and efficiency of light, they could enable the development of faster, more powerful computers with lower energy consumption. This could lead to advancements in various fields that rely on high-performance computing, like artificial intelligence, scientific research, and big data analysis.

Recent Developments (as of May 8, 2024):

- Lightmatter recently secured $155 million in additional funding, valuing the company at $1.2 billion. This significant investment highlights the growing interest in photonic computing and Lightmatter’s position as a leader in the field.

- They were also featured in a recent MIT News article discussing their progress in developing photonic chips for AI applications.

Here’s a comparison with traditional electronic computing:

- Current computers rely on electrons to transmit and process data. While this method has served us well, it faces limits in speed and energy efficiency as transistors keep shrinking and more of a chip’s power budget goes to moving data over electrical wires.

- Lightmatter’s approach uses photons instead. Optical signals avoid the resistive losses of electrical wires and can carry several data streams on different wavelengths through a single waveguide, which opens the door to significant performance gains and lower power consumption in future computing systems.

5. Groq

Groq is an American AI company focused on accelerating the performance of AI workloads, particularly large language models (LLMs) and other demanding applications.

Here’s a breakdown of what they offer:

Core Technology:

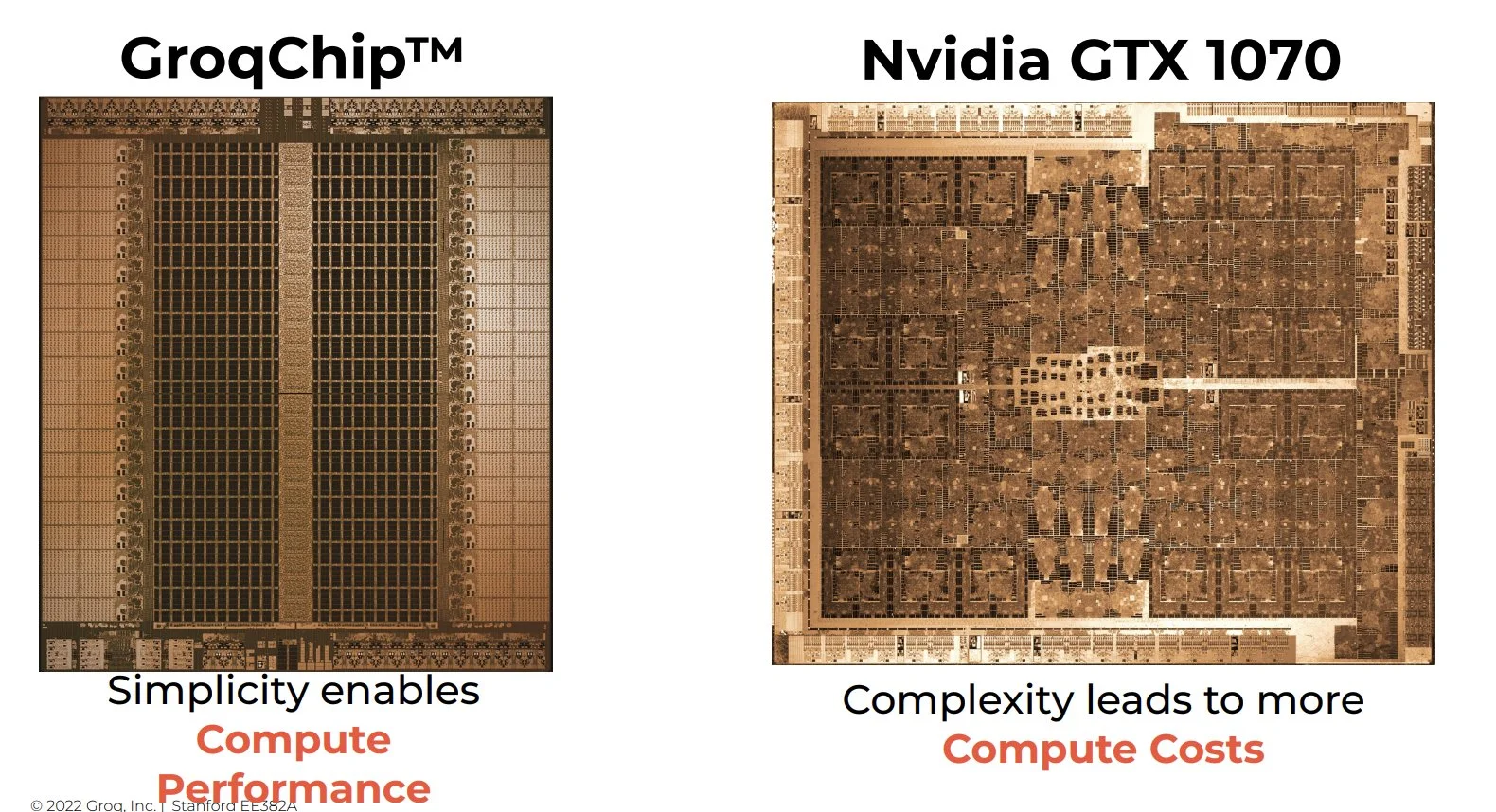

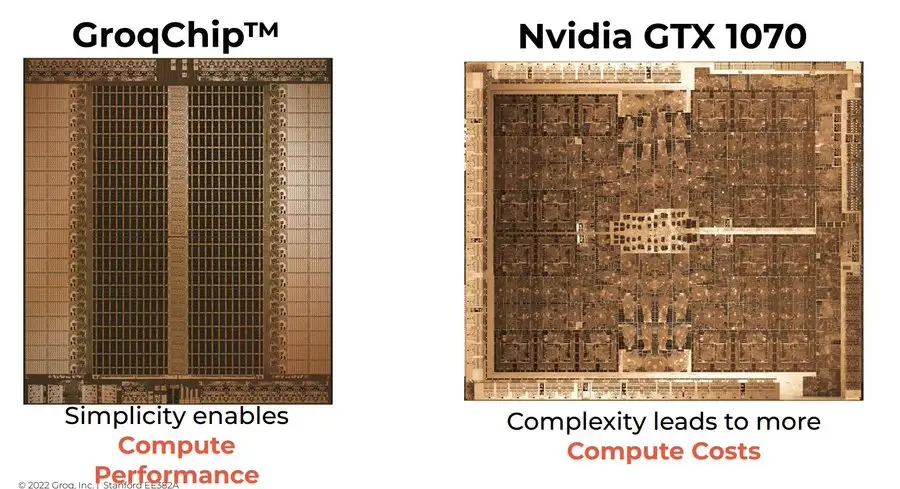

- Groq’s key product is the Language Processing Unit (LPU), a custom-designed AI accelerator chip [Groq]. Unlike some competitors who create their own AI models, Groq focuses on making existing open-source models run faster on their LPU.

Benefits of Groq’s LPU:

- Faster Inference: The LPU is designed to significantly speed up the process of running AI models, leading to quicker responses and real-time applications.

- Focus on Open-Source: By working with open-source models, Groq aims to widen accessibility and help users avoid vendor lock-in.

- Simpler Deployment: Groq’s simpler hardware architecture is claimed to ease the process of deploying AI solutions at scale.

Target Applications:

- Groq’s LPU is well-suited for various AI applications requiring fast processing, such as:

- Large Language Models (LLMs): Enabling faster and more efficient use of large language models for tasks like chatbots, machine translation, and text generation.

- Image Classification: Accelerating image recognition and analysis tasks crucial in various fields.

- Anomaly Detection: Enabling real-time detection of anomalies in data streams, useful for fraud prevention and system monitoring.

- Predictive Analytics: Speeding up analysis of large datasets for predictive modeling and forecasting.

Company Background:

- Founded in 2016 by a team of ex-Google engineers, including Jonathan Ross, who co-designed the Google TPU (Tensor Processing Unit). This experience positions Groq as a strong player in the AI hardware acceleration field.

- Groq has secured significant funding rounds, indicating investor confidence in their technology.

- They are headquartered in Mountain View, California, with offices worldwide.

Impact:

- Groq’s technology has the potential to democratize access to high-performance AI by making it faster and easier to deploy complex models. This could benefit various industries and organizations looking to leverage the power of AI.

Here’s a quick comparison with other AI hardware companies:

- NVIDIA: A major player in AI hardware, offering GPUs (Graphics Processing Units) that can be used for AI workloads. However, Groq’s LPU is specifically designed for AI tasks, potentially offering better performance and efficiency for certain applications.

- Cerebras Systems: Focuses on building massive AI processors for large-scale deep learning tasks. Groq offers a more scalable and accessible solution through their LPU architecture.


Conclusion

As we stand on the cusp of a new era, these five AI hardware companies are not merely participants but pioneers. They are shaping a future where AI is not a distant concept but a tangible, integral part of our daily lives. The AI hardware revolution is here, and companies like these are redefining what it means to lead in technology.