Introduction

In the world of large language models (LLMs), speed matters enormously, and Groq, a company founded by former Google engineers, promises a significant leap forward. Groq is making waves with its Language Processing Unit (LPU), a specialized chip designed to outperform GPUs on LLM performance benchmarks.

While established services such as ChatGPT run on conventional Nvidia GPUs, Groq’s LPU operates on an entirely different level. ChatGPT’s GPUs manage a modest 30-60 tokens per second, whereas Groq reports an impressive 500 tokens per second for small AI models and a still impressive 250 for larger ones. Depending on which figures are compared, this translates to a performance leap of roughly 4 to 17 times!
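The speedup implied by the throughput figures above can be checked with simple arithmetic. The sketch below uses only the numbers quoted in the text; the function name is made up for illustration, and the result depends on which end of the GPU range is used as the baseline.

```python
# Throughput figures quoted in the text (tokens per second).
GPU_LOW, GPU_HIGH = 30, 60          # range quoted for ChatGPT's GPUs
GROQ_SMALL, GROQ_LARGE = 500, 250   # Groq figures for small and larger models

def speedup_range(groq_tps, gpu_low_tps, gpu_high_tps):
    """Return (min, max) speedup of groq_tps over the quoted GPU range."""
    # The smallest speedup compares against the fastest GPU figure,
    # the largest against the slowest.
    return groq_tps / gpu_high_tps, groq_tps / gpu_low_tps

small_min, small_max = speedup_range(GROQ_SMALL, GPU_LOW, GPU_HIGH)
large_min, large_max = speedup_range(GROQ_LARGE, GPU_LOW, GPU_HIGH)

print(f"small models:  {small_min:.1f}x to {small_max:.1f}x")
print(f"larger models: {large_min:.1f}x to {large_max:.1f}x")
```

With these inputs, small models come out at about 8.3x to 16.7x and larger models at about 4.2x to 8.3x.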


Groq vs. GPUs

Groq’s LPU: Designed for Speed

- Focuses on natural language processing (NLP) tasks like chatbots and text generation.

- Boasts superior performance compared to traditional GPUs for specific LLMs; Groq claims speeds up to 10 times faster.

- This translates to quicker response times and potentially lower power consumption.

GPUs: The Workhorse of AI

- Graphics Processing Units (GPUs) are more versatile, handling various tasks with their parallel processing architecture.

- They’re the current workhorse for many AI applications, including training LLMs (different from running them, which is Groq’s focus).

- While powerful, GPUs may not be as optimized for the sequential nature of language processing as Groq’s LPU.

A New Era for NLP?

Groq’s LPU represents a new generation of specialized AI chips. If it delivers on its promises, it could significantly improve the speed and efficiency of NLP tasks. This could lead to:

- Faster and more responsive chatbots

- More powerful language translation tools

- Advancements in areas like text summarization and content creation

Is Groq a GPU Killer?

It’s too early to say. While Groq excels at NLP, GPUs have broader applications. They’ll likely continue to be crucial for various AI tasks. However, Groq’s emergence could push the entire industry towards more specialized AI hardware in the future.

Overall, Groq’s LPU is an exciting development for the field of NLP. It has the potential to disrupt the status quo and usher in a new era of faster, more efficient language processing.

Why Is Groq’s Chip Faster Than GPUs?

There are two main reasons why Groq chips are faster than GPUs for specific tasks like running large language models (LLMs):

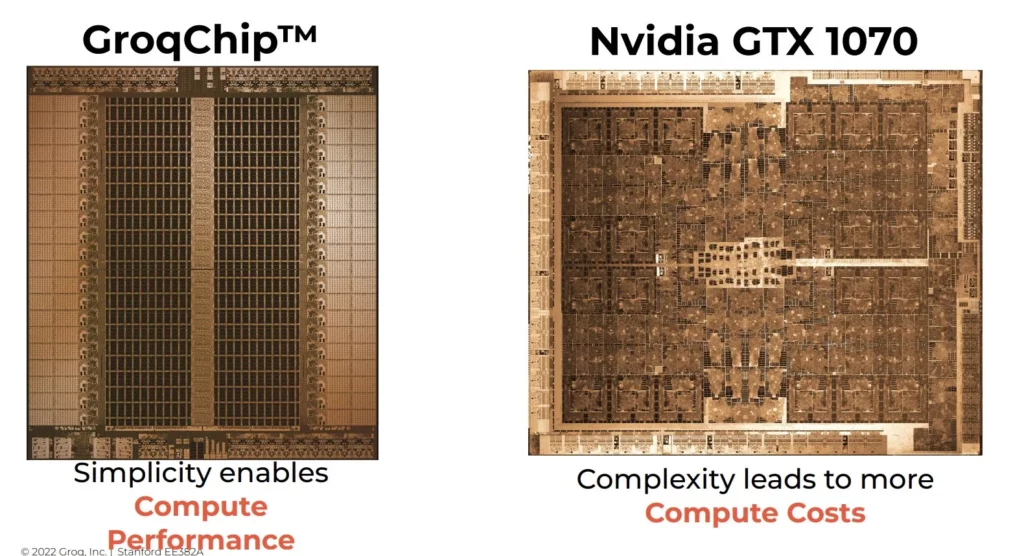

Specialized Design: Unlike GPUs which are designed for a wider range of tasks, Groq’s LPU (Language Processing Unit) is custom-built for the specific needs of NLP (Natural Language Processing). This means the chip architecture is optimized for the types of calculations required in language processing, leading to greater efficiency. Groq focuses on maximizing compute density and memory bandwidth, which are critical bottlenecks for LLMs.

Compiler-Driven Approach: GPUs take a more generic approach, in which many scheduling decisions are left to the hardware and runtime software while the program executes. Groq’s LPU works differently. Its compiler orchestrates the hardware directly and plans execution ahead of time, removing that extra layer. This tighter control allows Groq to precisely manage the flow of instructions and optimize performance for each specific task.
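To make the idea of compiler-driven orchestration concrete, here is a minimal sketch of static scheduling: every operation gets a fixed start cycle before the program runs. This is a toy illustration, not Groq's compiler. The operation names and latencies are invented, and the sketch assumes fixed, known latencies and no limit on how many operations can run in parallel.

```python
# Hypothetical mini-program: op name -> (latency in cycles, dependencies).
# All names and latencies are invented for illustration.
program = {
    "load_weights": (4, []),
    "load_input":   (2, []),
    "matmul":       (8, ["load_weights", "load_input"]),
    "add_bias":     (1, ["matmul"]),
    "activation":   (2, ["add_bias"]),
}

def static_schedule(ops):
    """Assign each op the earliest start cycle at which all inputs are ready."""
    start = {}

    def start_of(name):
        if name not in start:
            _, deps = ops[name]
            # An op can start once its slowest dependency has finished.
            start[name] = max((start_of(d) + ops[d][0] for d in deps), default=0)
        return start[name]

    for name in ops:
        start_of(name)
    return start

schedule = static_schedule(program)
total_cycles = max(schedule[name] + program[name][0] for name in program)

for name, cycle in sorted(schedule.items(), key=lambda item: item[1]):
    print(f"cycle {cycle:2d}: {name}")
print(f"total: {total_cycles} cycles")
```

Because every latency is known up front, the total run time (15 cycles here) is fixed before execution starts, which is the property that makes latency predictable.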

These factors combined can lead to significantly faster processing times for LLMs compared to GPUs. Groq claims their chips can achieve up to 10 times faster speeds for certain tasks. This translates to quicker response times, lower latency, and potentially more efficient power consumption.
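The point about memory bandwidth being a bottleneck can be illustrated with a common rule of thumb: when generating one token at a time, the model's weights must be read roughly once per token, so memory bandwidth divided by model size gives an approximate upper bound on tokens per second. The sketch below uses that simplification. The parameter count, precision, and bandwidth values are hypothetical and do not describe any particular chip.

```python
def max_tokens_per_second(num_params, bytes_per_param, bandwidth_bytes_per_s):
    """Rough upper bound on single-stream token rate when reading weights
    from memory is the limiting factor (ignores compute, caching, batching)."""
    model_bytes = num_params * bytes_per_param
    return bandwidth_bytes_per_s / model_bytes

# Hypothetical example: a 7-billion-parameter model stored in 16-bit precision.
NUM_PARAMS = 7e9
BYTES_PER_PARAM = 2

# Illustrative bandwidth values only, not vendor specifications.
for label, bandwidth in [("2 TB/s memory", 2e12), ("20 TB/s memory", 20e12)]:
    rate = max_tokens_per_second(NUM_PARAMS, BYTES_PER_PARAM, bandwidth)
    print(f"{label}: about {rate:.0f} tokens/s at most")
```

Under this simplified model, ten times the memory bandwidth gives ten times the ceiling on token rate, which is why memory placement matters so much for inference speed.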

Sushi Knife of the LLM World

Think of it like this: a general kitchen knife can chop vegetables, but a specialised sushi knife makes the job quicker and more precise. Groq’s LPU is the sushi knife of the LLM world, perfectly tailored for the intricate task of language processing.

Groq’s On-chip Memory Architecture

Groq relies on something most modern AI accelerators do not use as their main memory: on-chip memory. Keeping storage on the same die as the compute units departs from the classic von Neumann arrangement, in which compute and storage are separate, so data is processed right next to where it is stored.

This resembles the Cerebras chip design, in which the memory and the cores are interleaved. Nvidia GPUs, on the other hand, come with large off-chip memory.

The close proximity of the crossbar matrix unit and the memory helps minimize latency, which explains why people trying Groq hardware are seeing such low latency.


Packaging advantage of On-chip memory

In addition to minimized latency, the second advantage of having on-chip memory is that this chip doesn’t require expensive and hard-to-get advanced packaging technology.

Groq doesn’t depend on memory chips from South Korea’s SK Hynix or on CoWoS packaging technology from TSMC. This makes it much cheaper for Groq to manufacture its silicon. A more mature process node means lower costs per wafer and per die, and avoiding advanced packaging removes that expense as well, so Groq can stick to domestic manufacturing options. All of this gives the company a rare flexibility to switch between fabs.


Performance benefits

During interactions with ChatGPT, users often experience a delay of 3 to 5 seconds before receiving a response. This delay is due to the GPT model operating on Microsoft Azure Cloud which runs on Nvidia H100 GPUs. The responsiveness depends on GPU performance and latency. A delay of 3 to 5 seconds significantly impacts conversation flow, highlighting a common challenge in AI interactions.
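The link between throughput and perceived delay can be sketched with simple arithmetic. The example below assumes a hypothetical 150-token response and reuses the tokens-per-second figures quoted earlier in the article. It ignores network latency, queueing, and time to first token, so it is only a rough illustration.

```python
def generation_time_s(num_tokens, tokens_per_second):
    """Time to generate a full response at a steady token rate."""
    return num_tokens / tokens_per_second

RESPONSE_TOKENS = 150  # hypothetical response length

# Tokens-per-second figures quoted earlier in the article.
for label, tps in [("GPU, low end", 30), ("GPU, high end", 60),
                   ("Groq, larger model", 250), ("Groq, small model", 500)]:
    seconds = generation_time_s(RESPONSE_TOKENS, tps)
    print(f"{label:20s}: {seconds:.1f} s")
```

At 30 tokens per second a response of this length takes 5 seconds, while at 500 tokens per second it takes 0.3 seconds.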

Groq, with its LPU, is pushing past these limits. Official benchmarks compare different AI inference services running the same Mistral model.

We obviously want the highest throughput at the lowest price. There is one big outlier, Groq, which costs about 30 cents per 1 million tokens and delivers about 430 tokens per second. Taking the average, the Groq system is four to five times faster than the other inference services listed.
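To put the price and throughput figures in perspective, the sketch below estimates the cost and generation time for a workload, using the roughly 30 cents per million tokens and 430 tokens per second quoted above. The workload size is hypothetical, and the time estimate assumes a single sequential stream with no batching or parallel requests.

```python
PRICE_PER_MILLION_USD = 0.30   # figure quoted in the text
TOKENS_PER_SECOND = 430        # figure quoted in the text

def cost_usd(num_tokens):
    """Cost of generating num_tokens at the quoted price."""
    return num_tokens / 1_000_000 * PRICE_PER_MILLION_USD

def duration_s(num_tokens):
    """Time to generate num_tokens in one sequential stream."""
    return num_tokens / TOKENS_PER_SECOND

workload_tokens = 10_000_000  # hypothetical workload
print(f"cost: ${cost_usd(workload_tokens):.2f}")
print(f"time: {duration_s(workload_tokens) / 3600:.1f} hours")
```

For this hypothetical 10-million-token workload, the cost is $3.00 and a single stream would need about 6.5 hours.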

According to another official benchmark (shown above), Meta’s Llama 2, a 70-billion-parameter model, runs up to 18 times faster on the Groq system than on GPU-based cloud providers. These benchmarks have generated a lot of excitement around Groq’s hardware.

Targeting the AI inference market

Deploying a generative AI model involves two stages: training the model to learn its parameters, and then using the trained model for prediction (inference).

Training an AI model is largely a one-time problem, and computing power keeps getting cheaper. Inference, by contrast, is a constant problem and an inherently larger market than training. Most importantly, it scales with adoption as more users and more businesses start using generative AI.

So companies like Mistral and Meta build open-source AI models, and Groq accelerates these models with its LPUs. Groq thus provides inference as a service.

Reviews

Many believe that now is a perfect time for this chip, because its speed and latency might be game-changing for many applications. For chatbots and voice assistants, this speed advantage can make a huge difference because it makes the interaction feel more natural.

Groq is able to outperform Nvidia GPUs like the H100 in latency and cost per million tokens, but not yet in throughput.


Conclusion

Groq, like Cerebras, is a very promising startup, but its success depends on the development of its software stack and on its next-generation 4-nanometer chip. Keep in mind that all the metrics discussed here were achieved with its older 14 nm chip, which is from about 2 years ago.

So, with its next planned design, Groq expects to improve speed and power efficiency several times over.