Introduction

Friday saw further gains in Wall Street’s primary indices, with Nvidia surging past the $2 trillion mark in market valuation for the first time. This milestone was propelled by the ongoing frenzy surrounding artificial intelligence following the chip maker’s impressive quarterly report. Computer components are not usually expected to transform entire businesses and industries, but a graphics processing unit Nvidia Corp. announced in 2022 has done just that. The Nvidia H100 data center chip has added more than $1 trillion to Nvidia’s value and turned the company into an AI kingmaker.

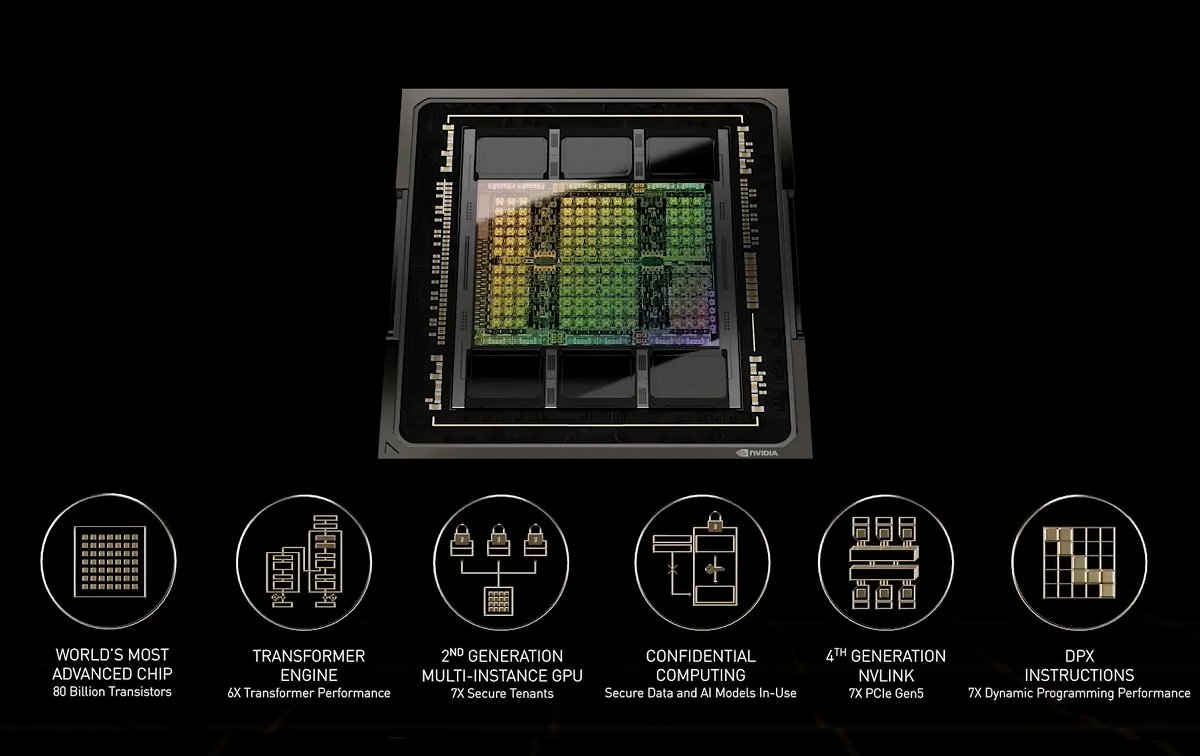

Let’s find out what is special about a chip that has taken the entire world by storm.

1. What are GPUs?

Nvidia began as a maker of graphics processing units (GPUs). As the name implies, these chips were initially used to process graphics in computers. Take computer games: as we play, we see intricate digital artwork on screen.

Each scene looks like a single image to us, but the computer builds it from many calculations performed at the same time.

Consider rendering a beach scene. Waves move, birds fly across the sky, people walk along the shore, and the viewpoint itself may be in motion. All these computations must be executed concurrently, and a CPU would take a very long time to process them because CPUs are built mainly for sequential computing, i.e. one task after another. GPUs were designed to implement parallel computing instead.
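
To make the contrast concrete, here is a minimal Python sketch. The `shade_pixel` function and the 4x4 frame are hypothetical stand-ins for real rendering work. The point is only that each pixel's value depends on nothing but its own coordinates, so the work can be done one pixel at a time or handed to many workers at once. A thread pool is used purely to illustrate the idea; it is not how a GPU actually schedules work.

```python
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 4, 4  # tiny hypothetical frame


def shade_pixel(coord):
    """Hypothetical per-pixel calculation: depends only on (x, y)."""
    x, y = coord
    return (x * 31 + y * 17) % 256


coords = [(x, y) for y in range(HEIGHT) for x in range(WIDTH)]

# Sequential: one pixel after another.
sequential = [shade_pixel(c) for c in coords]

# Parallel: the same independent calculations handed to several workers.
# pool.map returns results in the same order as the inputs.
with ThreadPoolExecutor(max_workers=8) as pool:
    parallel = list(pool.map(shade_pixel, coords))

print(sequential == parallel)
```

Both approaches produce the same frame. The difference on real hardware is that a GPU can run thousands of such independent calculations at the same time.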

2. Repurposing GPUs

Games, however, are no longer the only applications that need parallel computing. Artificial intelligence (AI) models, for instance, contain an enormous number of parameters that must be adjusted during training, and the speed of training depends on how quickly those parameters can be loaded and updated.

This is where GPUs come in. Training an AI model consists largely of matrix multiplications, which parallel computing units handle well. Running more GPUs in parallel increases the available computing power further.
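
A matrix multiplication suits parallel hardware because every value in the result can be computed without waiting for any other. The plain-Python sketch below shows that structure. The `matmul` helper and the tiny 2x2 matrices are illustrative assumptions, not code from any AI framework, and real training uses far larger matrices and optimized GPU libraries.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    # Each output cell is an independent dot product of one row of `a`
    # with one column of `b`; no cell needs the result of another cell.
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


inputs = [[1, 2], [3, 4]]    # hypothetical 2x2 batch of inputs
weights = [[5, 6], [7, 8]]   # hypothetical 2x2 weight matrix
print(matmul(inputs, weights))  # [[19, 22], [43, 50]]
```

Here the four output cells are computed one after another. On a GPU, each cell (or block of cells) could be assigned to its own thread.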

With the emergence of generative AI, there has been a substantial surge in demand for GPUs like the Nvidia H100. These GPUs are employed in supercomputers and servers as computing units for training machine learning (ML) algorithms.

3. What is Nvidia H100?

In response to the escalating demand for parallel computing units in servers, Nvidia began making GPUs designed specifically for data centres. It has introduced a series of GPU architectures, from Tesla to the current Hopper. Each architecture comes in variants aimed at different price and performance points. One such variant of the Hopper architecture is the H100.

The “H” stands for Hopper, after the computer science pioneer Grace Hopper. Nvidia’s preceding architectures are likewise named after renowned scientists. For instance, Ampere (A100) is named after André-Marie Ampère, Volta (V100) after Alessandro Volta, and so forth.

4. Why is Nvidia H100 so special?

Generative AI platforms learn to complete tasks such as translating text, summarizing reports and synthesizing images by training on huge tomes of preexisting material. The more they see, the better they become at things like recognizing human speech or writing job cover letters. They develop through trial and error, making billions of attempts to achieve proficiency and sucking up huge amounts of computing power in the process.

Nvidia says the H100 is four times faster than the chip’s predecessor, the A100, at training these so-called large language models, or LLMs, and is 30 times faster replying to user prompts. For companies racing to train LLMs to perform new tasks, that performance edge can be critical.

An analogy for Nvidia H100

The NVIDIA H100 is like the engine of a high-performance sports car in the world of data centers. Just as a sports car’s engine is designed for speed, power, and efficiency, the H100 is optimized for handling vast amounts of data at lightning-fast speeds in AI and high-performance computing (HPC) tasks.

Think of it this way: Imagine you have a massive warehouse filled with thousands of packages that need to be sorted and delivered in record time. The NVIDIA H100 is like the ultimate sorting machine in this warehouse. It can quickly and efficiently process each package, categorize it, and send it to its destination with incredible speed and accuracy.

What makes the H100 special is its advanced architecture and cutting-edge features, which allow it to tackle complex AI algorithms and HPC simulations with unprecedented performance. Just as a sports car’s engine pushes the limits of speed and power on the racetrack, the H100 pushes the boundaries of what’s possible in data processing, enabling breakthroughs in fields like healthcare, finance, and scientific research.

Performance of Nvidia H100:

- Order-of-magnitude leap: Nvidia claims the H100 offers an order-of-magnitude improvement in performance compared to previous generations, making it significantly faster for tasks like AI, scientific computing, and data analytics.

- FP8 support: It’s the first Nvidia GPU to support FP8 precision, an 8-bit number format that halves the memory needed per value compared with FP16 and speeds up computation while largely maintaining accuracy, particularly for large language models (LLMs).

- Multi-precision capabilities: It excels across various precision formats (FP64, TF32, FP32, FP16, INT8, and FP8), making it adaptable to diverse workloads.
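
The trade-off behind these precision formats can be sketched with some simple arithmetic. The snippet below is an illustration under stated assumptions: the 7-billion-parameter model is hypothetical, the figures cover the stored weights only, and only a subset of the formats is listed. Python's `struct` module can round-trip a value through FP16 (format code `e`) but has no FP8 format, so the rounding demonstration uses FP16.

```python
import struct

# Bytes used to store one number in each format (subset of those listed).
BYTES_PER_VALUE = {"FP64": 8, "FP32": 4, "FP16": 2, "FP8": 1}


def weights_size_gb(num_parameters, fmt):
    """Storage for the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return num_parameters * BYTES_PER_VALUE[fmt] / 1e9


params = 7_000_000_000  # hypothetical 7-billion-parameter model
for fmt in ("FP32", "FP16", "FP8"):
    print(fmt, weights_size_gb(params, fmt), "GB")

# The cost of fewer bits: 0.1 stored as FP16 and read back is slightly off.
fp16_value = struct.unpack("<e", struct.pack("<e", 0.1))[0]
print(fp16_value, "error:", abs(fp16_value - 0.1))
```

Each halving of the bit width halves the memory and the data that must be moved, at the price of a small rounding error per value. Lower-precision formats such as FP8 are attractive when a model can tolerate that error.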

Scalability of Nvidia H100:

- NVLink Switch System: This system connects up to 256 H100 GPUs so that a cluster can take on exascale workloads that demand massive computational power.

- MIG (Multi-Instance GPU): This technology lets you partition a single H100 into as many as seven smaller, isolated GPU instances, optimizing resource utilization and providing flexibility for various workloads.

Security of Nvidia H100:

- Enhanced security features: The H100 incorporates security features, including support for confidential computing, that protect data while it is being processed and prevent unauthorized access, which is crucial for sensitive applications.

Other advantages of Nvidia H100:

- Versatility: It’s suitable for various tasks, from AI inference and training to scientific simulations and high-performance computing.

- Lower latency: Delivers lower latency for real-time applications like autonomous vehicles and edge computing.

Overall, the H100 stands out for its exceptional performance, scalability, security, and versatility, making it a powerful tool for various demanding tasks across different industries.

5. Does Nvidia have any real competitors?

Nvidia controls about 80% of the market for accelerators in the AI data centers operated by Amazon.com Inc.’s AWS, Alphabet Inc.’s Google Cloud and Microsoft Corp.’s Azure. Those companies’ in-house efforts to build their own chips, and rival products from chipmakers such as Advanced Micro Devices Inc. and Intel Corp., haven’t made much of an impression on the AI accelerator market so far.

Chips like Intel’s Xeon processors are capable of more complex data crunching, but they have fewer cores and are much slower at working through the mountains of information typically used to train AI software.

Nvidia’s advantage isn’t just in the performance of its hardware. The company invented CUDA, a programming platform for its graphics chips that allows them to be programmed for the type of work that underpins AI programs. CUDA is proprietary and runs only on Nvidia’s GPUs.

Thus, having the GPU is not enough; you also need access to the libraries that help you program it. This is the secret behind Nvidia’s exploding revenues. Nvidia’s data centre division posted an 81% increase in revenue to $22 billion in the final quarter of 2023.

Conclusion

In conclusion, the rapid ascent of Nvidia’s H100 chip has added more than $1 trillion to the company’s value, marking a significant milestone in AI innovation. With about 80% of the market for AI data centre accelerators, Nvidia remains largely unrivalled in the industry. Its data centre division saw an impressive 81% revenue surge to $22 billion in the final quarter of 2023. Moreover, Nvidia’s CUDA software platform and its continuous updates help keep the company ahead of competitors.
