Introduction

NVIDIA has emerged as a key player in datacentre computing with its Datacentre GPU accelerators. These powerful processing units are designed to cater to a diverse range of applications, promising unparalleled performance and reliability in high-demand environments.

In this comprehensive exploration, we delve into the intricacies of NVIDIA Datacentre GPUs, unravelling their specifications, use cases, and the optimal fit for various applications.


Understanding the Datacentre

Before delving into the specifics of NVIDIA’s Datacentre GPUs, it’s crucial to comprehend the term “Datacentre” in this context.

Datacentres are mission control hubs for digital activities, where servers store, process, and manage vast amounts of information.

Picture servers as powerful engines within a datacentre, each assigned specific tasks such as hosting websites, storing data, or running applications. These servers work together to keep online services running without interruption.

NVIDIA Datacentre GPUs are meticulously crafted, tested, and certified for environments where continuous, robust performance is imperative.

These powerful processors ensure seamless digital experiences, from online activities to complex scientific calculations, by efficiently handling diverse tasks.

They do this by employing parallel computing, which involves breaking a large task into many small, simple tasks and processing those tasks simultaneously.
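To make the idea concrete, the Python sketch below splits one large job into independent chunks, processes the chunks concurrently, and combines the partial results. In this example the job is summing the squares of a list of numbers. The sketch runs on ordinary CPU threads and only illustrates the decomposition. CPython threads typically do not speed up pure-Python arithmetic like this, whereas a GPU applies the same split, compute and combine pattern across thousands of hardware threads. The function names are hypothetical and are not part of any NVIDIA API.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data, num_chunks):
    """Break one large task (the whole list) into roughly equal, independent pieces."""
    size = max(1, -(-len(data) // num_chunks))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def process_chunk(chunk):
    """The small, simple task applied to each piece: a sum of squares."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, num_workers=4):
    chunks = split_into_chunks(data, num_workers)
    # Chunks do not depend on each other, so they can be processed at the same time.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_results = list(pool.map(process_chunk, chunks))
    # Combine the partial results into the final answer (a "reduction").
    return sum(partial_results)


print(parallel_sum_of_squares(list(range(1, 1001))))  # 333833500
```

The final combine step is the part that cannot be split up. That is why GPU workloads that need little combining relative to the independent work tend to parallelise best.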


The Architecture

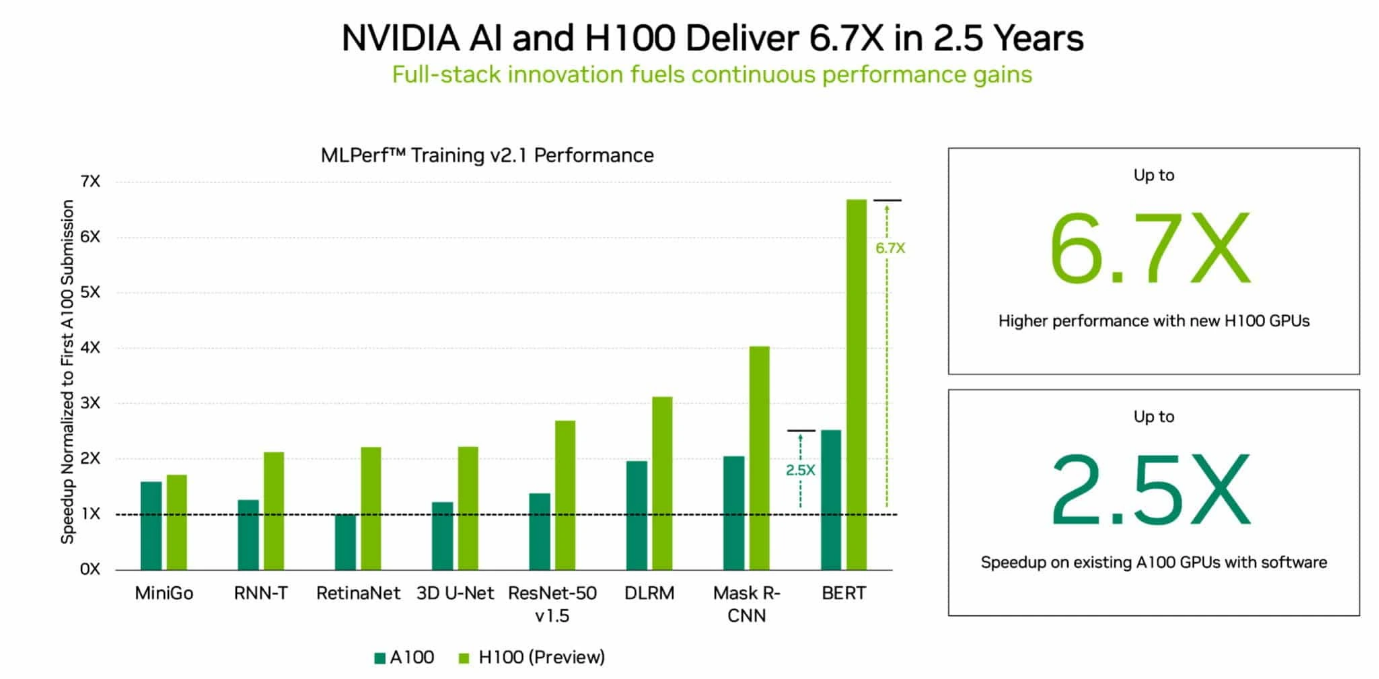

At the heart of many of NVIDIA’s Datacentre GPUs lies the Ampere architecture. NVIDIA’s two most recent architectures for Datacentre/HPC applications are Ampere and Hopper.

Hopper, the successor to Ampere, is built on TSMC’s cutting-edge 4N process and is claimed to deliver up to 6.7x more performance than Ampere on some workloads.

The successor to NVIDIA’s Hopper GPUs is the Blackwell architecture. The Blackwell GPU architecture is expected to be launched in 2024.

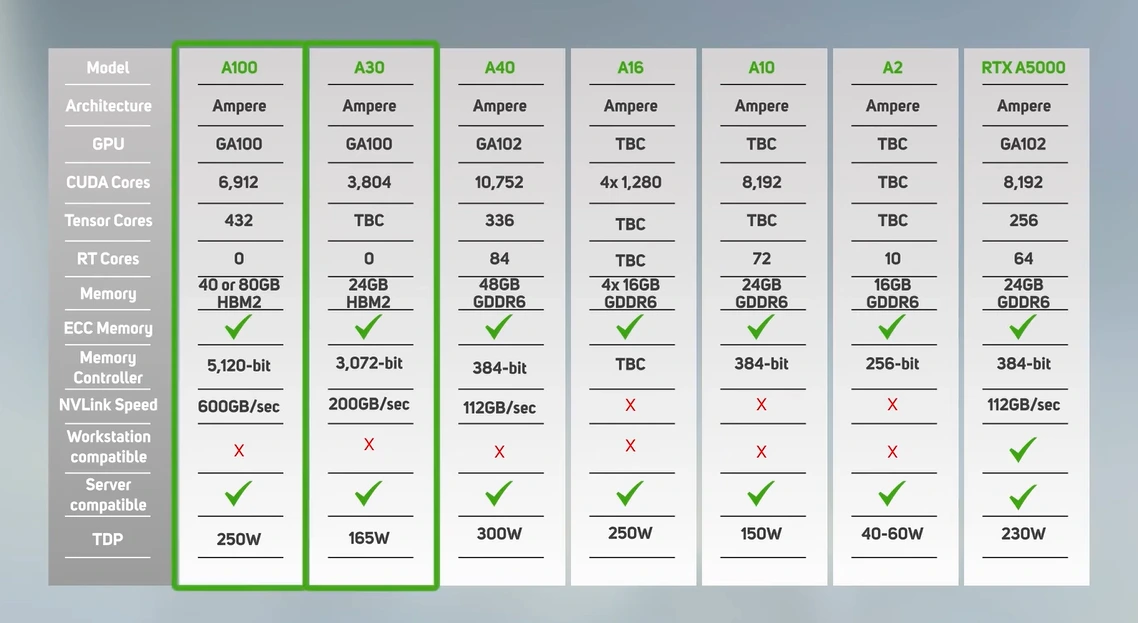

Each architecture comes in several variants tailored to different use cases. For example, the Ampere family includes the A10, A16, A30, A40, A100 and others.

Unlike consumer-grade GPUs, the ascending model numbers in Datacentre GPUs don’t uniformly indicate increased performance.

Instead, these GPUs are meticulously tailored to cater to specific use cases, marking a departure from the one-size-fits-all approach.

In this article, we explore the Ampere architecture, as it is the most widely used. To clarify relative performance and ideal use cases, we have divided the range into three groups.

1. Data-Intensive Workloads (HPC and AI)

(High-demand scientific computing workloads)

The first subset of applications that NVIDIA Datacentre GPUs excel in revolves around data-intensive workflows, such as High-Performance Computing (HPC) and Artificial Intelligence (AI).

The flagship A100 leads the pack in this domain. It offers up to 80GB of high-bandwidth memory (HBM), a large tensor core count, and the fastest NVLink connectivity in the Ampere lineup.

The A100 is the go-to choice for intensive AI model training, data analytics, and large batch inferencing.

What is NVLink?

NVLink is a high-speed communication interface developed by NVIDIA to connect GPUs (Graphics Processing Units).

It acts like a super-efficient bridge, enabling direct and fast data exchange between GPUs. This boosts their collaboration, crucial for demanding tasks such as AI and scientific computing.

For more cost-effective options, the A30 and A10 come into play. Both carry 24GB of memory and give up some of the A100’s compute and interconnect bandwidth in exchange for a lower price, which suits lighter workloads.

The A2 has a low, configurable power draw. It is an entry-level option for lighter workloads, mainly inference.


2. High-Performance Visualization

(Applications such as rendering, NVIDIA vWS or NVIDIA Omniverse Enterprise)

High-performance visualization means rendering vivid, intricate graphics, simulations or virtual environments quickly and accurately. This capability is crucial in many fields, from detailed 3D rendering in design and architecture to visualizing scientific data for analysis.

In this realm, the A40 takes centre stage. With over 10,000 CUDA cores, plenty of Tensor and RT Cores, and a substantial 48GB of memory, the A40 is the powerhouse for complex visualizations and demanding virtual GPU services.

Its counterpart, the A10, has somewhat reduced specifications (for example, 24GB of memory rather than 48GB). This makes it a viable option for less complex visualizations or fewer virtual users.

The choice between these two models comes down to the complexity of the visualization you are working with or the volume of virtual users and applications that you are looking to support.

3. Low-End, High-Volume Virtualized GPU Services (Virtual PCs)

Virtual PCs act like digital workstations running on a server. They allow users to access a virtualized desktop experience, complete with applications and resources, remotely.

GPUs such as NVIDIA’s A16 cater to these scenarios. They serve large numbers of users with modest individual needs efficiently and cost-effectively.

The A16 combines four GPUs on one board, each with a smaller 16GB of memory and fewer cores. The A2, known for its low power consumption, complements this space as an alternative where energy efficiency is paramount.
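The A16 is four separate GPUs rather than one 64GB pool, so a rough upper bound on how many virtual desktops fit on one board has to be worked out per GPU. The sketch below rests on two assumptions: every user receives the same fixed-size memory slice, and a slice cannot span two GPUs. `max_virtual_users` is a hypothetical helper. Real density also depends on how much GPU compute each user needs, which the sketch ignores.

```python
def max_virtual_users(num_gpus: int, memory_per_gpu_gb: int, profile_gb: int) -> int:
    """Upper bound on concurrent users when each gets a fixed memory slice.

    Assumption: a user's slice lives on exactly one GPU, so each GPU is
    filled independently and any leftover memory on it goes unused.
    """
    if profile_gb <= 0:
        raise ValueError("profile size must be positive")
    users_per_gpu = memory_per_gpu_gb // profile_gb
    return num_gpus * users_per_gpu


# A16 layout from the text: four GPUs with 16GB each.
for profile in (1, 2, 4, 16):
    print(f"{profile}GB per user -> up to {max_virtual_users(4, 16, profile)} users")
```

Splitting the board into four GPUs matters for odd slice sizes. With a hypothetical 3GB slice, each GPU fits five users, for 20 in total. Naively dividing 64GB by 3GB would suggest 21.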


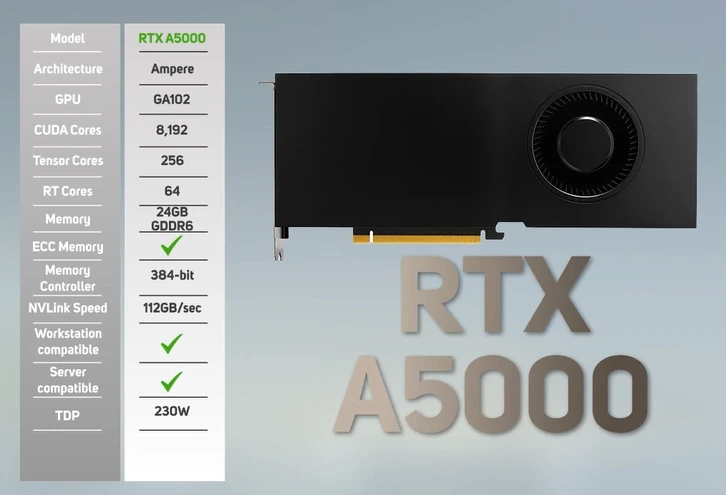
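The guidance from the three use-case groups above can be condensed into a small lookup. `Workload` and `suggest_gpu` are hypothetical names. The helper encodes only this article’s rough recommendations and is not an official NVIDIA sizing tool. Energy efficiency is treated as the overriding constraint, mirroring how the article positions the A2.

```python
from enum import Enum


class Workload(Enum):
    """The three use-case groups this article divides the Ampere range into."""
    HPC_AI = "data-intensive HPC and AI"
    VISUALIZATION = "high-performance visualization"
    VIRTUAL_PC = "high-volume virtual PCs"


def suggest_gpu(workload: Workload, demanding: bool, power_constrained: bool = False) -> str:
    """Return the card(s) this article suggests for a workload (hypothetical helper)."""
    if power_constrained and workload is not Workload.VISUALIZATION:
        # The article highlights the low-power A2 for the AI and virtual-PC groups.
        return "A2"
    if workload is Workload.HPC_AI:
        # Flagship for heavy training; cheaper cards for lighter work.
        return "A100" if demanding else "A30 or A10"
    if workload is Workload.VISUALIZATION:
        # Complex scenes or many virtual users favour the A40.
        return "A40" if demanding else "A10"
    # Virtual PCs: many users with modest individual needs.
    return "A16"


print(suggest_gpu(Workload.HPC_AI, demanding=True))          # A100
print(suggest_gpu(Workload.VISUALIZATION, demanding=False))  # A10
print(suggest_gpu(Workload.VIRTUAL_PC, demanding=False, power_constrained=True))  # A2
```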

The Unconventional Contender: RTX A5000

Adding an intriguing twist to the lineup is the RTX A5000. Technically a workstation card with active cooling, it finds a sweet spot in server builds, making it a noteworthy inclusion in the Datacentre GPU discussion.

Active cooling here refers to a mechanism that uses fans or other dynamic components to dissipate heat generated by a device, such as a GPU.

Unlike passive cooling, which relies on a heat sink and the server chassis’s own airflow, active cooling moves air across the card itself to regulate temperature. Priced below the flagship datacentre cards, the RTX A5000 is an excellent choice for less demanding projects, demonstrating the versatility of NVIDIA’s GPU offerings.

Performance versus Cost

Balancing performance against cost comes down to your applications and your budget.

While the A100 and A40 might cost more upfront, their superior performance significantly reduces scientific analysis or rendering times, offering long-term productivity gains.

Consider your project’s complexity – investing in higher-end GPUs could well be worth the initial extra cost.

It’s about optimizing efficiency and reaping the benefits of faster results, making the higher GPU cost a justifiable investment in enhanced productivity. It’s a nuanced decision, balancing the upfront costs against the long-term advantages.
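The upfront-versus-long-term trade-off can be made concrete with a simple break-even estimate. The pricier GPU pays for itself once the value of the time it saves exceeds its extra cost. The sketch below is a simplified model under stated assumptions. `break_even_jobs` is a hypothetical helper, the value of an hour saved is a figure you must supply, and power, cooling and resale value are ignored.

```python
def break_even_jobs(price_fast: float, price_slow: float,
                    hours_fast: float, hours_slow: float,
                    value_per_hour: float) -> float:
    """Number of jobs after which the faster (pricier) GPU has paid for itself.

    price_*: upfront cost of each option
    hours_*: time one job takes on each option
    value_per_hour: what an hour of saved time is worth (your own assumption)
    """
    hours_saved_per_job = hours_slow - hours_fast
    if hours_saved_per_job <= 0:
        raise ValueError("the pricier GPU must finish each job faster")
    if value_per_hour <= 0:
        raise ValueError("value_per_hour must be positive")
    extra_cost = price_fast - price_slow
    return extra_cost / (hours_saved_per_job * value_per_hour)


# Illustrative numbers only, not real prices or benchmark results.
print(break_even_jobs(price_fast=15_000, price_slow=5_000,
                      hours_fast=2, hours_slow=7, value_per_hour=50))  # 40.0
```

Under these made-up figures, the higher-end card comes out ahead after about 40 jobs. A project that will run far more jobs than that is where the extra upfront cost is easiest to justify.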

Conclusion

Navigating the waters of NVIDIA Datacentre GPUs demands a nuanced understanding of their specifications and targeted applications.

The above serves as a compass, guiding readers through the intricacies of each GPU model and its optimal use cases. As the technology landscape continues to evolve, NVIDIA remains at the forefront, giving enterprises the computing power they need.

Whether it’s high-performance computing, visualization, or virtualized GPU services, NVIDIA Datacentre GPUs stand as formidable pillars in the realm of enterprise-grade technology.