Introduction

According to Allied Market Research, the global artificial intelligence (AI) chip market is projected to reach $263.6 billion by 2031. The AI chip market is vast and can be segmented in a variety of different ways, including chip type, processing type, technology, application, industry vertical, and more. However, the two main areas where AI chips are being used are at the edge (such as the chips that power your phone and smartwatch) and in data centres (for deep learning inference and training).

No matter the application, all AI chips can be defined as integrated circuits (ICs) that have been engineered to run machine learning workloads. They may consist of FPGAs, GPUs, or custom-built ASIC AI accelerators. They work much like the human brain in how they process decisions and tasks. The true differentiator between a traditional chip and an AI chip is how much and what type of data it can process and how many calculations it can do at the same time.

Read on to learn more about the unique demands of AI, the benefits of AI chip architecture, and finally the applications and future of AI chips.

The Distinct Requirements of AI

Unlike traditional workloads, AI demands compute power on an unprecedented scale. This workload is so strenuous that efficient, cost-effective AI chip design was unattainable before the 2010s. Meeting that demand has led to specialized architectures tailored to the intensive computations of AI algorithms.

Much of the power consumption of AI stems from managing the rapidly growing volumes of data used for training. More input data is good for AI models, but processing that data consumes more power.

1. Massive Parallelism

AI requires massive parallelism of multiply-accumulate functions, such as dot product functions. Consequently, chip architectures must be equipped with the right processors and arrays of memories to handle this parallelism effectively.
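
To make the multiply-accumulate idea concrete, here is a minimal Python sketch of a matrix-vector product written as explicit MAC steps. The function names (`mac_dot`, `matvec`, `mac_count`) and the small weight matrix are hypothetical, chosen only for illustration; real accelerators perform these steps in hardware rather than in a loop.

```python
def mac_dot(weights, activations):
    """Dot product written as an explicit chain of multiply-accumulate steps."""
    if len(weights) != len(activations):
        raise ValueError("vectors must have the same length")
    acc = 0.0
    for w, a in zip(weights, activations):
        acc += w * a  # one multiply-accumulate (MAC)
    return acc


def matvec(weight_rows, activations):
    """Matrix-vector product: one independent dot product per output row.

    No row depends on another, so each dot product could be assigned
    to its own MAC unit and computed at the same time.
    """
    return [mac_dot(row, activations) for row in weight_rows]


def mac_count(n_rows, n_cols):
    """Number of MAC operations in an n_rows x n_cols matrix-vector product."""
    return n_rows * n_cols


# Hypothetical 2x3 layer; the values are made up for illustration.
W = [[1.0, 2.0, 3.0],
     [0.5, -1.0, 0.0]]
x = [2.0, 1.0, 0.5]

print(matvec(W, x))     # [5.5, 0.0]
print(mac_count(2, 3))  # 6
```

The MAC count grows with the product of the layer dimensions, while the rows stay independent of one another. That independence is what lets an array of processing elements work on them in parallel.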

In the design process, parameters such as weights and activations are crucial considerations, since the architecture can be optimized around them for greater efficiency. Software and hardware design for AI must also be integrated so that the two work seamlessly together. Optimizing only one of them leads to suboptimal results and inefficiencies, so a holistic approach that considers both is essential.

Additionally, as we reach the limits of what can be achieved on a single chip, multiple-chip systems are now being employed for AI.

2. Memory Requirements

AI deals with enormous amounts of data. Moving this data around efficiently is crucial for fast and accurate AI processing. Current chip architectures may not be optimized for the specific data access patterns of AI algorithms.
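
A rough back-of-the-envelope calculation shows why data movement matters. The sketch below estimates how many bytes a model's weights occupy and how long it takes to stream them once over a memory link. The parameter count, precision, and bandwidth are assumptions picked for round numbers, not figures for any particular chip or model.

```python
def weight_bytes(num_params, bytes_per_param):
    """Memory needed to hold the model weights."""
    return num_params * bytes_per_param


def transfer_seconds(num_bytes, bandwidth_bytes_per_s):
    """Time to move num_bytes once over a link of the given bandwidth."""
    return num_bytes / bandwidth_bytes_per_s


# Assumed values for illustration only.
params = 1_000_000_000   # hypothetical 1-billion-parameter model
bytes_per_param = 2      # 16-bit weights take 2 bytes each
bandwidth = 100e9        # hypothetical 100 GB/s memory link

size = weight_bytes(params, bytes_per_param)
print(size / 1e9)                         # 2.0 (gigabytes)
print(transfer_seconds(size, bandwidth))  # 0.02 (seconds per full pass)
```

Under these assumptions, every full pass over the weights costs about 20 milliseconds of pure data movement before any arithmetic is counted.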

Traditional computer chips, like CPUs and GPUs, were designed for tasks that differ from AI workloads, which is why AI needs a new architectural approach.

In simpler terms, AI is like a juggler that needs a stage built for throwing many balls in the air at once, while traditional chips were designed for a single ball, or a few at most. New AI chip architectures are being developed to better handle the unique needs of AI.

Flash memory, while efficient for many storage purposes, is not typically suitable for AI training as it has relatively slow write speeds compared to other memory technologies such as DRAM. AI training involves frequent read and write operations to manipulate large datasets, requiring high-speed memory access to optimize processing times.

Scalability is another factor. Huge datasets require more storage units, but more storage units take up more space, which is in short supply. To increase storage density, we need memory technologies that scale easily to smaller process nodes such as 10 nm and below. Packaging technologies like 3D stacking play a significant role as well.

Why is inference so hard?

AI inference is the process of using trained models to make predictions or decisions based on new data inputs.
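
As a minimal sketch of what this means in practice, the Python snippet below applies a fixed set of weights to a new input and returns the highest-scoring class. The `predict` function, the weights, and the biases are hypothetical stand-ins for a trained model; a real network would have many layers and far more parameters.

```python
def predict(weights, biases, features):
    """Score each class as a weighted sum plus a bias, then pick the best."""
    scores = [
        sum(w * f for w, f in zip(row, features)) + b
        for row, b in zip(weights, biases)
    ]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores


# Hypothetical "trained" parameters for a two-class model.
weights = [[1.0, -1.0],
           [-0.5, 1.0]]
biases = [0.0, 0.5]

# New, unseen input.
label, scores = predict(weights, biases, [1.0, 2.0])
print(label)   # 1
print(scores)  # [-1.0, 2.0]
```

The weights never change during inference. The work consists entirely of reading them and performing multiply-accumulate operations on the incoming data.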

Reaching human-like inference performance with neural networks requires an exponential increase in AI model complexity and computing throughput. However, faster, more efficient neural net processing won’t come from scaling up the number of physical processors, as investments in traditional server clusters are hitting a computational cost wall.

Standard computing architectures like CPUs and GPUs are crowded with hardware features and elements that offer no benefit to inference performance. To perform more and more operations per second, chips have become larger and much more complex. They feature multiple cores, multiple threads, on-chip networks, and complicated control circuitry.

Developers face challenges in accelerating software performance and output in machine learning models. These challenges include dealing with complicated programming models, security problems, and loss of visibility into compiler control. Layers of processing abstraction further complicate the situation. Achieving higher machine learning performance within these constraints requires laborious hand-tuning optimization. This optimization relies on intimate knowledge of the hardware architecture.

AI Chip Architecture Applications and the Future Ahead

There’s no doubt that we are in the renaissance of AI. AI processors are being put into almost every type of chip, from the smallest IoT chips to the largest servers, data centers, and graphics accelerators. The industries that require higher performance will of course use AI chip architecture more. As AI chips become cheaper to produce, however, we will begin to see AI chip architecture in places like IoT, where it can optimize power and enable other optimizations that we may not even know are possible yet.

In terms of memory, chip designers are beginning to put memory right next to or even within the actual computing elements of the hardware to make processing much faster. This approach is known as in-memory computing.

Additionally, software is driving the hardware: new AI models, such as new kinds of neural networks, require new AI chip architectures. The emergence of neuromorphic chips for spiking neural networks is a prominent example.

There are a few promising areas of exploration for new AI chip architectures:

- Specialized Processors: Instead of general-purpose CPUs, AI chips are incorporating hardware designed specifically for the mathematical operations used in neural networks, a common type of AI algorithm. This allows for faster and more efficient processing of AI tasks.

- Memory Hierarchy: New architectures focus on placing different types of memory closer to the processing units that need them. This reduces the time and energy needed to move data around. For instance, chips may include specialized memory optimized for the way AI algorithms access data.

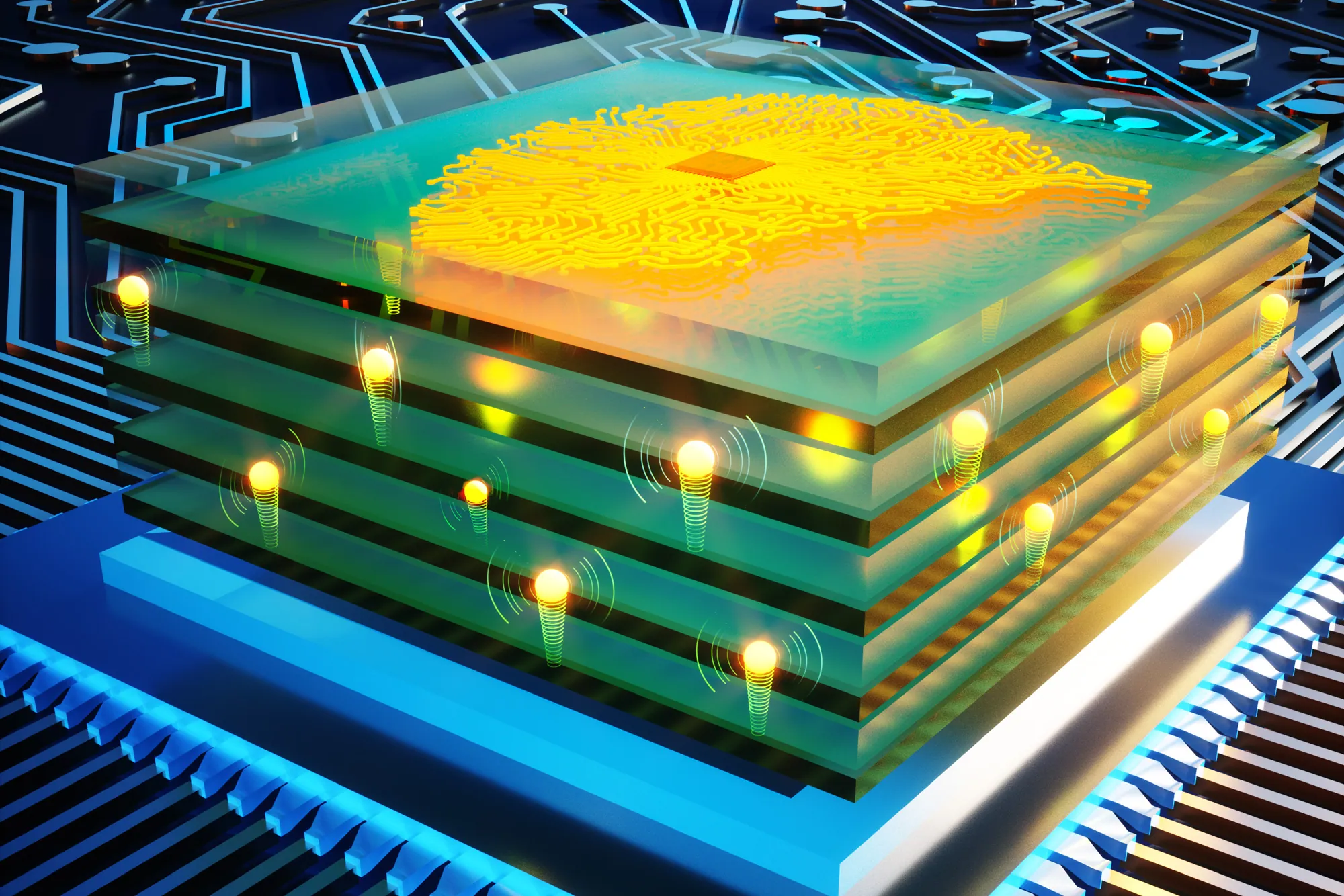

- Multi-die Architectures: As cramming more and more transistors onto a single chip runs into limitations, one approach is to combine multiple smaller chips, each optimized for a specific task, in a single package. This allows for more flexibility and better performance.

Overall, AI chip design is moving towards a more specialized approach, with different components working together to achieve the best possible performance and efficiency for AI workloads.

Photonics and Heterogeneous Integration

Finally, we’ll see photonics and multi-die systems come more into play in new AI chip architectures to overcome some of the AI chip bottlenecks. Photonics provides a much more power-efficient way to do computing. Multi-die systems, which involve the heterogeneous integration of dies, often with memory stacked directly on top of compute boards, can also improve performance because they increase the possible connection speed between processing elements and between processing and memory units.

Conclusion

The realm of AI chip architecture is marked by exponential growth and innovation. The pervasive nature of AI across industries necessitates a fundamental shift in chip architecture to meet the unprecedented demands of AI workloads.

By comprehensively addressing the distinct demands of AI, traditional chip architectures can be morphed into AI processing units.