Introduction

In a monumental leap forward for the field of artificial intelligence, NVIDIA has unleashed its latest innovation: the Blackwell Beast.

Representing a significant departure from conventional chips, the Blackwell Beast heralds the dawn of a new era in AI computing, offering unparalleled performance and scalability for trillion-parameter-scale generative AI models.

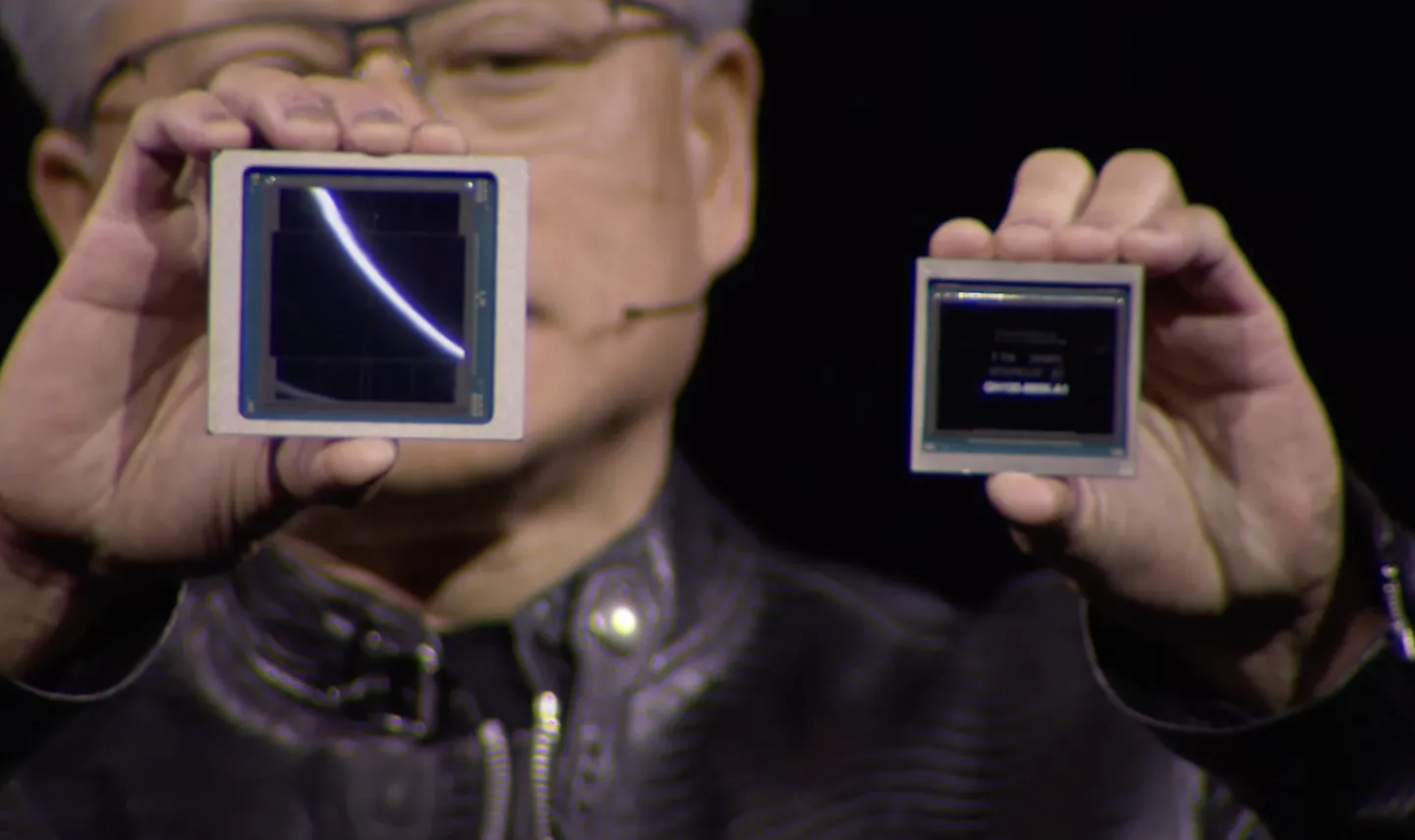

Powerhouse for AI: This chip is designed specifically for artificial intelligence tasks, and NVIDIA claims it can handle models with up to 27 trillion parameters. That’s a massive leap in supported model size.

Intricate Design: The B200 GPU joins two dies with a 10 TB/s chip-to-chip link so that they behave as a single GPU, and this dense internal connectivity contributes to its power.
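To put the 27 trillion parameter claim in perspective, here is a minimal sketch of how much memory the model weights alone would occupy at different numeric precisions. It assumes only weight storage is counted (no activations, KV cache, or optimizer state), and the helper name `weight_memory_tb` is hypothetical, introduced just for this illustration.

```python
# Rough weight-storage estimate for a 27 trillion parameter model.
# Assumption: only the weights are counted, nothing else held in GPU memory.

def weight_memory_tb(num_params: float, bits_per_param: int) -> float:
    """Return terabytes (10**12 bytes) needed to store the weights alone."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e12

PARAMS = 27e12  # parameter count quoted in the article

for name, bits in [("FP16", 16), ("FP8", 8), ("FP4", 4)]:
    print(f"{name}: {weight_memory_tb(PARAMS, bits):.1f} TB of weights")
```

Halving the bits per parameter halves the storage, which is one reason low-precision formats such as FP4 matter at this scale.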

Performance Enhancements

At the heart of this groundbreaking advancement lies the B200 GPU, a technological marvel boasting up to 20 petaflops of FP4 horsepower fueled by an astonishing 208 billion transistors.

Moreover, this raw computational power is further amplified by the GB200, which integrates two B200 GPUs alongside a Grace CPU. According to NVIDIA, it delivers up to a 30x improvement in LLM inference workload performance compared to its predecessors, all while operating at up to 25x greater efficiency than the H100.

Energy and Cost Efficiency

The Blackwell Beast isn’t just about raw power; it’s also about efficiency. According to NVIDIA, training a 1.8 trillion parameter model now demands a mere 2,000 Blackwell GPUs and 4 megawatts of power, a fraction of the 8,000 Hopper GPUs and 15 megawatts previously required for the same task.

This remarkable improvement in energy and cost efficiency represents a seismic shift in the landscape of AI computing, making large-scale AI projects more accessible and sustainable than ever before.
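The training figures above can be turned into simple ratios. The sketch below uses only the numbers quoted in this article (2,000 Blackwell GPUs at 4 megawatts versus 8,000 Hopper GPUs at 15 megawatts) and assumes both clusters are compared on the same training job; the helper names are hypothetical.

```python
# Figures quoted in the article for training a 1.8 trillion parameter model.
HOPPER = {"gpus": 8000, "megawatts": 15.0}
BLACKWELL = {"gpus": 2000, "megawatts": 4.0}

def ratio(old: float, new: float) -> float:
    """How many times smaller the new figure is than the old one."""
    return old / new

def kw_per_gpu(cluster: dict) -> float:
    """Cluster power divided evenly across GPUs, in kilowatts (not chip TDP)."""
    return cluster["megawatts"] * 1000 / cluster["gpus"]

gpu_reduction = ratio(HOPPER["gpus"], BLACKWELL["gpus"])
power_reduction = ratio(HOPPER["megawatts"], BLACKWELL["megawatts"])

print(f"GPU count reduction: {gpu_reduction:.2f}x")
print(f"Power reduction:     {power_reduction:.2f}x")
print(f"Hopper kW per GPU:    {kw_per_gpu(HOPPER):.3f}")
print(f"Blackwell kW per GPU: {kw_per_gpu(BLACKWELL):.3f}")
```

On these numbers the per-GPU share of cluster power is roughly the same for both generations, so the saving comes almost entirely from needing a quarter as many GPUs.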

Benchmark Performance

In head-to-head comparisons against its predecessors, the Blackwell Beast reigns supreme.

On a GPT-3 benchmark with 175 billion parameters, NVIDIA says the GB200 outshines the H100 by a staggering margin, offering 7x greater performance and 4x faster training speeds.

These results underscore the Blackwell Beast’s dominance in the realm of AI computing, setting a new standard for performance and efficiency in the industry.

Key Technical Improvements

Beyond its sheer computational prowess, the Blackwell Beast introduces a host of key technical advancements designed to push the boundaries of AI research and development.

These include a second-gen transformer engine that doubles compute, bandwidth, and model size, as well as a new NVLink switch that enhances GPU communication, letting up to 576 GPUs talk to each other with a blistering 1.8 TB/s of bidirectional bandwidth per GPU.

Large-Scale AI Systems

To accommodate the growing demands of large-scale AI training and inference tasks, NVIDIA has introduced the GB200 NVL72 racks, each comprising 36 CPUs and 72 GPUs.

These formidable systems are capable of delivering up to 1.4 exaflops of inference performance, providing researchers and developers with the tools they need to tackle the most complex and ambitious AI projects with confidence.
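As a quick sanity check, the rack-level figure lines up with the per-GPU numbers quoted earlier. The sketch below assumes the 1.4 exaflops claim refers to peak FP4 throughput and that throughput simply adds up across GPUs; neither assumption is stated in the article.

```python
# Per-device figures quoted in this article.
B200_FP4_PETAFLOPS = 20   # "up to" peak per B200 GPU
GPUS_PER_RACK = 72        # GB200 NVL72
CPUS_PER_RACK = 36
GPUS_PER_GB200 = 2        # each GB200 pairs two B200 GPUs with one Grace CPU

# Assumption: peak throughput scales linearly with GPU count.
rack_petaflops = GPUS_PER_RACK * B200_FP4_PETAFLOPS
rack_exaflops = rack_petaflops / 1000

# Number of GB200 units implied by the GPU count.
superchips_per_rack = GPUS_PER_RACK // GPUS_PER_GB200

print(f"Peak FP4 per rack: {rack_petaflops} petaflops ({rack_exaflops:.2f} exaflops)")
print(f"GB200 units per rack: {superchips_per_rack} (CPUs quoted: {CPUS_PER_RACK})")
```

Seventy-two GPUs at 20 petaflops each come to 1,440 petaflops, which matches the "up to 1.4 exaflops" figure, and 36 GB200 units account for the 36 CPUs.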

Here’s a table summarizing the key features and improvements of NVIDIA’s Blackwell Beast:

| Feature | Description |
|---|---|
| Performance Enhancements | |
| B200 GPU | Up to 20 petaflops of FP4 horsepower with 208 billion transistors |
| GB200 | Combines two B200 GPUs and a Grace CPU for 30x LLM inference workload performance, 25x efficiency over H100 |
| Energy and Cost Efficiency | |
| Training Efficiency | Training a 1.8 trillion parameter model now takes 2,000 Blackwell GPUs and 4 megawatts, compared to 8,000 Hopper GPUs and 15 megawatts previously |
| Benchmark Performance | |
| GB200 vs. H100 | 7x more performant on GPT-3 benchmark, 4x faster training speed |
| Key Technical Improvements | |
| Second-gen Transformer Engine | Doubles compute, bandwidth, and model size |
| NVLink Switch | Enhanced GPU communication, allowing 576 GPUs to connect with 1.8 TB/s bandwidth |
| Large-Scale AI Systems | |
| GB200 NVL72 Racks | Combine 36 CPUs and 72 GPUs for up to 1.4 exaflops of inference performance |

Conclusion

With the unveiling of the Blackwell Beast, NVIDIA has once again raised the bar for AI computing, ushering in a new era of unprecedented performance, efficiency, and scalability.

From its revolutionary architecture to its groundbreaking technical innovations, the Blackwell Beast represents the pinnacle of AI engineering, empowering researchers and developers to unlock new frontiers in artificial intelligence. Its processing capabilities not only accelerate AI research but also foster the development of AI-driven solutions that promise to revolutionize diverse sectors.

As we embark on this exciting journey towards trillion-parameter scale generative AI, one thing is clear: the age of the Blackwell Beast has arrived, and the possibilities are limitless.