Introduction:

Google recently made waves in the tech industry with the announcement of its latest advancement in artificial intelligence processing: the Trillium chip.

Trillium is the sixth generation of Google’s TPU (tensor processing unit), and it departs from the version-number naming used for earlier generations. TPUs are not general-purpose processors like CPUs. They are built for the matrix-heavy computation required to train and run machine learning models, and they support frameworks such as TensorFlow, JAX, and PyTorch.

With a focus on unparalleled performance and energy efficiency, Trillium is poised to revolutionize AI computing.

Performance Boost:

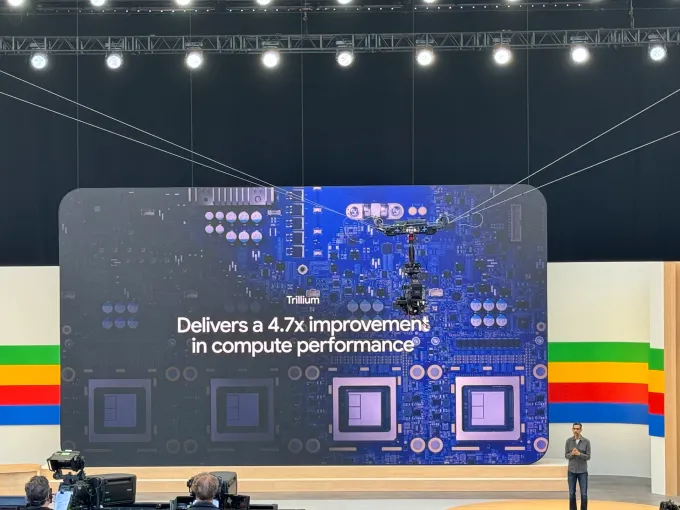

- Achieves a 4.7x increase in peak compute performance per chip over its predecessor, the TPU v5e, making it nearly five times faster

Efficiency:

- 67% more energy efficient than the TPU v5e
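
Taken together, the two headline figures hint at how chip power may have changed. The sketch below is back-of-the-envelope arithmetic only: it assumes that "energy efficient" means peak compute per watt, which Google's announcement does not spell out, and the function names are illustrative.

```python
# Headline figures quoted above, both relative to the TPU v5e.
COMPUTE_SPEEDUP = 4.7   # peak compute performance per chip
EFFICIENCY_GAIN = 1.67  # "67% more energy efficient"


def implied_power_ratio(speedup: float, efficiency_gain: float) -> float:
    """Relative peak power, ASSUMING efficiency = peak compute / power."""
    return speedup / efficiency_gain


def relative_energy_per_op(efficiency_gain: float) -> float:
    """Energy per operation relative to the baseline, same assumption."""
    return 1.0 / efficiency_gain


power_ratio = implied_power_ratio(COMPUTE_SPEEDUP, EFFICIENCY_GAIN)
energy_ratio = relative_energy_per_op(EFFICIENCY_GAIN)

print(f"implied peak power vs. v5e:   {power_ratio:.2f}x")
print(f"energy per operation vs. v5e: {energy_ratio:.2f}x")
```

Under that assumption, a Trillium chip would draw roughly 2.8 times the power of a v5e at peak while spending about 40% less energy per operation. Real workloads rarely run at peak, so treat this as an upper-level estimate rather than a measured result.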

Applications:

- Designed to power large language models and recommender systems

- This means it can be used for tasks like text generation, image creation, and recommendation algorithms.

Availability:

- Expected to be available to Google Cloud customers in late 2024.

Architecture:

- Doubles the High Bandwidth Memory (HBM) capacity and bandwidth of the previous generation.

- Includes third-generation SparseCore, a specialized accelerator for the very large embeddings common in ranking and recommendation workloads

- Designed to be deployed in pods of 256 chips for scalability

- Integrates with Google Cloud’s AI Hypercomputer, a supercomputing architecture for AI workloads.

Overall, the Trillium chip represents a significant advancement in Google’s AI processing capabilities. It promises faster, more efficient performance for demanding AI tasks.

A Shift in Naming and Performance

Trillium marks a significant departure from Google’s naming conventions. It opts for a botanical moniker inspired by the flower of the same name. This choice reflects a shift towards nature-inspired branding. Trillium symbolizes growth, balance, and harmony. The name hints at Google’s vision for AI technology to seamlessly integrate with the natural world.

This move distinguishes Trillium from AWS’s Trainium and underscores Google’s commitment to innovation and differentiation in the AI hardware space.

The Trillium chip builds upon the success of its predecessors, particularly the TPU v5e, against which its headline gains are measured. With enhancements in both performance and efficiency, Trillium sets a new standard for AI processing capabilities.

Google touts Trillium as “the most performant and most energy-efficient TPU to date,” promising groundbreaking advancements in AI computing.

Technical Specifications

Trillium boasts several key technical features that position it as a powerhouse in AI processing. The chip incorporates next-generation HBM (High Bandwidth Memory) technology, offering twice the capacity and bandwidth of the TPU v5e.

Moreover, Trillium introduces advancements in inter-chip communication, facilitating faster data transfer within server pods.

With communication speeds of up to 3,200 Gbps, Trillium enables seamless coordination and collaboration among interconnected chips, optimizing performance in large-scale AI deployments.
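
To put the 3,200 Gbps figure in perspective, the sketch below estimates how long it would take to move a block of data between chips. It assumes the link sustains its peak rate and ignores latency and protocol overhead; the function name and the 1 GB payload are illustrative.

```python
LINK_GBPS = 3200  # peak inter-chip figure quoted above, in gigabits per second


def transfer_seconds(num_bytes: float, link_gbps: float = LINK_GBPS) -> float:
    """Idealized transfer time: payload bits divided by link bits per second."""
    bits = num_bytes * 8
    return bits / (link_gbps * 1e9)


payload = 1e9  # 1 GB of gradients or activations (hypothetical payload)

full_speed = transfer_seconds(payload)
half_speed = transfer_seconds(payload, link_gbps=LINK_GBPS / 2)

print(f"at {LINK_GBPS} Gbps:      {full_speed * 1e3:.2f} ms")
print(f"at {LINK_GBPS // 2} Gbps: {half_speed * 1e3:.2f} ms")
```

Halving the link speed doubles the idealized transfer time, which is why interconnect bandwidth matters as much as raw compute once a model is spread across many chips.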

Integration with Hypercomputer AI System

Trillium is poised to play a pivotal role in Google’s AI Hypercomputer, where it will complement the Axion CPU to deliver cutting-edge AI capabilities.

By merging storage and software infrastructure tailored for AI applications, the Hypercomputer promises unparalleled efficiency and performance in AI processing tasks.

Furthermore, Google’s strategic partnership with Nvidia enables the Hypercomputer to provide offload access to Nvidia’s GPUs, expanding its versatility and compatibility with diverse AI workloads.

While Trillium and Nvidia GPUs operate independently, the Hypercomputer’s architecture facilitates seamless integration, empowering users to leverage the strengths of both platforms.

Scalability and Deployment

Trillium’s scalability offers a key advantage, allowing the combination of server pods into larger clusters via optical networking.

This enables efficient communication between pods, enhancing the scalability and flexibility of AI infrastructure deployments.

While Trillium chips will primarily be available through Google Cloud, their compatibility with larger clusters underscores Google’s commitment to supporting the evolving needs of AI developers and enterprises.

How Does Google Achieve This?

Google achieves the impressive performance and efficiency gains in the Trillium chip through a combination of architectural advancements:

1. More Power for Processing:

- Enhanced Matrix Multiply Units (MXUs): MXUs are the workhorses of AI chips, performing the calculations crucial for training and running AI models. Trillium boasts larger and faster MXUs compared to its predecessor, the TPU v5e. This translates to significantly increased processing power.

- Higher Clock Speed: In simpler terms, clock speed refers to the number of cycles a chip can complete per second. Trillium operates at a higher clock speed than the v5e, allowing it to execute more instructions in a shorter time.
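
A rough way to see why both MXU size and clock speed matter is the usual peak-throughput estimate for a matrix unit: each cell of the array performs one multiply-accumulate (two floating-point operations) per cycle. The sketch below uses that standard estimate with made-up numbers. The MXU counts, dimensions, and clock rates are hypothetical and are not Trillium or v5e specifications.

```python
def peak_flops(num_mxus: int, mxu_dim: int, clock_hz: float) -> float:
    """Peak FLOP/s for square MXUs doing one multiply-accumulate per cell per cycle."""
    macs_per_cycle = num_mxus * mxu_dim * mxu_dim
    return macs_per_cycle * 2 * clock_hz  # one multiply + one add per MAC


# Hypothetical baseline chip: four 128x128 MXUs at 1.0 GHz.
baseline = peak_flops(num_mxus=4, mxu_dim=128, clock_hz=1.0e9)

# Hypothetical successor: same MXU count, 256x256 MXUs, 1.2 GHz clock.
successor = peak_flops(num_mxus=4, mxu_dim=256, clock_hz=1.2e9)

print(f"baseline:  {baseline / 1e12:.1f} TFLOP/s")
print(f"successor: {successor / 1e12:.1f} TFLOP/s")
print(f"speedup:   {successor / baseline:.1f}x")
```

Doubling the side of the array quadruples the work done per cycle, and the clock increase multiplies on top of that. This is how a chip can post a gain of several times from what look like modest individual changes.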

2. Addressing Memory Bottlenecks:

- Doubled High-Bandwidth Memory (HBM): AI models often require vast amounts of data to be readily accessible. Trillium comes equipped with double the HBM capacity and bandwidth compared to the v5e. This allows larger models to fit on each chip and feeds the compute units faster, reducing the memory bottlenecks that can slow down processing.
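
The effect of memory bandwidth on performance is often reasoned about with the roofline model: attainable throughput is the smaller of the chip's peak compute and its memory bandwidth multiplied by the workload's arithmetic intensity (operations per byte moved). The sketch below applies that model with hypothetical numbers, not published Trillium figures.

```python
def attainable_flops(peak_flops: float, mem_bw_bytes_per_s: float,
                     flops_per_byte: float) -> float:
    """Roofline model: performance is capped by compute or by memory traffic."""
    return min(peak_flops, mem_bw_bytes_per_s * flops_per_byte)


PEAK = 100e12     # hypothetical peak compute, FLOP/s
BASE_BW = 1e12    # hypothetical HBM bandwidth, bytes/s
INTENSITY = 50.0  # hypothetical workload: 50 FLOPs per byte read from HBM

before = attainable_flops(PEAK, BASE_BW, INTENSITY)
after = attainable_flops(PEAK, 2 * BASE_BW, INTENSITY)

print(f"base bandwidth:    {before / 1e12:.0f} TFLOP/s (memory-bound)")
print(f"doubled bandwidth: {after / 1e12:.0f} TFLOP/s")
```

In this example the workload is memory-bound, so doubling bandwidth doubles delivered performance. A workload that is already compute-bound would see no benefit, which is why bandwidth and compute gains tend to be scaled together.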

3. Specialized Hardware for Efficiency:

- Third-Generation SparseCore: SparseCore is a Google-developed accelerator for sparse workloads, chiefly the very large embedding tables used in ranking and recommendation models, where each input touches only a handful of rows and the rest contribute nothing. This new generation of SparseCore further optimizes processing for these models, leading to additional efficiency gains.
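
To illustrate why sparse workloads benefit from dedicated hardware, the sketch below compares two ways of computing the same embedding sum: a dense multiply over every row of the table, and a sparse lookup that touches only the rows that are actually referenced. This is a generic illustration in plain Python, not a description of how SparseCore is implemented, and the table and function names are hypothetical.

```python
def dense_lookup(table: list[list[float]], multi_hot: list[float]) -> list[float]:
    """Multiply a multi-hot vector by the whole table (visits every row)."""
    dim = len(table[0])
    out = [0.0] * dim
    for weight, row in zip(multi_hot, table):
        for d in range(dim):
            out[d] += weight * row[d]
    return out


def sparse_lookup(table: list[list[float]], ids: list[int]) -> list[float]:
    """Gather and sum only the referenced rows (visits len(ids) rows)."""
    dim = len(table[0])
    out = [0.0] * dim
    for i in ids:
        for d in range(dim):
            out[d] += table[i][d]
    return out


# Tiny hypothetical embedding table: 4 items, 2 dimensions each.
table = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
ids = [0, 2]                       # items the user interacted with
multi_hot = [1.0, 0.0, 1.0, 0.0]   # the same information in dense form

print(dense_lookup(table, multi_hot))  # [6.0, 8.0]
print(sparse_lookup(table, ids))       # [6.0, 8.0]
```

Both functions return the same vector, but the sparse version does work proportional to the number of referenced items rather than the size of the table. With production tables holding millions of rows, that difference dominates.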

4. Scalable Pod Design:

- 256-Chip Pods: Trillium chips are designed to be deployed in interconnected pods containing 256 chips each. This allows for massive scalability – hundreds of pods can be combined to tackle even the most demanding AI workloads.
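
The pod arithmetic is easy to work through. The sketch below uses the 256-chip pod size stated above; the pod counts and chip targets are illustrative, and the function names are hypothetical.

```python
import math

CHIPS_PER_POD = 256  # pod size stated in the article


def total_chips(num_pods: int) -> int:
    """Chips available when num_pods full pods are combined."""
    return num_pods * CHIPS_PER_POD


def pods_needed(num_chips: int) -> int:
    """Smallest number of whole pods that provides at least num_chips chips."""
    return math.ceil(num_chips / CHIPS_PER_POD)


print(total_chips(100))     # 25600
print(pods_needed(10_000))  # 40
```

At a hundred pods the cluster already holds more than 25,000 chips, which is where the interconnect and optical networking described earlier become the limiting factor rather than any single chip.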

5. Integration with AI Hypercomputer:

- Cloud-Based Supercomputing: Trillium integrates seamlessly with Google’s AI Hypercomputer platform. This supercomputing architecture distributes workloads across multiple Trillium pods, further boosting overall efficiency and performance.
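
One common way to distribute a training workload across many accelerators is data parallelism: each step's global batch is split into near-equal shards, one per worker. The sketch below shows only that splitting step. It is a generic illustration, not a description of how AI Hypercomputer schedules work, and the function name and batch size are hypothetical.

```python
def shard_sizes(global_batch: int, num_workers: int) -> list[int]:
    """Split a batch into near-equal shards; the first few absorb any remainder."""
    base, extra = divmod(global_batch, num_workers)
    return [base + 1 if i < extra else base for i in range(num_workers)]


# Hypothetical job: a global batch of 1,000 examples across 3 pods.
per_pod = shard_sizes(1000, 3)
print(per_pod)  # [334, 333, 333]

# Each pod can then split its share across its 256 chips the same way.
per_chip = shard_sizes(per_pod[0], 256)
print(max(per_chip), min(per_chip))  # 2 1
```

Keeping shards within one example of each other means no worker waits long for the others at the end of a step, which matters more as the number of pods grows.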

By combining these advancements, Google has engineered a powerful and efficient AI chip that promises to accelerate the development and deployment of next-generation artificial intelligence.

Implications for the AI Landscape

Google’s Trillium chip represents a formidable contender in the ongoing AI arms race, challenging GPU manufacturers like Nvidia and cloud providers such as Microsoft and Amazon.

While TPUs are optimized for Google’s proprietary models, their support for open models like Gemma extends their utility to a broader ecosystem of AI applications.

However, it’s worth noting that TPUs may not cater to all use cases, particularly those reliant on Nvidia’s CUDA architecture.

Google offers Trillium through its cloud platform, making this powerful chip accessible to a wider range of companies without the need for massive upfront investments.

Conclusion

In unveiling Trillium, Google reaffirms its commitment to advancing the frontier of AI processing.

With its gains in performance and energy efficiency and its integration within the AI Hypercomputer, Trillium promises to redefine the possibilities of AI computing.

As Google continues to push the boundaries of innovation, Trillium stands as a testament to the company’s vision of democratizing AI and driving transformative change across industries.