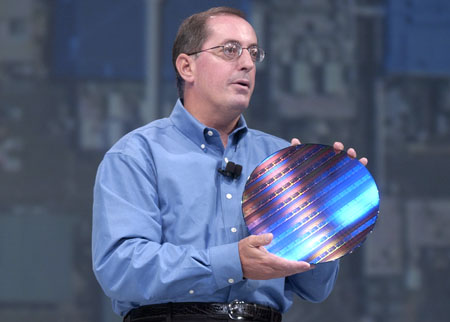

Delve into the genesis of transistors, the emergence of custom LSI chips, and the fierce race to create powerful microprocessors. Join us as we unveil the partnership between Busicom and Intel that birthed the Intel 4004 microprocessor, a trailblazer that ignited a new era of computing.

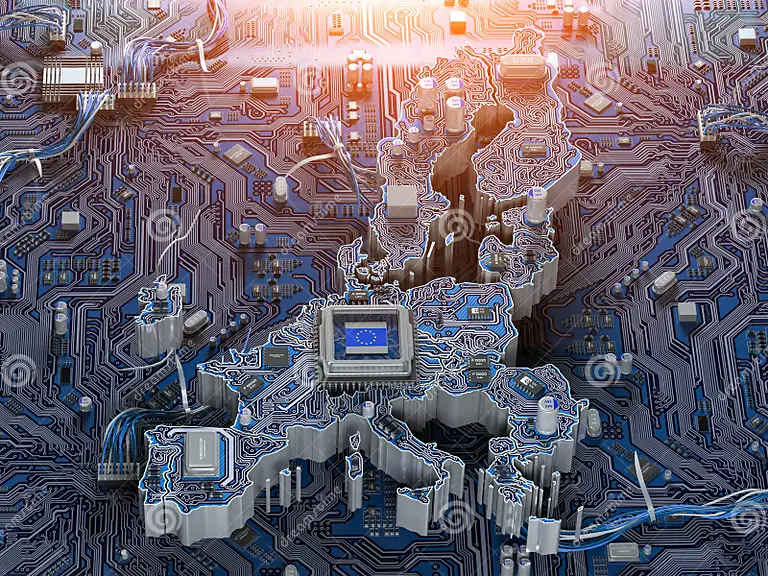

This is not only about semiconductor chips. When it came time to scale inventions up into mass-market products, the United States lost cameras, light bulbs, televisions, hard disks, displays, and silicon chips, among others, to other countries.

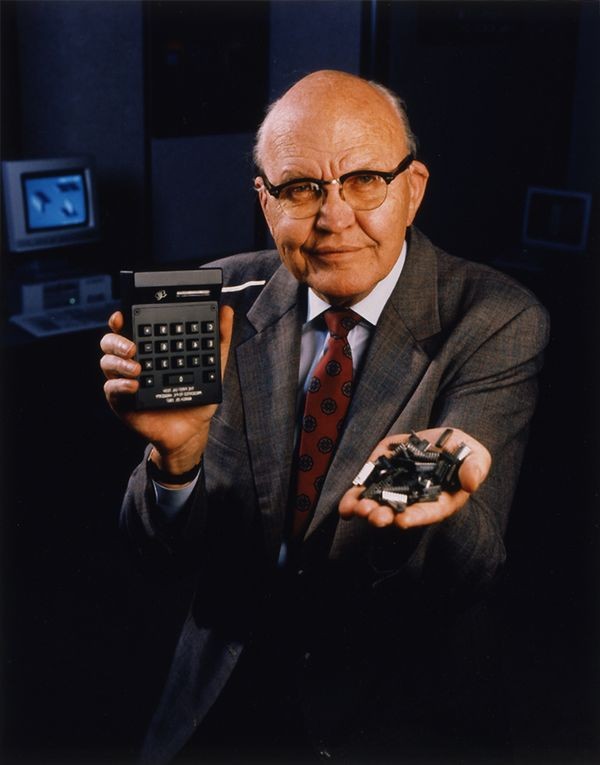

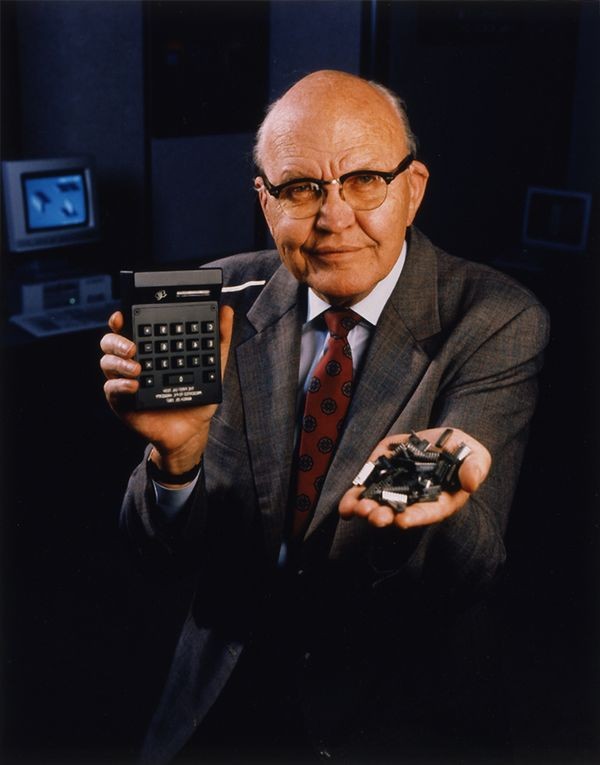

Noyce's semiconductor expertise, Moore's engineering prowess, Hoerni's managerial skills, and Grinich's electronics knowledge, together with the training they had all received under Shockley, became the driving force behind their future endeavor.

A pivotal moment arrived in 1954, when Texas Instruments (TI) developed the world's first commercially viable silicon transistor. That same year, the company reached another milestone by helping create the first commercial transistor radio.

Sony's transistor radio was marketed as a pocket radio, but it was actually slightly too large for a standard shirt pocket. Sony worked around this by having its salespeople wear shirts with custom pockets sized to fit the radio. The trick worked, and the radios sold.

IMEC developed the first EUV light source, a critical component of the EUV lithography machine, as well as the first EUV optics, which focus the light onto the semiconductor wafer.

Steve Jobs approached Intel in 2005 to see if they would be interested in making the chips for the iPhone. However, Intel rejected the offer, believing that the iPhone was a niche product and that the mobile market was not worth pursuing.

Initially, Steve Jobs, Apple's CEO, was not keen on the idea of building a phone. However, Jean-Marie Hullot, who worked for Apple in France, gradually persuaded him.

China has become the world's leading producer of electronics. In 2021, China produced an estimated $1.7 trillion worth of electronics, accounting for about 28% of global production.

In 1990, the US held 40% of global semiconductor manufacturing, but by 2020 its share had fallen to 12%. Taiwan's share, by contrast, rose from 10% in 1990 to 24% in 2020.