Chapter 1: US Militarism vs. Japan’s Consumerism

Introduction

In the realm of modern technology, the humble semiconductor has emerged as a transformative force, enabling remarkable advancements across diverse sectors. From military applications to consumer electronics and beyond, the significance of semiconductors cannot be overstated.

In recent times, the race for semiconductor supremacy has taken center stage, with global superpowers like the USA and China competing to gain an innovation edge.

The first chip war was a period of intense competition between the United States and Japan in the semiconductor industry from the late 1970s to the early 1990s. The US initially dominated the industry, but Japan eventually surpassed it in terms of market share and technological leadership.

This article delves into the history and evolution of the semiconductor industry, highlighting its pivotal role in shaping military, commercial, and technological landscapes.

The Birth of the Semiconductor Industry

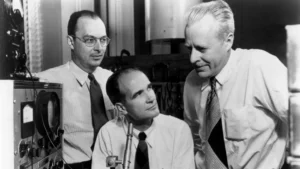

In 1947, William Shockley and his Bell Labs colleagues John Bardeen and Walter Brattain invented the transistor, a groundbreaking innovation aimed at replacing electromechanical telephone switches. Their work was recognized with the Nobel Prize in Physics in 1956. However, despite its eventual success, the transistor had modest beginnings.

The first transistors were noisy, fragile, and expensive to produce, which hindered widespread adoption.

In April 1952, Bell Labs responded by granting licenses to 40 companies, each paying a $25,000 fee, to advance and commercialize the technology. Notably, even a small Japanese firm like Sony obtained a license, sparking competition among various enterprises.

This rivalry spurred efforts to improve transistor performance and cut costs, opening up profitable markets.

USA: The Militarisation of Semiconductors

While Texas Instruments created the first transistor radio in 1954, American companies primarily directed their attention toward the defense and space sectors.

Transistors gained favor in fighter planes and space vehicles due to their lighter weight, reduced space requirements, and lower power consumption compared to vacuum tube devices.

Notably, IBM bought Fairchild’s first batch of transistors at $150 per unit to build an onboard computer for the B-70 bomber, an early example of the technology’s military adoption.

Companies like Fairchild and Texas Instruments embarked on a path of miniaturization, making transistors progressively more appealing than vacuum tubes.

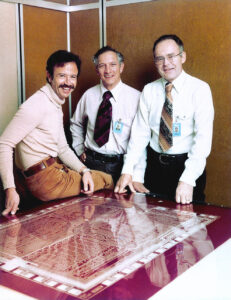

In 1959, Fairchild’s Robert Noyce introduced the first monolithic integrated circuit (IC), paralleling Jack Kilby’s similar achievements at Texas Instruments.

This advancement further elevated the role of transistors. The significance of Noyce’s ICs was underscored by an MIT Instrumentation Laboratory assessment in 1962, which indicated that computers using Noyce’s chips would be one-third smaller and lighter than those built with discrete transistors.

Consequently, the decision to employ Noyce’s ICs for NASA’s lunar mission computers contributed to Fairchild’s revenue soaring from $50,000 in 1958 to $2 million just two years later.

Texas Instruments and other American firms, mirroring Fairchild’s approach, homed in on the defense and space arenas to exploit the potential of transistors.

Texas Instruments, for instance, proposed Jack Kilby’s IC-based computer to the US Air Force’s Minuteman program, aiming to enhance the computing power required for nuclear warhead deployment.

By the close of 1964, Texas Instruments had supplied a remarkable 100,000 ICs exclusively for the Minuteman program’s onboard computer systems.

In 1965, 20% of all ICs delivered went to the Minuteman program, underscoring how silicon-based solutions bolstered US military capabilities during the Cold War.

Japan: Consumerisation of Semiconductors

Unlike their American counterparts, Japanese companies faced constraints on using licensed transistor technology for military purposes.

Consequently, a handful of Japanese companies, Sony among them, turned their attention towards the consumer market.

However, unlike military and space customers, consumers were reluctant to pay a premium for transistor-based radios, televisions, and music players that were lighter but lacked the sound quality of vacuum tube devices.

Despite this challenge, Japanese companies had no alternative but to capitalize on the potential of transistors.

Sony led the charge in Japan’s pursuit of reinventing consumer electronics. The journey commenced with Sony’s introduction of pocket radios, initially seen as inferior alternatives to the more established radio receivers in the USA and Europe.

Yet, Sony and other Japanese firms directed their efforts toward refining transistor design and production processes. This strategic emphasis yielded tangible results as Japanese transistor-based electronic products gained traction, driving an increasing demand for semiconductors.

By 1957, Japan had ascended to the status of the largest semiconductor device producer, a position upheld until 1986.

Contrary to the common assumption, Japan’s success wasn’t solely attributable to subsidies and preferential tax treatment. Rather, Japan’s advantage was rooted in its prowess in advancing transistor technology.

Sony, in particular, distinguished itself by prioritizing scientific discoveries and technological refinement. This commitment culminated in Leo Esaki, who carried out his tunnel diode research at Sony, sharing the Nobel Prize in Physics in 1973.

Consequently, Japan’s incremental approach to innovation, fueled by a quest for creative destruction in consumer electronics, played a pivotal role in eroding the USA’s silicon edge.

This not only established Japan as a top semiconductor producer but also triggered disruptive innovation waves affecting Western firms like RCA.

Other factors

Several other factors helped Japan beat the US in the first chip war.

Government support: The Japanese government provided significant financial and technological support to the chip industry. This included subsidies, tax breaks, and research funding.

Focus on manufacturing: Japanese companies focused on manufacturing chips, while US companies were more focused on design. This gave Japanese companies a cost advantage.

Keiretsu: Japanese companies were part of keiretsu, which are groups of companies that cooperate with each other. This gave Japanese companies an advantage in terms of information sharing and coordination.

Quality control: Japanese companies had a reputation for high quality control, which made their chips more reliable.

Innovation: Japanese companies were innovative in terms of chip design and manufacturing. They introduced new technologies such as flash memory, invented at Toshiba, and came to lead the mass production of dynamic random-access memory (DRAM).

As a result of these factors, Japan was able to surpass the US in the chip industry in the early 1990s.

But the US was waiting around the corner to get back into the war, this time with a new kid on the block: personal computers.

Read Part 2: The PC Revolution: How the US Beat Japan in the second Chip War

Conclusion

The journey of the semiconductor industry from its modest beginnings to its pivotal role in shaping military, commercial, and technological advancements is a testament to human innovation and creativity. The evolution of transistors, the rise of Japan’s semiconductor dominance, and the contemporary chip war highlight the integral role of semiconductors in modern society.