Introduction

Meta is building its next-generation large-scale infrastructure with a focus on artificial intelligence. This involves supporting new generative AI technologies, recommendation systems, and advanced AI research – all areas that are expected to grow significantly in importance in the coming years as AI models become more sophisticated and require vastly more computing power.

Last year, Meta unveiled its first in-house AI inference accelerator called MTIA version 1, which was designed specifically for Meta’s AI workloads such as its deep learning recommendation models that power personalized experiences across Meta platforms.

MTIA represents a long-term venture by Meta to develop the most efficient architecture for its unique AI tasks. By improving the computational efficiency of its infrastructure through solutions like MTIA v1, Meta can better support its software engineers in developing advanced AI systems that will continue to enhance the experiences of billions of users worldwide.

Now, let’s look at the details of Meta’s custom chip.


Under the hood

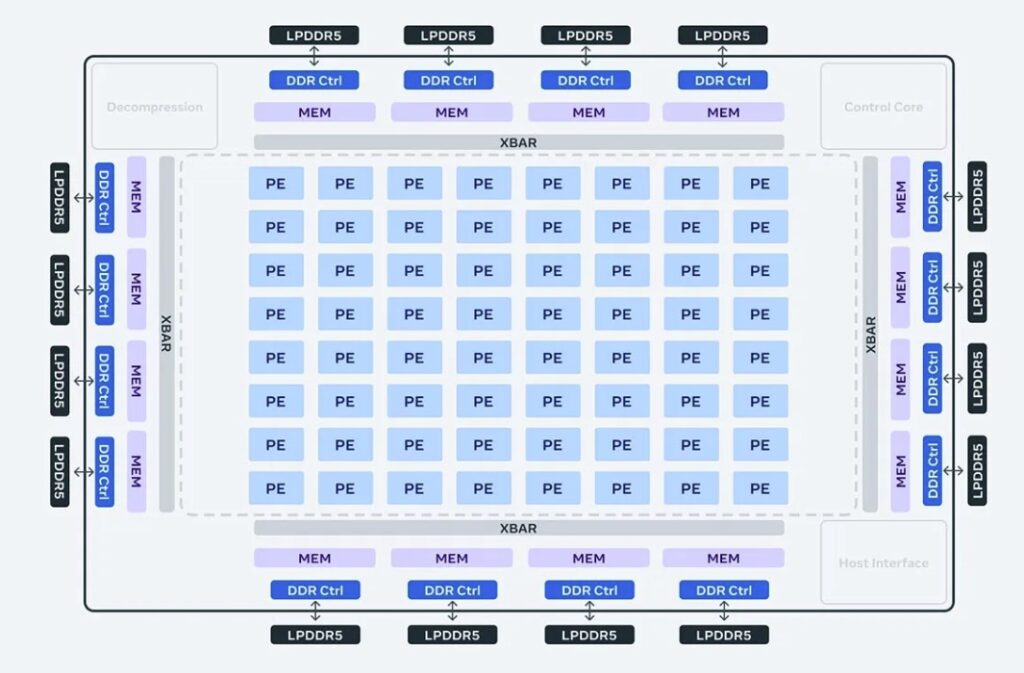

This inference accelerator is part of Meta’s broader full-stack development program for custom, domain-specific silicon that addresses the company’s unique workloads and systems. The new version of MTIA more than doubles the compute and memory bandwidth of its predecessor while keeping the design closely tied to the company’s AI workloads.

Performance Boost:

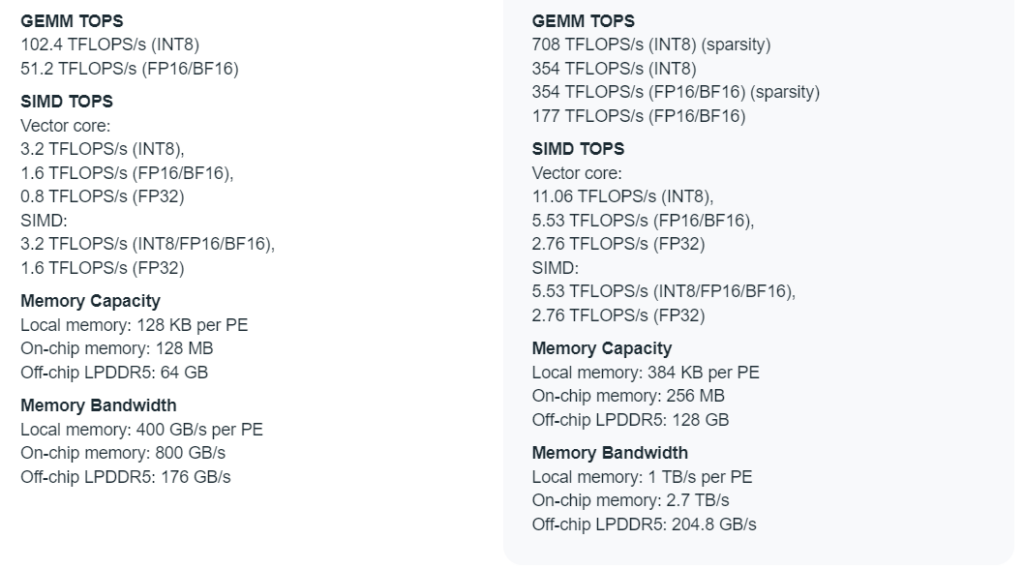

- Compared to MTIA v1, version 2 delivers 3.5 times the dense compute performance.

- For sparse computation (where many of the values are zero, so much of the arithmetic can be skipped), it delivers a 7x improvement.

- These gains come from architectural changes, improved memory, and larger memory capacity.
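To see why sparsity helps, the sketch below counts multiply-accumulate (MAC) operations for a matrix-vector product computed densely versus one that skips zero weights. It is a generic illustration of zero-skipping under an assumed 50% sparse weight pattern, not a description of how MTIA's sparse hardware actually works; the function, matrix, and vector are all hypothetical.

```python
def matvec(matrix, vec, skip_zeros):
    """Return (result, mac_count) for matrix @ vec.

    When skip_zeros is True, zero weights are skipped, mimicking
    hardware that avoids spending work on zero operands.
    """
    result = []
    macs = 0
    for row in matrix:
        acc = 0.0
        for w, x in zip(row, vec):
            if skip_zeros and w == 0.0:
                continue  # no multiply-accumulate issued for a zero weight
            acc += w * x
            macs += 1
        result.append(acc)
    return result, macs


n = 64
# Hypothetical weights in a checkerboard pattern: exactly half are zero.
matrix = [
    [float(i + j + 1) if (i + j) % 2 == 0 else 0.0 for j in range(n)]
    for i in range(n)
]
vec = [1.0 / (j + 1) for j in range(n)]

dense_out, dense_macs = matvec(matrix, vec, skip_zeros=False)
sparse_out, sparse_macs = matvec(matrix, vec, skip_zeros=True)

print(dense_macs, sparse_macs)  # 4096 2048
print(all(abs(a - b) < 1e-9 for a, b in zip(dense_out, sparse_out)))  # True
```

Both versions produce the same result, but the zero-skipping one issues half the operations. Real accelerators exploit sparsity in hardware rather than with a per-element branch like this; the point is only that fewer issued operations means less work for the same answer.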

Technical Specs:

- Manufactured using a more advanced 5-nanometer process compared to the 7-nanometer process of MTIA v1.

- Increased clock speed from 800 MHz to 1.35 GHz.

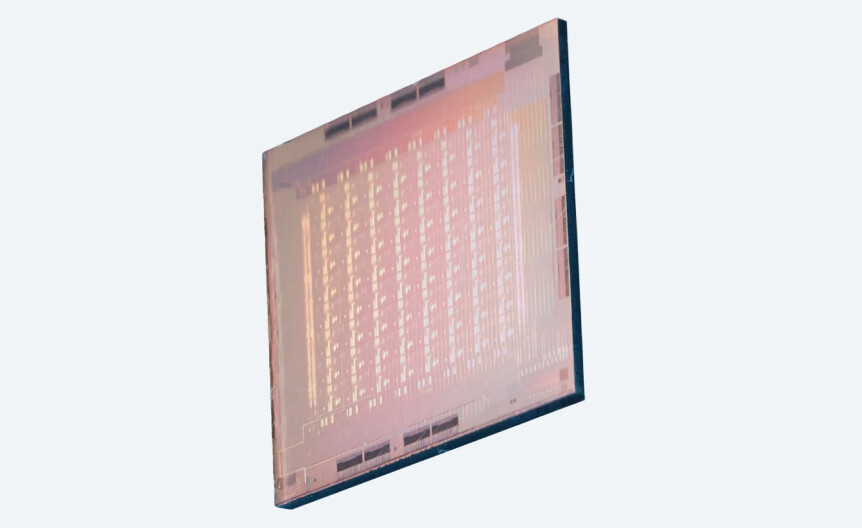

- Larger chip size (421 mm²) to accommodate more on-chip resources.

- Tripled local PE (processing element) storage, doubled on-chip SRAM, and doubled LPDDR5 capacity.
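A back-of-the-envelope calculation shows how much of the 3.5x speedup quoted above the clock increase alone could explain. It assumes, as a simplification, that peak compute scales as clock frequency times work done per cycle; the function name is hypothetical.

```python
def split_speedup(total_speedup, old_clock_ghz, new_clock_ghz):
    """Split a speedup into a clock-frequency part and a per-cycle part,
    assuming speedup = (clock ratio) * (per-cycle work ratio)."""
    clock_gain = new_clock_ghz / old_clock_ghz
    per_cycle_gain = total_speedup / clock_gain
    return clock_gain, per_cycle_gain


clock_gain, per_cycle_gain = split_speedup(3.5, old_clock_ghz=0.80, new_clock_ghz=1.35)
print(f"clock gain: {clock_gain:.2f}x")          # clock gain: 1.69x
print(f"per-cycle gain: {per_cycle_gain:.2f}x")  # per-cycle gain: 2.07x
```

Under this simplified model, roughly 1.7x would come from the higher clock and roughly 2x from doing more work per cycle, which would be in line with the larger die and the added on-chip resources.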

Focus on Inference:

- Like MTIA v1, version 2 is built for AI inference, which uses trained models to make predictions on new data.

- Currently, it is not designed for training AI models, a process that demands far more computational power.

Market Implications:

- This advancement signals Meta’s push to reduce its reliance on expensive GPUs from companies like Nvidia for its AI needs.

- It contributes to a growing trend of tech giants creating custom AI chips optimized for their specific workloads.

Why does Meta need custom chips?

Meta’s primary AI workloads involve utilizing large-scale recommendation models and ranking systems. These systems personalize experiences for users across Meta’s family of apps and services. They analyze each user’s preferences, behaviors, interactions, and demographic information. Then, they recommend new friends, groups, events, pages, articles, and other content based on this analysis.

Meta designed and optimized MTIA specifically to run recommendation and ranking models efficiently at scale in its data centers. Users generate massive amounts of new data daily, which requires the models to be retrained constantly, and recommendations must be re-ranked in real time. MTIA’s architecture aims to provide the right balance of compute power, memory bandwidth, and memory capacity to serve these AI-driven personalization models.
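As a rough illustration of what "ranking" means here, the sketch below scores candidate items by the dot product of a user embedding and each item's embedding, then keeps the top k. This is a common textbook simplification, not Meta's model: production recommendation models combine many embedding lookups with neural network layers. All embeddings and item names are made up. Ranking again after the user's embedding changes shows why re-ranking has to happen continuously as new data arrives.

```python
from dataclasses import dataclass


@dataclass
class Item:
    name: str
    embedding: list[float]


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def rank(user: list[float], candidates: list[Item], k: int) -> list[str]:
    """Return the names of the k candidates with the highest user-item score."""
    ordered = sorted(candidates, key=lambda item: dot(user, item.embedding), reverse=True)
    return [item.name for item in ordered[:k]]


# Hypothetical 3-dimensional embeddings; each dimension is a loose "interest".
candidates = [
    Item("cooking_group", [0.8, 0.1, 0.1]),
    Item("football_page", [0.1, 0.9, 0.0]),
    Item("travel_event", [0.3, 0.2, 0.5]),
]

user_before = [0.9, 0.1, 0.0]  # mostly interested in the first dimension
user_after = [0.2, 0.8, 0.0]   # interests shifted after new interactions

print(rank(user_before, candidates, k=2))  # ['cooking_group', 'travel_event']
print(rank(user_after, candidates, k=2))   # ['football_page', 'cooking_group']
```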


Understanding ‘batch sizes’

This chip’s architecture fundamentally focuses on providing the right balance of compute, memory bandwidth, and memory capacity for serving ranking and recommendation models.

When deep learning models are trained, multiple examples (images, text snippets, etc.) are fed into the network simultaneously as a “batch”. Batching lets the hardware process many examples in parallel and spreads fixed per-step costs across them. Larger batches raise throughput but require more memory; smaller batches use less memory but process fewer examples per second.

The same trade-off applies at inference time. When making predictions, such as recommending products or movies, the number of requests processed together (the batch size) affects how quickly predictions can be served: smaller batches mean lower throughput but lower latency per request, while larger batches raise throughput at the cost of latency. Batch sizes vary significantly across the ranking and recommendation models Meta serves on MTIA, from just a few examples up to hundreds.
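The throughput-versus-latency trade-off can be sketched with a simple cost model: each batch pays a fixed overhead plus a small cost per example. The two constants below are hypothetical placeholders, not MTIA measurements, and real accelerators have more complex cost curves.

```python
# Hypothetical cost model: every batch pays a fixed overhead (e.g. dispatch
# and fetching weights), plus a small incremental cost per example.
FIXED_OVERHEAD_MS = 2.0
PER_SAMPLE_MS = 0.05


def batch_latency_ms(batch_size: int) -> float:
    """Time to finish one batch; every request in it waits this long."""
    return FIXED_OVERHEAD_MS + batch_size * PER_SAMPLE_MS


def throughput_per_s(batch_size: int) -> float:
    """Examples completed per second when batches run back to back."""
    return batch_size / batch_latency_ms(batch_size) * 1000.0


for b in (1, 8, 64, 256):
    print(f"batch={b:4d}  latency={batch_latency_ms(b):6.2f} ms  "
          f"throughput={throughput_per_s(b):8.0f} examples/s")
```

Under this model, throughput climbs steeply at first and then flattens toward 1000 / PER_SAMPLE_MS (20,000 examples/s here), while latency keeps growing linearly. That is why a serving system has to choose a batch size per model rather than simply using the largest one.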

The Rack

For its next-generation silicon, Meta developed a rack design that reportedly houses up to 72 of the new accelerators. Each rack contains three shelves, each holding 12 boards with two chips per board, a dense configuration built to support the new chip’s higher clock speed and power draw.

Meta designed the system specifically so that the chip could be clocked at 1.35 GHz (up from 800 MHz) and run at 90 watts, compared to 25 watts for the first-generation design.
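The rack figures can be checked with simple arithmetic: three shelves of 12 boards with two chips each gives 72 accelerators. The sketch also totals accelerator power per full rack; the 25 W line is only a what-if comparison using the first-generation chip's power at the same chip count, and host CPUs, networking, and cooling are not included.

```python
SHELVES_PER_RACK = 3
BOARDS_PER_SHELF = 12
CHIPS_PER_BOARD = 2
CHIP_POWER_W = {"MTIA v1": 25, "MTIA v2": 90}

chips_per_rack = SHELVES_PER_RACK * BOARDS_PER_SHELF * CHIPS_PER_BOARD
print(f"accelerators per rack: {chips_per_rack}")  # accelerators per rack: 72

for name, watts in CHIP_POWER_W.items():
    # Accelerator power only: hosts, networking, and cooling are excluded.
    print(f"{name}: {chips_per_rack * watts} W of accelerator power per full rack")
```

This gives 6,480 W of accelerator power for a full rack of MTIA v2 chips, versus 1,800 W if the same 72 slots held 25 W first-generation parts.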


Performance Results

The results so far show that the new MTIA chip can handle both the low-complexity (LC) and high-complexity (HC) ranking and recommendation models that power Meta’s products. Across these models, model size and compute per input sample can differ by roughly 10x to 100x. Because Meta controls the whole stack, it can achieve greater efficiency on these workloads than with commercially available GPUs.

Early results show that the next-generation silicon already delivers 3x the performance of the first-generation chip across four key models Meta evaluated. At the platform level, with 2x the number of devices and a powerful 2-socket CPU, Meta achieves 6x the model-serving throughput and a 1.5x improvement in performance per watt over the first-generation MTIA system.
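The platform-level figures fit together with simple arithmetic. Assuming throughput scales linearly with device count, 2x the devices at roughly 3x per-device performance gives about 6x throughput. And since performance per watt is throughput divided by power, a 6x throughput gain at 1.5x better performance per watt implies the new platform draws roughly 4x the power of the first-generation system. That last figure is an inference from the quoted numbers, not something Meta states; the function name is hypothetical.

```python
def platform_gains(device_ratio: float, per_device_speedup: float,
                   perf_per_watt_gain: float) -> tuple[float, float]:
    """Return (throughput gain, implied power ratio) for a platform upgrade.

    Assumes throughput scales linearly with device count, and uses
    perf_per_watt = throughput / power, so power = throughput / perf_per_watt.
    """
    throughput_gain = device_ratio * per_device_speedup
    power_ratio = throughput_gain / perf_per_watt_gain
    return throughput_gain, power_ratio


throughput_gain, power_ratio = platform_gains(2.0, 3.0, 1.5)
print(f"throughput: {throughput_gain:.1f}x, implied power: {power_ratio:.1f}x")
# throughput: 6.0x, implied power: 4.0x
```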

Here is a numerical comparison of Meta’s MTIA v2 and Nvidia’s H100 GPU:

- Performance:

  - MTIA v2 reaches 708 TOPS of sparse INT8 compute, while the H100 delivers roughly 4 petaflops of FP8 compute (with sparsity). The two figures use different number formats, so they are not directly comparable.

- Power efficiency:

  - MTIA v2 achieves 7.8 TOPS/watt, ahead of Nvidia’s H100 SXM at 5.65 TOPS/watt.

- Cost:

  - Pricing for MTIA v2 is not public, but estimates suggest it could be significantly cheaper than the H100’s $30,000+ price tag.
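The efficiency figures are peak throughput divided by rated power. The sketch reproduces them, assuming MTIA v2's 708 sparse INT8 TOPS at its 90 W rating and, for the H100 SXM, NVIDIA's published figure of about 3,958 sparse FP8 TFLOPS at up to 700 W (these two H100 inputs are not from this article). Peak datasheet numbers say little about delivered efficiency on real models, and the number formats differ, so treat the comparison as rough.

```python
def peak_efficiency(peak_tera_ops: float, power_w: float) -> float:
    """Peak tera-operations per second delivered per watt of rated power."""
    return peak_tera_ops / power_w


chips = {
    "MTIA v2 (sparse INT8)": (708.0, 90.0),
    "H100 SXM (sparse FP8)": (3958.0, 700.0),
}

for name, (peak, watts) in chips.items():
    print(f"{name}: {peak_efficiency(peak, watts):.2f} TOPS/W")
# MTIA v2 (sparse INT8): 7.87 TOPS/W
# H100 SXM (sparse FP8): 5.65 TOPS/W
```

The results, about 7.87 and 5.65 TOPS/W, are close to the 7.8 and 5.65 figures quoted above.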

Key takeaway: MTIA v2 trades raw performance for superior efficiency on specific tasks, potentially at a lower cost.

Conclusion

MTIA has been deployed in Meta’s data centers and is now serving models in production. Meta is already seeing positive results from the program, as it allows the company to dedicate and invest more compute in its more intensive AI workloads. MTIA is proving highly complementary to commercially available GPUs in delivering the optimal mix of performance and efficiency on Meta-specific workloads.